Picture yourself at 10 p.m. on a Wednesday, digging through Salesforce for Q3 account metrics, cross-referencing support tickets in Zendesk, and copying engagement data from four different dashboards.

You're racing to finish your customer health report before tomorrow morning's executive briefing. Three hours are lost every week to data assembly, and half the numbers are outdated before stakeholders open the file.

Fortunately, AI agents eliminate this weekly grind. Moving from manual data collection to automated report generation removes the aggregation bottleneck, transforming how you surface insights for decision-makers.

AI agents pull information from scattered systems and build reports while you concentrate on interpretation and strategic recommendations.

Here are the seven steps to implement AI agents for report writing in your organization.

Step #1: Identify Your Automation Target

Most organizations generate dozens of reports weekly: project status updates, account health scorecards, compliance summaries, performance dashboards, and risk assessments.

Manual compilation doesn't just consume time; it delays decisions and burns hundreds of labor hours annually on repetitive data assembly.

Start with one specific report type where AI will deliver immediate ROI. Target areas where three factors converge: high frequency, scattered data sources, and critical business impact.

For instance, in a project management context:

- Weekly status reports sent to stakeholders every Friday

- Monthly risk assessments requiring data from six different systems

- Compliance documentation pulling from project files and regulatory databases

- Resource allocation reports combining timesheet data with budget actuals

Multiply these factors (frequency × data sources × business impact) to calculate an impact score. This reveals exactly where AI first pays for itself.
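As a rough illustration of the scoring above, the sketch below multiplies the three factors for a few of the example reports and ranks them. The 1-to-5 impact scale, the field names, and the sample values are assumptions made for this example, not figures from a real report portfolio.

```python
from dataclasses import dataclass


@dataclass
class ReportCandidate:
    name: str
    runs_per_month: int   # frequency
    data_sources: int     # systems accessed to compile the report
    business_impact: int  # assumed scale: 1 (low) to 5 (critical)

    @property
    def impact_score(self) -> int:
        # frequency x data sources x business impact
        return self.runs_per_month * self.data_sources * self.business_impact


def rank_candidates(candidates):
    """Return candidates sorted from highest to lowest impact score."""
    return sorted(candidates, key=lambda c: c.impact_score, reverse=True)


# Illustrative values only
candidates = [
    ReportCandidate("Monthly risk assessment", 1, 6, 5),
    ReportCandidate("Weekly status report", 4, 3, 3),
    ReportCandidate("Resource allocation report", 2, 2, 4),
]

ranked = rank_candidates(candidates)
for candidate in ranked:
    print(f"{candidate.name}: {candidate.impact_score}")
```

With these sample values the weekly status report ranks first, because its frequency outweighs the risk assessment's larger number of data sources.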

Track baseline metrics before automating: average compilation time per report, number of systems accessed manually, and stakeholder complaints about stale data. Concrete numbers prove whether your pilot works within weeks, not quarters.

The sweet spot sits where all three criteria overlap. If you're compiling a report weekly or daily, pulling data from multiple disconnected systems, and the output directly influences budget decisions or compliance standing, you've found your automation candidate.

These reports consume the most manual effort and create the biggest operational drag when delayed or inaccurate.

Pilot one report type, refine the automation, then expand with confidence.

Step #2: Choose the Right AI Agent Platform

Most report automation failures stem from teams choosing platforms built for analytics dashboards, not for operational reporting. You need agents that aggregate data across systems, apply business logic, and distribute outputs on schedule without manual intervention.

Two paths solve this. The first is to build your own system from open-source models, which gives you control but requires data engineering expertise and ongoing maintenance.

The second is to deploy a platform that automatically handles multi-source agent orchestration.

When evaluating AI agent platforms, three capabilities separate effective solutions from expensive disappointments:

- Data aggregation comes first: Agents must connect natively to your existing systems and sync automatically. Platforms that require weekly CSV exports or manual data uploads simply shift the bottleneck rather than eliminate it.

- Template flexibility ranks second: You need conditional formatting, threshold-based alerts, and calculation rules that work across multiple report types. If changing a formula requires editing code or contacting support, maintenance costs will exceed manual compilation.

- Distribution automation closes the evaluation: Agents should handle scheduled generation, event-based triggers, and multi-format outputs without custom scripting. Reports that generate automatically but require manual distribution haven't solved the real problem.

Test every vendor with your actual report requirements before committing. Run a pilot with one high-impact report type and measure whether agents actually reduce manual work or simply shift it to a different interface.

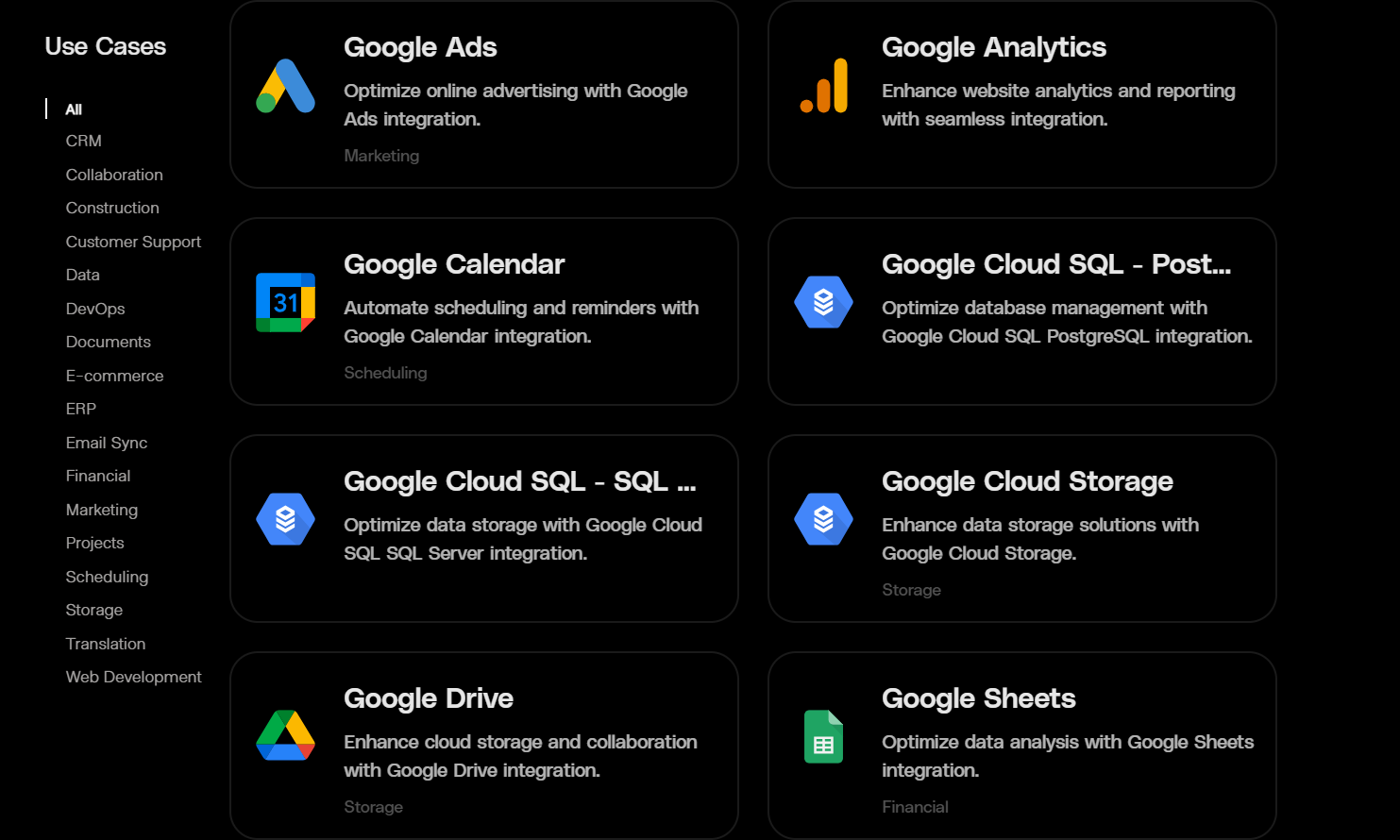

Modern platforms like Datagrid provide pre-built connectors to over 100 data sources, enabling faster deployment of report automation agents.

The right platform eliminates infrastructure complexity, so your team can focus on generating insights rather than troubleshooting APIs.

Step #3: Connect Data Sources and Build Templates

Choosing a platform solves the infrastructure problem. Now you need to wire your data sources together and design the final report.

Start with your highest-volume data source. For project managers, that's usually your PM tool, like Asana or Procore, where task status lives. For customer success teams, it's your CRM where account metrics accumulate.

Connect this primary source first, validate that the data flows correctly, then layer in secondary sources. Each connection needs three configuration steps. First, authenticate access using API keys or OAuth credentials. Second, map fields from source systems to your report schema: budget actuals from your accounting software need to align with budget targets from your PM tool, even when the two systems use different field names. Third, set the sync frequency. Hourly syncs work for operational reports, while daily refreshes suffice for executive summaries.
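To make the field-mapping and sync-frequency steps concrete, here is a minimal sketch of how two sources with different field names could be normalized into one report schema. The source names, field names, and sync intervals are hypothetical. On a platform, this is usually set through connector configuration rather than hand-written code.

```python
# Hypothetical source field names mapped to a shared report schema
FIELD_MAP = {
    "accounting": {"proj_code": "project_id", "actual_amount": "budget_actual"},
    "pm_tool": {"project": "project_id", "planned_budget": "budget_target"},
}

# Assumed sync intervals in minutes: hourly for operational, daily for executive
SYNC_MINUTES = {"operational": 60, "executive_summary": 24 * 60}


def normalize(source: str, record: dict) -> dict:
    """Rename a source record's fields to the report schema, dropping unmapped ones."""
    mapping = FIELD_MAP[source]
    return {mapping[key]: value for key, value in record.items() if key in mapping}


def merge_by_project(records: list) -> dict:
    """Combine normalized records that share a project_id into one row per project."""
    merged = {}
    for record in records:
        merged.setdefault(record["project_id"], {}).update(record)
    return merged


rows = merge_by_project([
    normalize("accounting", {"proj_code": "P-100", "actual_amount": 52000, "memo": "Q3"}),
    normalize("pm_tool", {"project": "P-100", "planned_budget": 50000}),
])
print(rows["P-100"])
```

Keeping the mapping in one table means a renamed field in a source system only needs a change in one place.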

With data flowing, build your report template. Define the structure: executive summary at the top, detailed metrics in the middle, exceptions and action items at the bottom. Then specify aggregation rules for each section.

Weekly status reports might summarize completed tasks by team member, calculate the percentage complete by project phase, and flag any tasks that are more than 2 days behind schedule.

Include conditional formatting that highlights what needs attention. Customer health scores below 70 display in red. Budget variances exceeding 10% trigger automatic escalation. Risk ratings above "medium" pull in the full risk description instead of just the summary.
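The three rules above could be expressed roughly as follows. This is a sketch under assumptions: the row field names and the ordering of risk levels are invented for the example, and variance is treated as an absolute percentage of the target.

```python
RISK_LEVELS = ["low", "medium", "high", "critical"]  # assumed ordering


def apply_rules(row: dict) -> dict:
    """Return display decisions for one report row based on the thresholds above."""
    # Assumption: variance is measured in either direction, relative to the target
    variance = abs(row["budget_actual"] - row["budget_target"]) / row["budget_target"]
    above_medium = RISK_LEVELS.index(row["risk_rating"]) > RISK_LEVELS.index("medium")
    return {
        "health_color": "red" if row["health_score"] < 70 else "default",
        "escalate_budget": variance > 0.10,
        "risk_text": row["risk_description"] if above_medium else row["risk_summary"],
    }


# Illustrative row only
decisions = apply_rules({
    "health_score": 64,
    "budget_actual": 56000,
    "budget_target": 50000,
    "risk_rating": "high",
    "risk_summary": "Supplier delay",
    "risk_description": "Supplier delay on steel delivery; two-week slip expected.",
})
print(decisions)
```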

Test the template with last week's data before going live. Pull the same metrics you compiled manually and compare outputs side by side. Discrepancies usually trace back to field-mapping issues or to calculation logic that doesn't account for edge cases.
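One way to run that side-by-side comparison is a small diff over the two sets of metrics. The metric names and the 1% tolerance below are assumptions chosen for illustration.

```python
import math


def diff_reports(manual: dict, automated: dict, rel_tol: float = 0.01) -> list:
    """List metrics that are missing on one side or differ beyond the tolerance."""
    issues = []
    for key in sorted(set(manual) | set(automated)):
        if key not in automated:
            issues.append((key, "missing in automated report"))
        elif key not in manual:
            issues.append((key, "missing in manual report"))
        elif not math.isclose(manual[key], automated[key], rel_tol=rel_tol):
            issues.append((key, f"manual={manual[key]} automated={automated[key]}"))
    return issues


# Illustrative numbers only
manual = {"tasks_completed": 42, "percent_complete": 63.0, "open_risks": 5}
automated = {"tasks_completed": 42, "percent_complete": 58.5}

issues = diff_reports(manual, automated)
for metric, problem in issues:
    print(f"{metric}: {problem}")
```

A missing metric usually points to a field-mapping gap, while a numeric mismatch more often points to calculation logic.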

Most platforms, like Datagrid, let you preview reports before scheduling. Use this to verify formatting renders correctly and data populates all sections as expected.

Step #4: Extract Insights from Unstructured Documents

Structured data from CRMs and project tools tells half the story. The other half sits in contracts, support tickets, email threads, and project documentation.

Think about what actually goes into your reports. Project status updates need risk summaries pulled from RFP documents, compliance updates from regulatory filings, and contractor feedback buried in email threads.

Customer health reports require sentiment analysis from support tickets and renewal terms from signed contracts. If your platform only connects to structured databases, you're still reading documents manually to fill these gaps.

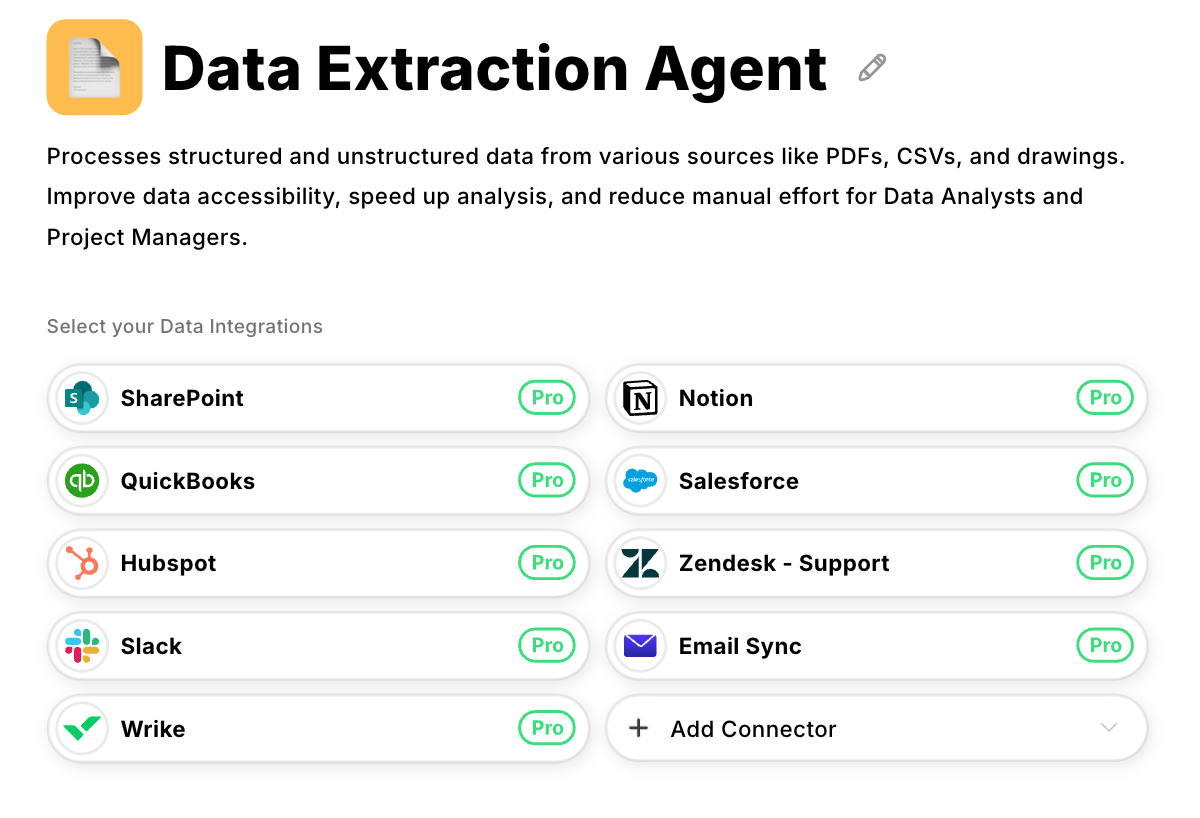

Document processing agents handle the unstructured side. One agent pulls task completion metrics from Asana while another scans last week's project documentation for risk indicators. A third analyzes support ticket sentiment.

All outputs flow into your report template simultaneously, eliminating the need for sequential manual review.

The agents adapt to the needs of your reports. Project managers get risk factors extracted from RFPs, scope changes flagged in change orders, and communication issues surfaced from contractor correspondence.

Customer success teams get sentiment scores from ticket histories, renewal terms pulled from contracts, and feature requests identified across touchpoints.

Set up processing rules that match your workflow. When NPS scores drop or churn indicators spike, agents automatically analyze recent customer communications for root causes. When project timelines slip, agents extract relevant updates from meeting notes and email threads to explain delays.

Datagrid's specialized agents excel at this type of cross-document analysis, processing entire document repositories to surface insights that would take hours to compile manually. Test this capability by reviewing last month's reports.

Find three places where you manually read documents to gather stakeholder information, then configure agents to extract that same information automatically and compare the results.

Step #5: Automate Generation and Distribution

With templates built and data connections tested, you're ready to eliminate manual compilation entirely. The automation occurs in two parts: generating reports and delivering them to stakeholders.

Start by defining generation triggers. Most reports run on fixed schedules based on when stakeholders need the information. Weekly status reports are generated every Friday at 4 p.m., monthly scorecards are issued on the first business day, and quarterly assessments are issued two days before board meetings.

Some reports work better with event-based triggers. Customer churn risk reports should be generated immediately when health scores drop below 70.

Budget variance reports trigger when actual spend exceeds planned by more than 10%. Project delay notifications fire the moment a critical path task falls two days behind.
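The schedule and event triggers described above can be sketched as simple checks. The snapshot field names and report names are hypothetical, and a real platform would evaluate these rules in its own scheduler rather than in a script like this.

```python
from datetime import datetime


def is_weekly_status_time(now: datetime) -> bool:
    """Fixed schedule: Fridays during the 4 p.m. hour (weekday() == 4 is Friday)."""
    return now.weekday() == 4 and now.hour == 16


def event_triggers(snapshot: dict) -> list:
    """Return the event-based reports that should fire for the current metrics."""
    fired = []
    if snapshot["health_score"] < 70:
        fired.append("churn_risk_report")
    overspend = (snapshot["actual_spend"] - snapshot["planned_spend"]) / snapshot["planned_spend"]
    if overspend > 0.10:
        fired.append("budget_variance_report")
    if snapshot["critical_path_days_behind"] >= 2:
        fired.append("project_delay_notification")
    return fired


# Illustrative snapshot only
fired = event_triggers({
    "health_score": 65,
    "actual_spend": 115000,
    "planned_spend": 100000,
    "critical_path_days_behind": 1,
})
print(fired)
```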

Once generation timing is set, configure distribution workflows. Project executives might receive a one-page PDF summary via email. Finance teams need the full dataset exported to Google Sheets. Operations managers want Slack notifications with dashboard links.

Configure multiple output formats from the same data source rather than building separate reports for each audience.
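As a small sketch of that idea, the functions below render one report payload three ways: a one-line summary, a CSV export, and a chat message. The report structure and the example link are assumptions. Actual delivery to email, Google Sheets, or Slack would go through the platform's own connectors.

```python
import csv
import io

# Illustrative report payload
report = {
    "title": "Weekly status",
    "rows": [
        {"project": "Alpha", "percent_complete": 80},
        {"project": "Beta", "percent_complete": 46},
    ],
}


def to_summary(report: dict) -> str:
    """One-line summary suitable for an executive email."""
    rows = report["rows"]
    average = sum(r["percent_complete"] for r in rows) / len(rows)
    return f"{report['title']}: {len(rows)} projects, {average:.0f}% complete on average"


def to_csv(report: dict) -> str:
    """Full dataset as CSV text, ready to import into a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["project", "percent_complete"])
    writer.writeheader()
    writer.writerows(report["rows"])
    return buffer.getvalue()


def to_chat_message(report: dict, link: str) -> str:
    """Short notification with a link to the full report."""
    return f"{to_summary(report)}\nFull report: {link}"


print(to_summary(report))
print(to_csv(report))
print(to_chat_message(report, "https://example.com/reports/weekly"))
```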

The final piece connects reports to your communication tools. Platforms like Datagrid can post report summaries directly to team Slack channels, attach full reports to scheduled emails, and update dashboard links in project management tools.

This means stakeholders receive information in the tools they already monitor.

Before going live, test the full workflow end-to-end. Generate a test report, verify it reaches the right people in the right format, and confirm links work as expected.

Step #6: Monitor Quality and Refine Output

No automated report delivers perfect accuracy from day one, so build validation into your workflow immediately.

Pull a sample of automated reports weekly and compare them against manual compilation. For most teams, reviewing 10-15% of generated reports catches systematic issues early.

Track discrepancies in a simple spreadsheet: field mismatches, calculation errors, missing data points, formatting breaks. This reveals where your configuration needs adjustment.
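A spot-check routine along these lines could pick the weekly sample and tally what reviewers find. The report IDs, category labels, and fixed seed are assumptions for the example.

```python
import math
import random
from collections import Counter


def pick_review_sample(report_ids: list, percent: int = 10, seed=None) -> list:
    """Randomly choose roughly `percent` of reports (at least one) for manual review."""
    count = max(1, math.ceil(len(report_ids) * percent / 100))
    return random.Random(seed).sample(report_ids, count)


def tally(discrepancies: list) -> Counter:
    """Count logged discrepancies by category to show where configuration needs work."""
    return Counter(item["category"] for item in discrepancies)


report_ids = [f"report-{n}" for n in range(1, 41)]  # 40 generated reports
sample = pick_review_sample(report_ids, percent=10, seed=7)

# Illustrative log entries only
log = [
    {"report": "report-3", "category": "field_mismatch"},
    {"report": "report-9", "category": "calculation_error"},
    {"report": "report-9", "category": "field_mismatch"},
]
print(len(sample), tally(log).most_common(1))
```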

Oversight intensity should match report importance. Low-stakes operational dashboards might need monthly spot checks. High-visibility executive summaries should be reviewed before every distribution until you've built confidence in the automation.

Customer-facing reports, such as account health scorecards, should always undergo human review, since errors can damage client relationships.

As errors surface, document the root cause. Most issues trace back to three sources: incorrect field mapping between systems, edge cases your aggregation logic didn't account for, or source data quality problems that existed before automation.

Fix field mapping in your platform configuration. Update calculation rules to handle edge cases. Flag source data issues with system owners.

Stakeholder feedback catches what internal validation misses. Set up a simple mechanism that allows report recipients to flag concerns directly. A "Report an issue" link at the bottom of each report opens a quick form that captures problems in real time.

Questions about specific numbers reveal a need for more precise documentation of the calculation logic. Requests for additional fields reveal where your template doesn't align with actual decision-making needs.

Refine continuously in small increments. Add one requested field per week. Adjust one calculation threshold based on feedback. Update one distribution timing based on when stakeholders actually read reports.

Step #7: Measure Impact and Scale

Sustained support for automated reporting comes from numbers. Frame your baseline first: average compilation time per report, distribution delays, and error rates in your manual process. Capture the same metrics after deployment so stakeholders see real improvement.

Track three core KPIs: time saved per report cycle, data accuracy rates, and stakeholder satisfaction scores. A simple dashboard monitoring these weekly keeps performance transparent.

Calculate time savings by multiplying hours eliminated per report by generation frequency. A weekly report that dropped from 3 hours to 15 minutes saves 2.75 hours per week, or 143 hours per year.
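The arithmetic in that example can be checked with a short helper. The function name is an assumption, and the figures are the ones from the paragraph above.

```python
def annual_hours_saved(before_hours: float, after_hours: float, runs_per_year: int) -> float:
    """Hours eliminated per report multiplied by how often the report runs."""
    return (before_hours - after_hours) * runs_per_year


# Weekly report: 3 hours before, 15 minutes (0.25 hours) after, 52 runs a year
weekly_saving = 3 - 0.25
yearly_saving = annual_hours_saved(3, 0.25, 52)
print(weekly_saving, yearly_saving)  # 2.75 143.0
```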

Turn these metrics into internal case studies. "Customer health reports now complete 48 hours faster" connects numbers to business problems. "Project managers recovered 8 hours weekly for strategic work" shows tangible team impact.

These stories surface new automation candidates across departments faster than formal requests.

With proven results, expand systematically. Identify the next highest-impact report from your original prioritization and apply the same workflow. High-volume, low-complexity reports scale fastest since they reuse existing data connections and template patterns.

Calculate ROI for budget conversations: (baseline labor cost – automation cost) ÷ automation cost.

When the ratio consistently exceeds 2:1, securing budget for additional automation becomes straightforward. Most teams hit this threshold within the first quarter after automating three or more report types.
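Here is the ROI formula as a small function, with a check against the 2:1 threshold. The dollar amounts are made-up inputs for illustration, not benchmarks.

```python
def roi(baseline_labor_cost: float, automation_cost: float) -> float:
    """(baseline labor cost - automation cost) / automation cost."""
    if automation_cost <= 0:
        raise ValueError("automation_cost must be positive")
    return (baseline_labor_cost - automation_cost) / automation_cost


# Illustrative figures only: $30,000 of manual labor replaced at a cost of $9,000
ratio = roi(30000, 9000)
exceeds_threshold = ratio > 2.0
print(f"ROI {ratio:.2f}:1, exceeds 2:1 threshold: {exceeds_threshold}")
```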

Automate Your Report Writing with Agentic AI

Three hours every week digging through Salesforce, Zendesk, and project management tools to compile one report. Data that's stale by the time stakeholders read it.

Document analysis that can't scale without adding headcount. Most teams accept this as the cost of reporting, but it doesn't have to be.

- Connect to 100+ data sources without custom integration: Access Salesforce, Asana, Zendesk, Google Sheets, and more via pre-built connectors. Work that usually takes your team weeks of API development can be done through configuration in hours.

- Build report templates that adapt automatically: Create conditional formatting, threshold triggers, and calculation rules once. Apply them across multiple report types without having to rebuild the logic for each variation.

- Process documents alongside structured data: Extract insights from contracts, support tickets, and project files while pulling metrics from your databases. Reports that required 3 hours of manual document review now generate in minutes.

- Automatically distribute to stakeholders: Configure scheduled delivery, event-based triggers, and multi-format outputs via visual workflows. Changes that required developer time now take configuration adjustments.

- Scale from one report to dozens without adding headcount: Teams automating their first weekly report can handle 20+ report types because template patterns and data connections are reusable.

Ready to eliminate manual compilation from your reporting workflow?