Data fragmentation blocks multi-agent orchestration. Learn why traditional integration fails and how unified data access eliminates custom connector overhead.

Your agents may excel individually, but scaling them exposes a bigger problem: manual connector development consumes most of your capacity. While you're provisioning data access for three agents that touch Salesforce, Slack, and SharePoint, five more workflow requests stack up.

Fragmented data creates a bottleneck; agents can't share context when each needs custom connections to every source.

Before you can move faster, you need to understand what’s really slowing you down.

This guide explains what orchestration is, why data fragmentation blocks coordination, best practices for scaling, and how unified platforms solve integration overhead.

What is AI Agent Orchestration?

AI agent orchestration coordinates multiple autonomous agents toward unified business outcomes.

Without orchestration, a customer support ticket gets processed by one agent, enriched by another, and routed by a third, but each agent starts from scratch because none can access the previous agent's work.

Instead of agents working in isolation, orchestration provides shared data access and centralized coordination. The payoff: disconnected automation becomes intelligent business processes.

Consider customer support where orchestrated agents work on complete information: ticket classification pulls customer history, response generation accesses previous interactions, and escalation routing considers account value.

Your support team handles nuanced escalations while agents manage routine coordination—all because they share context.

This pattern extends across operations. Supply chain teams watch inventory agents coordinate with logistics in real time, catching issues before they cascade. Finance departments automate reporting where extraction agents feed analysis agents that generate compliance documentation.

3 Types of Multi-Agent Orchestration: Sequential, Parallel, and Hierarchical

There are three main patterns for coordinating multiple agents: sequential, parallel, and hierarchical. The right pattern makes your workflow feel effortless. The wrong one turns every task into a puzzle.

Understanding these patterns helps you design orchestration that scales, but more importantly, it reveals why the same infrastructure challenge breaks all three approaches.

- Sequential execution flows agents through connected steps where each builds on previous work. Contract processing moves from extraction to review to archival. Claims processing follows similar logic: intake captures documentation, assessment evaluates coverage, and approval authorizes payments. Break any link, and the workflow stalls.

- Parallel processing unleashes multiple agents on different subtasks simultaneously. Market research agents gather competitive intelligence while product agents analyze internal performance, both feeding strategy reviews. Financial auditing deploys compliance agents reviewing different frameworks concurrently, then consolidates findings.

- Hierarchical coordination uses supervisor agents to manage specialists, reallocating work as conditions shift. Document processing supervisors route contracts to legal agents, technical specs to engineering agents, and financial terms to accounting agents. When requirements change mid-process, supervisors adjust assignments without human intervention.

Each pattern scales differently, but all share one critical dependency: reliable information sharing between agents. Break that, and coordination collapses.

Sequential patterns work when each step depends on the previous agent's output. Parallel patterns fit when independent subtasks can run simultaneously and consolidate later.

Hierarchical patterns handle dynamic workflows where task allocation needs to shift based on changing conditions.
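The three patterns differ mainly in control flow. The minimal Python sketch below uses `asyncio` to illustrate each one. The `Agent` signature, the toy agents, and the `supervisor` routing rule are hypothetical stand-ins, not any particular framework's API.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List

# Hypothetical agent signature: an async function from a payload dict to a result dict.
Agent = Callable[[dict], Awaitable[dict]]


async def run_sequential(agents: List[Agent], payload: dict) -> dict:
    """Sequential: each agent receives the previous agent's output."""
    for agent in agents:
        payload = await agent(payload)
    return payload


async def run_parallel(agents: List[Agent], payload: dict) -> dict:
    """Parallel: independent agents run concurrently, then results are consolidated."""
    results = await asyncio.gather(*(agent(payload) for agent in agents))
    merged: dict = {}
    for result in results:
        merged.update(result)  # later agents win on key collisions
    return merged


async def run_hierarchical(route: Callable[[dict], str],
                           specialists: Dict[str, Agent], payload: dict) -> dict:
    """Hierarchical: a supervisor chooses the specialist at run time."""
    return await specialists[route(payload)](payload)


# Toy agents for illustration only.
async def extract(doc: dict) -> dict:
    return {**doc, "clauses": ["termination"]}

async def review(doc: dict) -> dict:
    return {**doc, "approved": bool(doc["clauses"])}

async def market_research(_: dict) -> dict:
    return {"competitors": 3}

async def product_analysis(_: dict) -> dict:
    return {"churn_rate": 0.04}

async def legal(doc: dict) -> dict:
    return {"handled_by": "legal", "id": doc["id"]}

async def finance(doc: dict) -> dict:
    return {"handled_by": "finance", "id": doc["id"]}

def supervisor(doc: dict) -> str:
    return "legal" if doc["type"] == "contract" else "finance"


if __name__ == "__main__":
    print(asyncio.run(run_sequential([extract, review], {"id": 1})))
    print(asyncio.run(run_parallel([market_research, product_analysis], {})))
    print(asyncio.run(run_hierarchical(supervisor, {"legal": legal, "finance": finance},
                                       {"id": 2, "type": "contract"})))
```

Every pattern here passes a payload dictionary between agents. If that payload is missing context, all three fail the same way. That is the shared dependency on reliable information sharing described above.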

Orchestration vs Automation

Automation executes predefined tasks identically every time: scan this document, update that record, send scheduled notifications. That's valuable for repetitive operations that follow fixed rules.

Orchestration makes decisions about which tasks to execute, in what sequence, and how agents should adapt when inputs change. Instead of three separate scripts running on schedule, you deploy three agents that adjust actions based on what others discover.

The practical difference shows up when conditions shift. Suppose a research agent identifies new regulatory requirements during analysis. Orchestrated approval agents automatically modify their criteria in response, while automated systems keep following the old rules until someone manually updates their configuration.

This difference determines what you can accomplish. Automation works within single systems on scope-limited tasks. Orchestration manages workflows that span departments and platforms and adapts as conditions evolve, delivering cross-system visibility, adaptive decision-making, and end-to-end process ownership.
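A toy contrast makes the difference concrete. This sketch assumes a hypothetical `SharedContext` object that all orchestrated agents read and write. The orchestrated approval agent looks up its criteria on every call, while the fixed-rule script carries its criteria in code. The requirement name `sanctions_screening` is purely illustrative.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class SharedContext:
    """Hypothetical shared state that every orchestrated agent reads and writes."""
    required_documents: Set[str] = field(default_factory=lambda: {"invoice"})


def research_agent(ctx: SharedContext, new_requirement: str) -> None:
    # A research finding (for example, a new regulatory requirement) is published once.
    ctx.required_documents.add(new_requirement)


def orchestrated_approval(ctx: SharedContext, submitted: Set[str]) -> bool:
    # Criteria are read from shared state on every call, so they change as soon as research does.
    return ctx.required_documents <= submitted


STATIC_REQUIRED = frozenset({"invoice"})  # fixed when the script was written


def automated_approval(submitted: Set[str]) -> bool:
    # A fixed-rule script keeps applying its original criteria until someone edits it.
    return STATIC_REQUIRED <= submitted


if __name__ == "__main__":
    ctx = SharedContext()
    research_agent(ctx, "sanctions_screening")
    print(orchestrated_approval(ctx, {"invoice"}))  # False: the new requirement applies
    print(automated_approval({"invoice"}))          # True: still on the old rules
```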

But that sophistication demands better architecture. Master the orchestration basics, and you'll quickly hit the real blocker: fragmented information preventing agents from accessing the context they need for coordination. That's where most projects stall.

Why Multi-Agent Orchestration Fails

When you wire up a few agents in a lab, everything hums. Move the same workflow into production and the wheels fall off—tickets stall, reports contradict each other, and leadership wonders why "AI" suddenly looks like a spreadsheet with extra steps.

The root cause almost always traces back to the information those agents share, or rather, don't share.

Data fragmentation creates four predictable failure modes. Agents operate like smart employees trapped in different buildings with no phone lines:

- Incomplete context means an agent drafting a customer reply can't see open invoices, so you ship a friendly discount to someone who still owes money.

- Redundant work emerges when two research agents hit the same source because neither can see the other's progress, doubling API costs and latency.

- State conflicts arise as parallel agents update inventory at different times—one thinks an item is in stock while another has already reserved it.

- Brittle handoffs break sequential pipelines when Agent B can't parse Agent A's output format, forcing human intervention at the worst possible moment.
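Two of these failure modes have well-known mitigations, sketched here in Python.

The first part validates Agent A's output against an explicit handoff contract before Agent B consumes it. The second uses optimistic concurrency (a version-checked compare-and-set) so parallel agents can't both reserve the same stock.

The field names and the in-memory `VersionedStore` are hypothetical. In production, the version check and the write would need to be a single atomic operation in the database, which this single-process sketch does not provide.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

REQUIRED_FIELDS = {"contract_id", "parties", "total_value"}


@dataclass(frozen=True)
class ExtractionResult:
    """Hypothetical handoff contract between Agent A (extraction) and Agent B (review)."""
    contract_id: str
    parties: Tuple[str, ...]
    total_value: float


def parse_handoff(raw: dict) -> ExtractionResult:
    """Validate Agent A's output at the boundary, so a format change fails loudly and early."""
    missing = REQUIRED_FIELDS - set(raw)
    if missing:
        raise ValueError(f"handoff missing fields: {sorted(missing)}")
    return ExtractionResult(str(raw["contract_id"]), tuple(raw["parties"]),
                            float(raw["total_value"]))


class VersionedStore:
    """Compare-and-set store: a write succeeds only if the writer saw the latest version."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[int, int]] = {}  # key -> (version, value)

    def read(self, key: str) -> Tuple[int, int]:
        return self._data.get(key, (0, 0))  # version 0 means "never written"

    def compare_and_set(self, key: str, expected_version: int, value: int) -> bool:
        version, _ = self.read(key)
        if version != expected_version:
            return False  # another agent wrote first; re-read and decide again
        self._data[key] = (version + 1, value)
        return True


if __name__ == "__main__":
    store = VersionedStore()
    store.compare_and_set("sku-42", 0, 5)         # 5 units in stock
    seen_by_a, stock_a = store.read("sku-42")      # both agents read version 1
    seen_by_b, stock_b = store.read("sku-42")
    print(store.compare_and_set("sku-42", seen_by_a, stock_a - 1))  # True: A reserves one
    print(store.compare_and_set("sku-42", seen_by_b, stock_b - 1))  # False: B's view is stale
```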

These fractures slow decision-making and degrade the accuracy of agent outputs across your workflows. Healthcare shows this clearly: fragmented patient records force clinicians to repeat tests and inflate costs. AI diagnostic agents that can't see complete patient histories can't deliver the insights they're designed to provide.

Aggregation rules that stitch information together offer temporary relief, but each rule is a patch, not a foundation. The underlying fragmentation remains.

Why Traditional Fixes Fail

The fragmentation problems seem obvious once you spot them. Most teams reach for familiar integration fixes to bridge the gaps—but those approaches can't solve coordination at scale.

Point-to-point connectors feel quick for two agents and three sources. But the connection count is agents × sources, so it grows quadratically as both scale. Ten agents each touching ten sources means 100 separate links, every one a potential outage.
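The arithmetic is easy to check. This sketch assumes the worst case, where every agent needs every source. It compares that with a hub model (an assumption for comparison) in which each source and each agent connects once to a shared access layer.

```python
def point_to_point_links(agents: int, sources: int) -> int:
    # Worst case assumed here: every agent maintains its own connector to every source.
    return agents * sources


def shared_layer_links(agents: int, sources: int) -> int:
    # Hub model (an assumption for comparison): each source is connected once to a
    # shared access layer, and each agent connects once to that layer.
    return agents + sources


if __name__ == "__main__":
    for n in (2, 10, 30):
        print(f"{n} agents x {n} sources: {point_to_point_links(n, n)} point-to-point links "
              f"vs {shared_layer_links(n, n)} through a shared layer")
```

With two agents, both approaches cost about the same. At ten agents and ten sources, the point-to-point count is already five times larger.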

ETL pipelines collect all your data in one place. But by the time your agents get it, they're making decisions based on yesterday's news. When you need to react in real time, an overnight batch schedule becomes a blind spot.
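Batch latency can't be engineered away at the agent layer, but it can be made explicit. This minimal sketch uses a hypothetical `require_fresh` helper that refuses to act on data older than a per-decision tolerance, instead of silently using last night's snapshot. The timestamps and tolerances are illustrative.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


class StaleDataError(RuntimeError):
    """Raised when an agent is about to act on data older than the decision tolerates."""


def require_fresh(extracted_at: datetime, max_age: timedelta,
                  now: Optional[datetime] = None) -> timedelta:
    """Return the data's age, or raise if it exceeds max_age (timestamps must be timezone-aware)."""
    now = now or datetime.now(timezone.utc)
    age = now - extracted_at
    if age > max_age:
        raise StaleDataError(f"data is {age} old; this decision tolerates at most {max_age}")
    return age


if __name__ == "__main__":
    nightly_load = datetime(2024, 5, 1, 2, 0, tzinfo=timezone.utc)  # hypothetical 02:00 ETL run
    afternoon = datetime(2024, 5, 1, 15, 0, tzinfo=timezone.utc)
    print(require_fresh(nightly_load, timedelta(hours=24), afternoon))  # fine for a daily report
    try:
        require_fresh(nightly_load, timedelta(minutes=15), afternoon)  # too old for stock holds
    except StaleDataError as exc:
        print(exc)
```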

Middleware platforms promise universal adapters: plug in once, connect to everything. But you still end up writing custom mapping logic for each integration. Worse, you've added an extra hop between your systems, and that latency adds up fast when you're trying to coordinate actions in real time.

The scaling trap catches you regardless of approach: project time gets consumed building connectors instead of refining orchestration logic, and every new requirement resets the clock. Delays erode stakeholder trust faster than any model performance issue.

These methods aren't inherently wrong—they're built for automation, not orchestration. Automation tolerates stale information and loose coupling.

Multi-agent systems demand shared, current context—something point solutions can't deliver when you're coordinating dozens of agents across enterprise systems. What works for three agents breaks at thirty.

5 Best Practices for Building Multi-Agent Orchestration Systems

Coordinating a handful of agents in a proof-of-concept is one thing; running a production system that dozens of teams rely on is another. These practices keep real-world orchestration from collapsing under its own weight.

Map Data Sources Before Choosing Orchestration Patterns

Start by cataloging every system your agents will touch: CRM, ERP, ticketing, analytics, even the one spreadsheet finance still updates at midnight. This inventory work feels tedious upfront, but it prevents the integration tax that blindsides teams once workflows go live.

Knowing where customer history, billing records, and product logs actually live lets you pick sequential, parallel, or hierarchical patterns that won't starve agents of context later.

Without this map, you'll design elegant coordination logic only to discover three agents need overlapping access to systems you haven't connected yet.

Document the output format, refresh cadence, and ownership of each source so new agents can plug in without surprise rework. Sales teams processing 500 leads daily need different data flows than support agents handling 50 tickets: different volume, different latency requirements, different failure modes.

Map these requirements before writing orchestration logic. Discovering gaps after your first production deployment means retrofitting data access into the orchestration you've already built.
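The catalog itself can be a small data structure rather than a wiki page. This sketch uses hypothetical source and agent names. It records format, cadence, and owner for each source, then reports two things:

- which agents depend on sources nobody has cataloged yet
- which sources several agents share

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class DataSource:
    name: str
    output_format: str    # e.g. "REST/JSON", "CSV export"
    refresh_cadence: str  # e.g. "real time", "nightly"
    owner: str            # team accountable for the source


def find_gaps(catalog: Dict[str, DataSource], needs: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Per agent, the sources it needs that nobody has cataloged (or connected) yet."""
    connected = set(catalog)
    gaps: Dict[str, Set[str]] = {}
    for agent, wanted in needs.items():
        missing = wanted - connected
        if missing:
            gaps[agent] = missing
    return gaps


def shared_sources(needs: Dict[str, Set[str]]) -> Set[str]:
    """Sources requested by two or more agents: candidates for one shared connection."""
    counts = Counter(source for wanted in needs.values() for source in wanted)
    return {source for source, n in counts.items() if n >= 2}


if __name__ == "__main__":
    catalog = {
        "crm": DataSource("crm", "REST/JSON", "real time", "Sales Ops"),
        "billing": DataSource("billing", "REST/JSON", "hourly", "Finance"),
    }
    needs = {
        "lead_scoring": {"crm", "billing"},
        "support_reply": {"crm", "ticketing"},
        "month_end": {"billing", "finance_spreadsheet"},
    }
    print(find_gaps(catalog, needs))  # ticketing and the finance spreadsheet are unmapped
    print(shared_sources(needs))      # crm and billing are each needed by two agents
```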

Monitor Data Flows and Agent Performance Together

Most orchestration outages begin as silent issues: an API rate limit hit at 2 a.m., an expired token nobody noticed, a ten-second spike in query latency that compounds across sequential agents. By the time you see agents making bad decisions, the root cause may have occurred hours earlier in the data layer.

Treating pipelines and agent reasoning as a single telemetry surface catches problems before they cascade. Log when an agent calls a source, how much information it pulls, and how long the call takes. Wire alerts that fire if latency doubles or payloads shrink unexpectedly.

Here's what this looks like in practice: customer support agents suddenly can't access billing history. They'll generate confused responses. Technically, the agent is "working," but it's operating on incomplete information.

Traditional monitoring shows healthy agent uptime while missing the data access failure causing poor outputs. You troubleshoot the symptom (bad responses) instead of the cause (integration failure).

Build observability into both layers from the start, and you'll know within minutes whether poor agent decisions stem from the model or from the information feeding it.
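Here is a minimal sketch of a single telemetry surface. It assumes a hypothetical `DataFlowMonitor` wrapper around each data fetch. On every call it logs agent, source, latency, and payload size, and it raises an alert when latency doubles or the payload falls below half its baseline.

The 2× and 50% thresholds and the first-call baseline are simplifying assumptions. A rolling median would be more robust.

```python
import logging
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

log = logging.getLogger("orchestration.telemetry")


@dataclass
class Baseline:
    latency_s: Optional[float] = None
    payload_bytes: Optional[int] = None


@dataclass
class DataFlowMonitor:
    """Records every agent-to-source call and flags latency or payload anomalies."""
    latency_factor: float = 2.0  # alert if latency exceeds 2x baseline
    payload_floor: float = 0.5   # alert if payload drops below 50% of baseline (assumed)
    baselines: Dict[str, Baseline] = field(default_factory=dict)
    alerts: List[str] = field(default_factory=list)

    def record(self, agent: str, source: str, latency_s: float, payload_bytes: int) -> None:
        log.info("agent=%s source=%s latency_s=%.3f bytes=%d",
                 agent, source, latency_s, payload_bytes)
        base = self.baselines.setdefault(source, Baseline())
        if base.latency_s is None:
            # Simplification: the first call becomes the baseline.
            base.latency_s, base.payload_bytes = latency_s, payload_bytes
            return
        if latency_s > self.latency_factor * base.latency_s:
            self.alerts.append(f"{agent}->{source}: latency {latency_s:.3f}s "
                               f"vs baseline {base.latency_s:.3f}s")
        if payload_bytes < self.payload_floor * base.payload_bytes:
            self.alerts.append(f"{agent}->{source}: payload {payload_bytes}B "
                               f"vs baseline {base.payload_bytes}B")

    def call(self, agent: str, source: str, fetch: Callable[[], bytes]) -> bytes:
        """Wrap a data fetch so timing and size are captured on every call."""
        start = time.perf_counter()
        payload = fetch()
        self.record(agent, source, time.perf_counter() - start, len(payload))
        return payload


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    monitor = DataFlowMonitor()
    monitor.record("support", "billing", 0.12, 48_000)
    monitor.record("support", "billing", 0.31, 2_000)  # slower and far smaller: two alerts
    print(monitor.alerts)
```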

Separate Orchestration Logic From Data Retrieval Code

Mixing these concerns means every new data source becomes a full refactor. A support agent that embeds Zendesk queries directly in its escalation logic can't switch ticketing systems without rewriting its core decision-making code.

Build thin agents that request information through a shared access layer instead. This architectural separation lets you rewrite orchestration rules (say, switching from sequential to hierarchical execution) without touching credentials or query syntax.

When your CRM integration changes because the vendor updates their API, you'll update one access layer instead of hunting through agent code scattered across different teams. That's a two-day fix instead of a two-week emergency touching your entire agent ecosystem.
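In Python, one way to express this separation is a `typing.Protocol` that agent logic depends on, with one adapter per backend. Everything named here (`TicketSource`, the two adapters, the escalation thresholds) is hypothetical. A real Zendesk adapter would implement the same `open_tickets` method using that vendor's API.

```python
import csv
import io
from typing import Dict, List, Protocol


class TicketSource(Protocol):
    """The only ticketing interface agent logic depends on (hypothetical)."""
    def open_tickets(self, customer_id: str) -> List[Dict[str, str]]: ...


class InMemoryTickets:
    """One adapter. A Zendesk-backed adapter would expose the same method."""
    def __init__(self, rows: List[Dict[str, str]]) -> None:
        self._rows = rows

    def open_tickets(self, customer_id: str) -> List[Dict[str, str]]:
        return [r for r in self._rows if r["customer"] == customer_id and r["status"] == "open"]


class CsvExportTickets:
    """A second adapter reading a CSV export; swapping it in needs no agent changes."""
    def __init__(self, csv_text: str) -> None:
        self._rows = list(csv.DictReader(io.StringIO(csv_text)))

    def open_tickets(self, customer_id: str) -> List[Dict[str, str]]:
        return [r for r in self._rows if r["customer"] == customer_id and r["status"] == "open"]


def should_escalate(tickets: TicketSource, customer_id: str, account_value: float) -> bool:
    """Escalation logic: no credentials, endpoints, or query syntax in here."""
    open_count = len(tickets.open_tickets(customer_id))
    # Thresholds are illustrative, not recommendations.
    return open_count >= 3 or (open_count >= 1 and account_value >= 100_000)


if __name__ == "__main__":
    memory = InMemoryTickets([{"customer": "c-1", "status": "open"}])
    export = CsvExportTickets("customer,status\nc-1,open\nc-1,closed\n")
    for source in (memory, export):
        print(type(source).__name__, should_escalate(source, "c-1", account_value=250_000))
```

When the ticketing backend changes, only an adapter is added or edited. `should_escalate` stays untouched.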

Build Architecture that Works for Both 3 Agents and 20 Agents

Three agents coordinating across five data sources might work fine with basic error handling and manual monitoring. Scale that to twenty agents across fifteen sources, and the system can collapse without proper resource management and throttling.

Run cost projections early. Estimate bandwidth, storage, and licensing expenses at 5× your current volume before the first deployment review.

An agent registry in which each agent declares its resource needs upfront (expected query frequency, typical payload size, and peak load patterns) lets the orchestrator enforce quotas. This prevents a single rogue agent from exhausting GPUs or API budgets while starving others.
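A registry entry and quota check might look like the following sketch. Several pieces are assumptions for illustration:

- `AgentSpec` and `QuotaEnforcer` are hypothetical names.
- Usage is tracked over a one-minute sliding window.
- Each agent is capped at its declared peak.

`peak_bandwidth_kb_per_minute(growth=5)` gives the 5× projection mentioned above.

```python
import math
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List


@dataclass(frozen=True)
class AgentSpec:
    """Registry entry an agent must declare before it runs (fields are illustrative)."""
    name: str
    expected_calls_per_minute: int
    typical_payload_kb: int
    peak_multiplier: float  # declared peak load relative to typical load

    @property
    def quota_per_minute(self) -> int:
        # Assumed policy: allow the declared peak and nothing beyond it.
        return math.ceil(self.expected_calls_per_minute * self.peak_multiplier)

    def peak_bandwidth_kb_per_minute(self, growth: float = 1.0) -> float:
        # Peak traffic volume, scaled by a growth factor (e.g. 5 for 5x current volume).
        return (self.expected_calls_per_minute * self.peak_multiplier
                * self.typical_payload_kb * growth)


class QuotaEnforcer:
    """Sliding 60-second window per agent; unregistered agents are refused."""

    def __init__(self, specs: List[AgentSpec]) -> None:
        self._specs: Dict[str, AgentSpec] = {s.name: s for s in specs}
        self._calls: Dict[str, Deque[float]] = {s.name: deque() for s in specs}

    def admit(self, agent: str, now: float) -> bool:
        spec = self._specs.get(agent)
        if spec is None:
            return False
        window = self._calls[agent]
        while window and now - window[0] >= 60.0:
            window.popleft()  # drop calls older than one minute
        if len(window) >= spec.quota_per_minute:
            return False  # this agent has used its share; other agents are unaffected
        window.append(now)
        return True


if __name__ == "__main__":
    contracts = AgentSpec("contract_extractor", expected_calls_per_minute=10,
                          typical_payload_kb=200, peak_multiplier=1.5)
    print(contracts.quota_per_minute)                        # 15
    print(contracts.peak_bandwidth_kb_per_minute(growth=5))  # 15000.0
    enforcer = QuotaEnforcer([contracts])
    admitted = sum(enforcer.admit("contract_extractor", now=float(t)) for t in range(20))
    print(admitted)  # 15: the last five calls in the same minute are refused
```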

Push those projections further. Document processing that handles 100 contracts daily might collapse at 1,000 without proper queueing or backpressure built in from day one.
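Backpressure can be as simple as a bounded queue between intake and workers. In this `asyncio` sketch, `process_contract` is a hypothetical placeholder for real work. Intake pauses whenever 50 contracts are already waiting, so a spike in volume lengthens the wait instead of exhausting memory. The queue size and worker count are illustrative.

```python
import asyncio
from typing import Iterable, List, Tuple


async def process_contract(contract_id: int) -> int:
    await asyncio.sleep(0)  # stand-in for real extraction/review work (hypothetical)
    return contract_id


async def run_pipeline(contract_ids: Iterable[int], max_queued: int = 50,
                       workers: int = 4) -> Tuple[int, int]:
    """Bounded queue between intake and workers; returns (processed, peak queue depth)."""
    queue: "asyncio.Queue[int]" = asyncio.Queue(maxsize=max_queued)
    processed: List[int] = []

    async def worker() -> None:
        while True:
            cid = await queue.get()
            try:
                processed.append(await process_contract(cid))
            finally:
                queue.task_done()

    tasks = [asyncio.create_task(worker()) for _ in range(workers)]
    peak = 0
    for cid in contract_ids:
        await queue.put(cid)  # suspends intake while the queue is full: backpressure
        peak = max(peak, queue.qsize())
    await queue.join()        # wait until every queued contract is processed
    for task in tasks:
        task.cancel()         # workers loop forever, so stop them explicitly
    await asyncio.gather(*tasks, return_exceptions=True)
    return len(processed), peak


if __name__ == "__main__":
    done, peak = asyncio.run(run_pipeline(range(1_000), max_queued=50))
    print(done, peak)  # all 1,000 processed; queue depth never exceeds 50
```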

Plan for scale early, and deploying new agents becomes a configuration update. Skip this step, and you'll spend months re-architecting orchestration under production pressure while business operations depend on uptime.

Implement Security and Governance Controls From Day One

Each agent operates like an insider with perfect recall and access to systems most employees can't touch. Least-privilege access, immutable audit logs, and role-based permissions must ship in version 1, not get bolted on after a security review flags gaps.
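Least privilege and role-based permissions come down to a deny-by-default lookup before every data access. The roles, agent identities, and `(source, action)` pairs below are hypothetical. In a real deployment they would come from your identity provider or policy engine rather than module-level dictionaries.

```python
from typing import Dict, FrozenSet, Tuple

Permission = Tuple[str, str]  # (data source, action)

# Hypothetical roles: each grants only what that agent's job requires.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "support_agent": frozenset({("crm", "read"), ("tickets", "read"), ("tickets", "write")}),
    "billing_agent": frozenset({("billing", "read")}),
}

# Hypothetical agent identities mapped to roles.
AGENT_ROLES: Dict[str, str] = {
    "support-bot-1": "support_agent",
    "invoice-bot": "billing_agent",
}


def is_allowed(agent: str, source: str, action: str) -> bool:
    """Deny by default: unknown agents, roles, or (source, action) pairs are refused."""
    role = AGENT_ROLES.get(agent)
    if role is None:
        return False
    return (source, action) in ROLE_PERMISSIONS.get(role, frozenset())


def authorize(agent: str, source: str, action: str) -> None:
    """Call before every data access; raise instead of silently returning nothing."""
    if not is_allowed(agent, source, action):
        raise PermissionError(f"{agent} may not {action} {source}")
```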

Auditability matters because compliance teams need to reconstruct what an agent did and why it did it months after the fact. Log every prompt, decision, and external call; store them in a tamper-evident ledger.
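A tamper-evident ledger can be approximated with a hash chain. Each entry stores the SHA-256 hash of the previous entry, so editing, removing, or reordering an earlier record breaks verification.

This sketch illustrates the idea under stated assumptions and is not a compliance-grade store. It keeps entries in memory. A real deployment would also need append-only storage and an externally anchored copy of the latest hash to detect truncation.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

GENESIS = "0" * 64


def _digest(body: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLedger:
    """Append-only log where each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, agent: str, kind: str, detail: Dict[str, Any]) -> Dict[str, Any]:
        body = {
            "agent": agent,
            "kind": kind,      # e.g. "prompt", "decision", "external_call"
            "detail": detail,  # must be JSON-serializable
            "ts": time.time(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or removed (non-final) entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```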

When agents process customer data, financial records, or regulated information, "we'll add logging later" doesn't fly with enterprise security reviews.

Getting governance right early changes how scaling feels. Moving from three agents to thirty becomes configuration work instead of risk multiplication. Security reviews that drag for weeks with retrofitted systems wrap up in days when controls are designed into the architecture from the start.

Datagrid Helps You Coordinate Agents without Custom Integration

The fragmentation problems we've covered (incomplete context, redundant work, and brittle handoffs) all trace back to the same architectural gap.

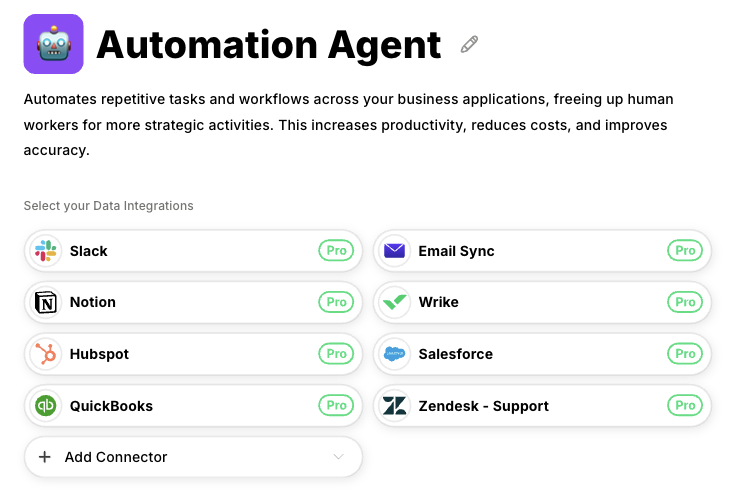

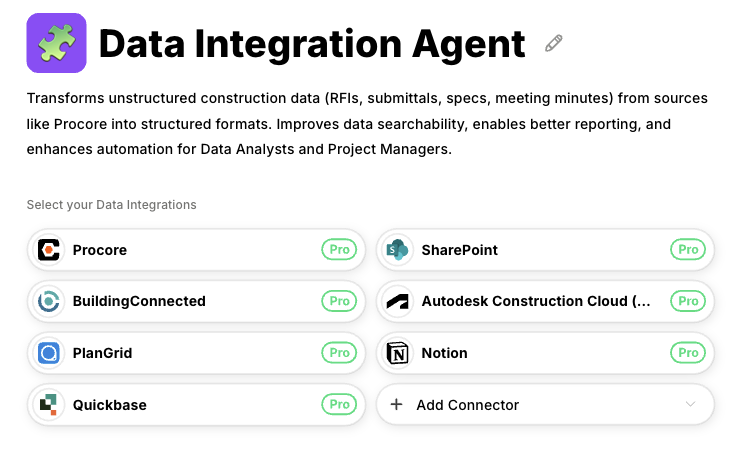

Agents can't coordinate effectively when each needs custom connectors to every data source they touch. Datagrid solves this by creating AI agents with built-in access to 100+ data sources through a unified platform.

Instead of building separate integrations for each agent, Datagrid maintains connections at the platform level. Your agents get access without custom connector development, eliminating the integration overhead that consumes most orchestration projects.

Datagrid's grid of specialized agents works together across sequential, parallel, and hierarchical patterns:

- Document-heavy orchestration: Process RFPs and compliance docs across storage systems in days instead of months.

- Cross-departmental coordination: Agents work across Sales, Customer Success, and Operations without departmental silos.

- Enterprise security: Platform-level authentication and audit trails remove the need to secure every integration separately.

As a result, Datagrid eliminates custom connector development. Your agents can coordinate across enterprise systems immediately.