Compare DeepSeek vs ChatGPT for AI agent architects: cost structure, self-hosting, code generation, enterprise support, and performance trade-offs to make the best choice.

You're building an AI agent system to process hundreds of RFPs monthly, generate integration code for multiple data sources, and orchestrate complex multi-step workflows.

The model you choose determines your system's accuracy, speed, cost efficiency—and whether you control your infrastructure or depend on a vendor.

ChatGPT and DeepSeek represent fundamentally different approaches to developing AI agents. ChatGPT offers enterprise-grade reliability, multimodal capabilities, and turnkey deployment through OpenAI's managed service.

DeepSeek provides open-weight models you can self-host, fine-tune, and run at a fraction of the per-token cost (up to roughly 98% less than OpenAI's premium o1 pricing), with trade-offs in features and support.

This comparison examines both across the criteria that matter most: cost structure, code generation quality, reasoning capabilities, deployment flexibility, enterprise support, document processing, tool selection, context management, and long-term viability.

ChatGPT vs DeepSeek: Feature Comparison

This section compares what each platform offers: model lineups, context windows, deployment options, API access, and pricing.

The performance comparison later in this article then evaluates how those differences play out for AI agent architects weighing open-weight alternatives against proprietary services.

Overview

ChatGPT and DeepSeek represent fundamentally different approaches to deploying large language models in production agent systems—one prioritizing managed infrastructure and enterprise features, the other emphasizing open access and deployment control.

ChatGPT, produced by OpenAI, has become the dominant proprietary model for AI architects building agent systems that process documents, generate code, and orchestrate workflows.

Its managed API infrastructure handles scaling, updates, and optimizations automatically, allowing teams to focus on agent logic rather than model operations. Understanding its evolution and current capabilities helps you determine if premium features and hands-off infrastructure justify the cost for your agent deployments.

DeepSeek, developed by the Hangzhou-based Chinese AI company of the same name, offers an open-weight alternative that reduces vendor lock-in and licensing constraints.

For AI architects evaluating alternatives to proprietary models, DeepSeek enables unrestricted commercial use, complete customization through fine-tuning, and on-premise hosting that keeps sensitive data within your infrastructure. You control model versioning, deployment environments, and can modify architectures to match specific workflow requirements.

The choice between these models often comes down to three factors: whether you need managed infrastructure or deployment flexibility, how your data governance policies handle external API calls, and whether licensing costs or operational overhead creates the bigger constraint for your agent systems.

Model Evolution

OpenAI's ChatGPT has evolved through multiple generations to meet the requirements of enterprise agents. Each release targeted specific limitations in reasoning, cost efficiency, and deployment flexibility.

- GPT-3.5 (2022) delivered strong conversational abilities at accessible price points. However, it struggled with complex reasoning—a critical gap that limited production agent reliability. Systems frequently failed when handling multi-step workflows.

- GPT-4 (2023) marked a substantial leap in reasoning capabilities and reduced the hallucinations that plagued earlier versions. Later variants targeted different deployment needs: GPT-4 Turbo (late 2023) extended context to 128K tokens for document-heavy workflows, GPT-4o (2024) added native multimodality with faster response times, and GPT-4.1 (2025) posted the highest action completion rate among the models in one agent benchmark, at 0.620, with 0.800 tool selection quality.

- GPT-5 (August 2025) introduced a unified system. In ChatGPT, a router switches between fast processing for simple tasks and deep reasoning for complex workflows; API users choose the reasoning effort per request. Three API variants address different needs: gpt-5 for maximum capability, gpt-5-mini for balanced performance and cost, and gpt-5-nano for high-volume simple tasks where speed outweighs sophistication.

DeepSeek has differentiated itself by prioritizing cost efficiency and open access, showing that architectural innovation can deliver competitive agent performance at much lower infrastructure cost.

- DeepSeek-V3 demonstrates radical cost efficiency. It is a Mixture-of-Experts model with 671 billion total parameters. Its final training run reportedly cost about $5.6 million in GPU time, excluding earlier research and experiments, versus GPT-4's estimated $100+ million. It cuts production compute costs by an estimated 65%, and in the same agent testing it scored 0.400 on action completion and 0.800 on tool selection quality.

- DeepSeek-V3.2-Exp (September 2025) introduced DeepSeek Sparse Attention to make long-context processing cheaper. Its performance stays broadly on par with its predecessor, V3.1-Terminus, and it came with an API price cut of more than 50%. Its 128K-token context window handles roughly 100 pages of documentation, which covers many document-intensive agent workflows at a fraction of proprietary model costs.

- Several variants address specific agent workloads:
  - deepseek-chat (the non-thinking mode) for conversational and general tasks
  - deepseek-reasoner (the R1-style thinking mode) for analytical, research, and logic-intensive work
  - the open-weight DeepSeek-Coder family for development workflows

  Matching the variant to the task lets architects optimize both performance and cost.

The DeepSeek Chat app became #1 on Apple's App Store in early 2025.

Key Features

GPT-5 and DeepSeek took opposite approaches to solving core agent system challenges—OpenAI prioritizing comprehensive capabilities and automatic optimization, DeepSeek focusing on efficiency and deployment control.

Context and Reasoning Capabilities

GPT-5:

- Context window of 400,000 tokens via the API (up to 272,000 input plus 128,000 output tokens), roughly 300 pages in total

- Built-in chain-of-thought breaks complex problems into logical steps

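- Memory across conversations in the ChatGPT app. The API itself is stateless, though OpenAI's Responses API can carry conversation state between requests.

- Automatic routing between fast and reasoning modes in ChatGPT. Through the API, you set the reasoning effort per request.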

DeepSeek:

- Context window reaches 128,000 tokens (roughly 100 pages per request)

- Mixture-of-Experts architecture activates only necessary parameters per query

- Reduces compute costs by an estimated 65% versus dense LLMs

- 671 billion total parameters with 37 billion active per token

Model Variants and Flexibility

GPT-5:

- Three variants: regular (maximum capability), mini (balanced), nano (high-volume simple tasks)

- Multimodal input: text and images in the same request, with audio handled by OpenAI's separate audio and realtime models

- Strong performance across coding, analysis, writing, and decision-making

DeepSeek:

- Specialized variants: deepseek-chat, deepseek-reasoner (R1-style reasoning), and the open-weight DeepSeek-Coder family

- Text-only processing without multimodal features

- Pick the variant that matches each task's requirements

Deployment Models

GPT-5:

- Managed API infrastructure only

- OpenAI handles scaling, updates, and optimization

- No self-hosting of GPT-5 (OpenAI's separate open-weight gpt-oss models can be self-hosted, but they are not GPT-5)

DeepSeek:

- Recent open-weight releases such as DeepSeek-R1 and V3.2-Exp use the MIT License, permitting commercial and on-premise deployment

- Download weights from Hugging Face; code and deployment guides are on GitHub

- Customize and run on your infrastructure without vendor lock-in

- Free web chat at chat.deepseek.com for testing

- No per-user licensing fees or subscription requirements

Integration and Performance

Both models:

- Support streaming responses and concurrent asynchronous requests (OpenAI also offers a discounted Batch API for offline jobs)

- Use the OpenAI chat-completions request format, so DeepSeek works as a near drop-in replacement

DeepSeek specific:

- Context caching bills repeated prompt prefixes at the cache-hit rate, cutting input-token costs by up to 90%

- Minimal code changes are required when switching from ChatGPT
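Both APIs accept the same chat-completions request shape. Switching providers is therefore mostly a matter of changing the base URL, API key, and model name. The sketch below builds, but does not send, a request with Python's standard library to make that concrete.

The provider table is an assumption:
- The base URLs follow OpenAI's and DeepSeek's public API docs.
- The self-hosted entry assumes a vLLM server exposing its OpenAI-compatible endpoint on the default port 8000.
- Model IDs may change over time.

With the official openai SDK, the equivalent change is passing base_url when you construct the client.

```python
import json
import urllib.request

# Hypothetical provider table. Base URLs follow each provider's public API docs;
# the self-hosted entry assumes vLLM's OpenAI-compatible server on port 8000.
PROVIDERS = {
    "openai": {"base_url": "https://api.openai.com/v1", "model": "gpt-5"},
    "deepseek": {"base_url": "https://api.deepseek.com", "model": "deepseek-chat"},
    "self_hosted": {
        "base_url": "http://localhost:8000/v1",
        "model": "deepseek-ai/DeepSeek-V3.2-Exp",
    },
}


def build_chat_request(provider: str, api_key: str, messages: list) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat completion request."""
    cfg = PROVIDERS[provider]
    payload = {"model": cfg["model"], "messages": messages}
    return urllib.request.Request(
        url=cfg["base_url"] + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # same auth scheme for both APIs
        },
        method="POST",
    )


messages = [{"role": "user", "content": "Summarize the scope section of this RFP."}]
for name in PROVIDERS:
    req = build_chat_request(name, "YOUR_API_KEY", messages)
    print(name, req.full_url)
# Sending is a single call, e.g. urllib.request.urlopen(req), omitted here.
```

Only the provider entry changes between calls. The message list, auth header, and payload shape stay identical, which is what makes the switch cheap.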

The MIT License makes DeepSeek a strong option when external API calls violate security policies or when per-token costs become prohibitive at scale.

API Access & Pricing

OpenAI and DeepSeek offer dramatically different pricing models—OpenAI charges premium rates for managed infrastructure, while DeepSeek provides budget-friendly API access and free self-hosting options.

Setup and Access

GPT-5:

- Create an OpenAI account, add billing information, and start making API calls

- The platform includes documentation and official SDKs for major programming languages

- API access is billed separately from ChatGPT subscription plans, which cover the ChatGPT app rather than the developer API

DeepSeek:

- Create an account on the DeepSeek Open Platform (platform.deepseek.com); documentation lives at api-docs.deepseek.com

- Generate an API key and send it as a Bearer token in the Authorization header

- OpenAI-compatible API works as drop-in replacement for existing ChatGPT integrations

Pricing Structure (as of October 2025)

GPT-5:

- GPT-5: ~$1.25 per million input tokens (~$0.125 for cached input), ~$10 per million output tokens

- Premium reasoning models (o1): ~$15 per million input tokens, ~$60 per million output tokens

- GPT-5 mini: ~$0.25 per million input tokens, ~$2 per million output tokens

- GPT-5 nano: ~$0.05 per million input tokens, ~$0.40 per million output tokens

- Enterprise pricing available with volume discounts and dedicated support

DeepSeek (after September 2025 50% reduction):

- DeepSeek-Chat (V3.2-Exp): ~$0.28 per million input tokens (cache miss) or ~$0.028 (cache hit), ~$0.42 per million output tokens

- DeepSeek-Reasoner: same rates as DeepSeek-Chat, since both now run on V3.2-Exp (the earlier ~$0.56 input, ~$0.07 cache-hit, and ~$1.68 output rates predate the price cut)

- Self-hosted deployment: free using open weights—pay only your own compute costs

- No per-user subscription fees

Cost Optimization

GPT-5:

- Detailed visibility into token consumption across models and use cases

- Set up cost alerts and track usage patterns

- Optimize implementation based on actual usage data

DeepSeek:

- Context caching cuts input-token costs by up to 90% for repeated prompt prefixes

- Significantly more economical at scale, especially with cache hits

- Detailed dashboards for tracking usage when using the hosted API
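Cache-hit pricing only helps if your prompts actually share a prefix. This sketch does two things, assuming DeepSeek's prefix-based context caching and the V3.2-Exp list prices quoted above:
- It computes the blended input price for a given cache-hit rate.
- It shows one way to order messages so stable content (system prompt, shared reference material) comes first.

build_messages is a hypothetical helper, not part of any SDK.

```python
# DeepSeek-Chat (V3.2-Exp) input prices in USD per million tokens, as quoted above.
CACHE_MISS_PRICE = 0.28
CACHE_HIT_PRICE = 0.028


def effective_input_price(cache_hit_rate: float) -> float:
    """Blended input price per million tokens for a given share of cache hits."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    return cache_hit_rate * CACHE_HIT_PRICE + (1 - cache_hit_rate) * CACHE_MISS_PRICE


def build_messages(system_prompt: str, reference_docs: str, task: str) -> list:
    """Hypothetical helper: stable content first, so repeated calls share a prefix."""
    return [
        {"role": "system", "content": system_prompt},  # identical on every call
        {"role": "user", "content": reference_docs + "\n\nTask: " + task},  # varying part last
    ]


for rate in (0.0, 0.5, 0.9):
    print(f"{rate:.0%} cache hits -> ${effective_input_price(rate):.4f} per million input tokens")
```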

Deployment Options

GPT-5:

- Managed API only—OpenAI handles all infrastructure

- No self-hosting available

DeepSeek:

- Hosted API through DeepSeek Open Platform

- Self-hosted deployment using vLLM, SGLang, or other inference frameworks

- Complete control over performance optimization, data privacy, and operational costs

- Download open weights and deploy on your own infrastructure

DeepSeek's pricing becomes significantly more economical at scale. At list prices, its hosted API is roughly 78% cheaper than GPT-5 per input token and about 96% cheaper per output token. Self-hosting replaces per-token fees with your own infrastructure costs.

ChatGPT vs DeepSeek: Performance Comparison for AI Agent Development

How these models perform in production determines whether your agents meet accuracy requirements, stay within budget, and justify the complexity of self-hosting or the premium costs of a managed service.

This comparison examines both models across the criteria that matter for AI agent architects choosing between open-source flexibility and enterprise-grade infrastructure.

Criteria #1: Cost Structure and Scaling Economics

Your agents' operational costs determine whether your system stays viable as usage grows—the model you choose affects expenses, budget, and infrastructure control.

With ChatGPT, you get predictable managed pricing. GPT-5 costs $1.25 per million input tokens and $10 per million output tokens, while premium reasoning models such as o1 cost $15 and $60. You're paying a premium for managed infrastructure and guaranteed uptime.

With DeepSeek, you get dramatically lower costs. The hosted API costs $0.28 per million input tokens (cache miss) or $0.028 (cache hit), and $0.42 per million output tokens. That is about 78% cheaper than GPT-5 for uncached input, 96% cheaper for output, and more than 98% cheaper than o1 on both. Self-hosting has no per-token fees, though you pay for hardware and operations.

The practical difference shows up at scale. Running 10 million input tokens and 2 million output tokens daily? At o1 prices that costs $270 per day ($8,100 monthly). On GPT-5 it costs $32.50 per day ($975 monthly).

DeepSeek's hosted API costs $3.64 per day (about $109 monthly), roughly 89% less than GPT-5 and 98% less than o1. Self-hosting replaces API fees with infrastructure costs.
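The daily figures above are simple arithmetic on list prices. They are reproduced here so you can plug in your own volumes. The price table is copied from this article (o1 and GPT-5 from OpenAI, uncached input for deepseek-chat) and will drift, so treat it as an assumption to check against current pricing pages.

```python
# USD per million tokens: (input, output). Copied from this article's price list;
# verify against current provider pricing before relying on it.
PRICES = {
    "o1": (15.00, 60.00),
    "gpt-5": (1.25, 10.00),
    "deepseek-chat": (0.28, 0.42),  # cache-miss input rate
}


def daily_cost(model: str, input_millions: float, output_millions: float) -> float:
    """Cost of one day's traffic, given token volumes in millions."""
    in_price, out_price = PRICES[model]
    return input_millions * in_price + output_millions * out_price


for model in PRICES:
    day = daily_cost(model, input_millions=10, output_millions=2)
    print(f"{model:14s} ${day:8.2f}/day  ${day * 30:9.2f}/month")
```

Running it reproduces the $270, $32.50, and $3.64 daily figures. Cache hits, output-heavy workloads, or reasoning tokens (billed as output) would shift the totals, so model your own traffic mix.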

ChatGPT's premium pricing buys you managed infrastructure, SLAs, and enterprise support. DeepSeek's massive savings come with trade-offs: you either manage your own infrastructure or rely on community support.

The choice comes down to operational priorities.

- ChatGPT when managed convenience and guaranteed support justify its premium pricing.

- DeepSeek when large cost savings matter more than vendor SLAs.

Criteria #2: Code Generation & Technical Implementation

Your agents need to generate integration code, build API wrappers, and automate technical workflows. The model you choose determines how much time you spend debugging versus shipping features.

With ChatGPT, you get broad language coverage and fast iteration. The model generates code quickly and integrates with established tools like GitHub Copilot and Replit. Building API wrappers or prototyping integrations? ChatGPT moves fast. You go from prompt to working code efficiently.

With DeepSeek, you get specialized coding support through the DeepSeek-Coder models. The model handles multi-file projects and provides debugging assistance with strong performance on code generation benchmarks.

It maintains context across related files and understands dependencies reasonably well for an open-source alternative.

The practical difference shows up in both quality and economics.

Both models handle simple API wrappers without issues. For complex multi-file integrations, ChatGPT tends to produce cleaner code with fewer unhandled edge cases. DeepSeek delivers decent quality at a fraction of the cost.

If you can debug minor issues during development, DeepSeek's economics make sense. If you need polished code with minimal debugging, ChatGPT's premium performance justifies the price.

The choice comes down to quality-versus-cost trade-offs:

- ChatGPT for maximum code quality and fast iteration, where budget allows

- DeepSeek when acceptable code quality at a fraction of the cost outweighs minor quality gaps

Criteria #3: Tool Selection and API Orchestration

Your agents need to call the right APIs and functions to complete tasks—the model you choose determines how often they mess up.

With ChatGPT, you get broad ecosystem access. The API supports function calling alongside built-in tools such as web search, file search, and code interpreter. ChatGPT itself adds connectors and custom GPTs.

It can issue multiple tool calls in parallel without breaking, and the surrounding ecosystem gives you many ready-made integrations.

With DeepSeek, you get function calling without a plugin ecosystem. The model calls APIs and executes functions through the same OpenAI-style tool interface, but you're building most integrations yourself. DeepSeek handles straightforward tool selection for common use cases.

The practical difference shows up in integration complexity. Standard REST API calls and common functions work fine with both. Complex tool chains requiring reasoning about API relationships and error handling work better with ChatGPT's ecosystem and stronger reasoning.
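Both APIs describe tools with the same JSON schema and return calls in a tool_calls list. The dispatch loop you write for one therefore mostly carries over to the other. This sketch assumes the Chat Completions message format. get_rfp_deadline and the registry are hypothetical, and the model response is mocked rather than fetched. Errors go back to the model as tool output instead of raising, which lets the agent recover from a bad call.

```python
import json

# OpenAI-style tool definition; DeepSeek's API accepts the same "tools" shape.
# get_rfp_deadline is a hypothetical function used only for illustration.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_rfp_deadline",
        "description": "Look up the submission deadline for an RFP by its ID.",
        "parameters": {
            "type": "object",
            "properties": {"rfp_id": {"type": "string"}},
            "required": ["rfp_id"],
        },
    },
}]


def get_rfp_deadline(rfp_id: str) -> dict:
    # Hypothetical stub; a real agent would query a CRM or document store.
    return {"rfp_id": rfp_id, "deadline": "2025-12-01"}


REGISTRY = {"get_rfp_deadline": get_rfp_deadline}


def run_tool_calls(assistant_message: dict) -> list:
    """Execute each requested tool call and build 'tool' messages for the model."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        fn = REGISTRY.get(name)
        try:
            args = json.loads(call["function"]["arguments"])
            output = fn(**args) if fn else {"error": f"unknown tool {name}"}
        except (json.JSONDecodeError, TypeError) as exc:
            output = {"error": str(exc)}  # report bad arguments instead of crashing
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results


# A mocked assistant message shaped like a Chat Completions response.
mock = {"role": "assistant", "tool_calls": [{
    "id": "call_1", "type": "function",
    "function": {"name": "get_rfp_deadline", "arguments": '{"rfp_id": "RFP-42"}'},
}]}
print(run_tool_calls(mock))
```

In a live loop you would send TOOLS with the request, append the returned tool messages to the conversation, and call the model again until it answers without further tool calls.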

The choice comes down to orchestration complexity:

- ChatGPT for complex tool chains and extensive third-party integrations

- DeepSeek when basic API calling suffices and cost savings justify building custom integrations

Criteria #4: Reasoning and Complex Workflow Planning

Your agents need to handle multi-step logic, decision trees, and workflow orchestration—the model you choose determines whether workflows adapt automatically or require manual configuration.

With ChatGPT, you get adaptive reasoning depth. Build an agent that processes simple customer inquiries one minute and analyzes complex contract terms the next? In ChatGPT, GPT-5's router switches modes automatically. Through the API, you call one model and raise or lower its reasoning_effort setting per request.

Simple queries get fast responses. Complex problems get step-by-step reasoning from the same model, so your agent handles both workflows through a single API endpoint.

With DeepSeek, you get multiple model variants optimized for different reasoning depths. DeepSeek-Chat handles conversational tasks quickly.

DeepSeek-Reasoner, the R1-style thinking mode, tackles analytical, scientific, and logic-heavy problems. Each variant performs well at its specific task. The difference? You're routing queries to the right variant yourself.

Complex RFPs (200 pages, ambiguous specifications, cross-referenced documents)? GPT-5 adjusts its reasoning depth with little routing logic on your side. DeepSeek needs you to recognize complexity upfront and route to DeepSeek-Reasoner.
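Manual routing can start as a simple heuristic. The sketch below is a hypothetical rule of thumb, not a DeepSeek feature. It sends requests with reasoning-style keywords or large attached documents to deepseek-reasoner and everything else to deepseek-chat. The keyword list and the 20,000-token threshold are placeholders to tune against your own traffic. A small classifier model is a common next step.

```python
import re

# Hypothetical keyword heuristic; tune the words and threshold on real traffic.
REASONING_HINTS = re.compile(
    r"\b(compare|reconcile|cross-reference|derive|prove|trade-?offs?|ambiguous)\b",
    re.IGNORECASE,
)
LONG_INPUT_TOKENS = 20_000  # placeholder threshold for "large document payload"


def choose_deepseek_model(prompt: str, attached_tokens: int = 0) -> str:
    """Route simple requests to deepseek-chat and complex ones to deepseek-reasoner."""
    long_input = attached_tokens > LONG_INPUT_TOKENS
    needs_reasoning = bool(REASONING_HINTS.search(prompt))
    return "deepseek-reasoner" if (long_input or needs_reasoning) else "deepseek-chat"


print(choose_deepseek_model("What is the RFP submission email?"))
print(choose_deepseek_model("Reconcile the pricing terms across these addenda.", 45_000))
```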

The choice comes down to development complexity versus cost:

- ChatGPT works best when automatic adaptation across varied workflows justifies premium pricing.

- DeepSeek works best when workflows are predictable enough that building your own routing logic is a fair price for large cost savings.

Criteria #5: Self-Hosting Requirements vs Managed API

Your agents need reliable infrastructure to run on—the model you choose determines whether you manage that infrastructure yourself or pay a vendor to handle it.

With ChatGPT, you get fully managed infrastructure through OpenAI's cloud service: no servers to provision, no GPU clusters to maintain, no inference optimization to configure.

You make API calls, OpenAI handles uptime, scaling, and performance optimization. The infrastructure is invisible.

This works well when you want to focus on agent logic rather than model deployment. The trade-off is vendor dependency and no control over where your data goes or how models process it.

With DeepSeek, you get complete infrastructure flexibility through open-weight models. You can use the hosted API like ChatGPT, or download the weights and deploy them on your own hardware.

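Self-hosting means managing GPU infrastructure, setting up inference frameworks (vLLM, SGLang), configuring load balancing, and monitoring performance. Serving the full 671B-parameter model typically takes a node of eight high-memory GPUs such as H200s, or multiple H100 or A100 nodes. Smaller distilled models need far less.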

This requires infrastructure expertise your team may not have. The advantage is complete control—data never leaves your network, you customize model behavior, and you eliminate per-token costs after initial infrastructure investment.
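A quick weights-only estimate shows why the full model needs multi-GPU nodes. This sketch makes three assumptions:
- 1 byte per parameter for FP8 weights and 2 bytes for BF16.
- 20% of each GPU's memory is reserved for KV cache and runtime overhead.
- Memory is counted in decimal gigabytes.

Real deployments need more headroom for long contexts and concurrency. Read the results as a floor, not a sizing plan.

```python
import math


def min_gpus_for_weights(params_billion: float, bytes_per_param: float,
                         gpu_memory_gb: float, headroom: float = 0.8) -> int:
    """Lower bound on GPU count needed just to hold the model weights.

    headroom is the share of each GPU's memory assumed usable for weights;
    the remainder is left for KV cache, activations, and framework overhead.
    """
    weights_gb = params_billion * bytes_per_param  # 1e9 params * bytes -> GB
    return math.ceil(weights_gb / (gpu_memory_gb * headroom))


# DeepSeek-V3-class MoE model: all 671B parameters must be resident, even
# though only about 37B are active per token.
print(min_gpus_for_weights(671, 1, 80))   # FP8 weights on 80 GB H100s
print(min_gpus_for_weights(671, 1, 141))  # FP8 weights on 141 GB H200s
print(min_gpus_for_weights(671, 2, 80))   # BF16 weights on 80 GB A100s
```

Under these assumptions it gives 11 H100s or 6 H200s at FP8, and 21 A100s at BF16. That is why a single eight-GPU H100 node is not enough for the full model without quantization.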

The practical difference shows up in operational complexity and control:

- Getting agents running today without hiring infrastructure engineers: ChatGPT works immediately—create an account, get an API key, start building agents. No GPU procurement, no inference setup, no performance tuning.

- Keeping sensitive data on your infrastructure for compliance: DeepSeek's open weights let you deploy on your own servers. Client data never touches external APIs: your network, your hardware, your control.

- Scaling to millions of daily requests without ballooning API costs: Self-hosted DeepSeek eliminates per-token fees. After an initial GPU investment ($50K-$200K or more for production infrastructure), you pay for electricity, maintenance, and staff time. By comparison, 10 billion input tokens a month costs $150K at o1's $15 per million, or $12,500 at GPT-5's $1.25 per million.

- Avoiding GPU cluster management and infrastructure headaches: ChatGPT handles everything—capacity planning, scaling, uptime, security patches. Your team builds agents, not infrastructure.
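Whether self-hosting pays off depends on what you would otherwise spend on API calls. This sketch computes break-even months under illustrative assumptions, none of which are quotes; substitute your own:
- $200K of hardware.
- A hypothetical $8K a month for power, hosting, and maintenance.
- 10 billion input tokens a month, with output tokens ignored.

```python
from typing import Optional


def monthly_api_cost(input_tokens_millions: float, price_per_million: float) -> float:
    """Monthly API spend for input tokens only (output ignored for simplicity)."""
    return input_tokens_millions * price_per_million


def breakeven_months(hardware_cost: float, monthly_opex: float,
                     api_spend: float) -> Optional[float]:
    """Months until avoided API fees repay the hardware; None if they never do."""
    monthly_savings = api_spend - monthly_opex
    if monthly_savings <= 0:
        return None
    return hardware_cost / monthly_savings


# Illustrative assumptions only; replace with your own quotes and volumes.
HARDWARE = 200_000
OPEX = 8_000
TOKENS_M = 10_000  # 10 billion tokens = 10,000 million

for label, price in [("o1", 15.00), ("gpt-5", 1.25), ("deepseek-chat API", 0.28)]:
    spend = monthly_api_cost(TOKENS_M, price)
    months = breakeven_months(HARDWARE, OPEX, spend)
    result = "never" if months is None else f"{months:.1f} months"
    print(f"{label:18s} API ${spend:>10,.0f}/month -> break-even: {result}")
```

Under these assumptions, self-hosting recovers its cost in about 1.4 months against o1 pricing and about 44 months against GPT-5. It never breaks even against DeepSeek's own hosted API, which costs less per month than the assumed operating expenses.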

The choice comes down to infrastructure priorities: ChatGPT when managed service simplicity and immediate deployment matter more than infrastructure control.

DeepSeek works when data sovereignty, customization, or eliminating recurring API costs justify building and maintaining your own deployment infrastructure.

Criteria #6: Enterprise Support and Production Reliability

Your agents need consistent uptime and support when issues arise—the model you choose determines whether you get guaranteed SLAs or rely on community resources.

With ChatGPT, you get enterprise-grade support. Enterprise plans include dedicated account management, priority support channels, guaranteed response times, and formal SLAs.

When production agents fail, you have a vendor accountable for resolution. OpenAI maintains infrastructure, handles security patches, and provides uptime guarantees.

With DeepSeek, you get community support for the hosted API and complete self-reliance for self-hosted deployments.

No formal SLAs, no dedicated support team, no guaranteed response times. The community provides help through GitHub and forums, but nobody is contractually obligated to fix your problems.

The practical difference shows up when production breaks. ChatGPT downtime means escalating to OpenAI support with SLA-backed commitments. DeepSeek hosted API downtime means waiting for community-level support. Self-hosted DeepSeek issues mean your team troubleshoots alone.
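Without a vendor SLA, resilience is your job. A common pattern is to retry transient failures with exponential backoff, then fall back to a second endpoint. For example, the hosted DeepSeek API can sit behind a self-hosted deployment, or the reverse.

The sketch below assumes each provider is wrapped as a function that takes a prompt and returns text; the provider functions are hypothetical stand-ins. Catching every exception is a simplification. In production you would narrow it to timeouts and 429 or 5xx errors.

```python
import random
import time
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised with the list of collected errors when every provider fails."""


def call_with_failover(providers: Sequence[Callable[[str], str]], prompt: str,
                       retries: int = 2, base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Try providers in order, retrying each with exponential backoff and jitter."""
    errors = []
    for provider in providers:
        for attempt in range(retries + 1):
            try:
                return provider(prompt)
            except Exception as exc:  # simplification: narrow to transient errors
                errors.append(exc)
                if attempt < retries:
                    sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise AllProvidersFailed(errors)


def flaky_primary(prompt: str) -> str:  # hypothetical stand-in for a failing endpoint
    raise TimeoutError("upstream timeout")


def backup(prompt: str) -> str:  # hypothetical stand-in for a second provider
    return f"answer to: {prompt}"


print(call_with_failover([flaky_primary, backup], "ping", sleep=lambda s: None))
```

The sleep parameter is injectable so tests can skip real waiting. It also lets you swap in an async-friendly delay later.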

The choice comes down to risk tolerance.

- ChatGPT when production reliability, guaranteed support, and formal SLAs justify premium pricing

- DeepSeek when cost savings matter more than vendor support or when you have internal expertise to handle reliability yourself.

Criteria #7: Document Processing and Multi-Document Analysis

Your agents need to process documents—RFPs, technical specifications, compliance documentation—and the model you choose determines how much they can handle at once.

With ChatGPT, you get a 400,000-token context window with GPT-5, of which up to 272,000 tokens can be input. That's roughly 200 pages of documents per request. Your agents parse lengthy knowledge bases, extract insights from multiple PDFs, and generate comprehensive summaries.

If your workflows depend on processing multiple lengthy documents simultaneously or cross-referencing across hundreds of pages, ChatGPT's extended context handles more of that work without chunking.

With DeepSeek, you get 128,000 tokens of context with V3.2 models. That's roughly 100 pages per request. Your agents handle standard RFPs, technical documentation, and compliance files within that range. Processing works well for single-document tasks and moderate multi-document workflows.

The model extracts information accurately from documents that fit within the context window. For documents exceeding 100 pages or complex cross-referencing across multiple lengthy files, you'll need chunking logic and context management strategies.

The practical difference shows up when documents get long. One 80-page RFP? Both handle it without issues.

Five 200-page technical specifications that reference each other? That is about 1,000 pages, more than either context window holds, so both need retrieval or chunking. GPT-5's larger window means fewer, larger segments. DeepSeek needs more chunking logic, segment management, and context assembly.

If multi-document analysis beyond 100 pages is routine, ChatGPT's extended context reduces, though does not eliminate, the chunking infrastructure you need to build.
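Chunking logic can start simple. This sketch greedily packs paragraphs into chunks under a token budget. It repeats the last paragraph of each chunk at the start of the next, so cross-references are less likely to be split.

It assumes roughly four characters per token, which is only a heuristic for English text. Use the model's tokenizer for real budgets, and leave room under the 128K window for instructions and output. A single paragraph longer than the budget still becomes its own oversized chunk.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    return max(1, len(text) // 4)


def chunk_paragraphs(text: str, max_tokens: int, overlap_paragraphs: int = 1) -> list:
    """Greedily pack paragraphs into chunks of about max_tokens or fewer (estimated).

    The last overlap_paragraphs of each chunk are repeated at the start of the
    next one. Oversized paragraphs (or overlap plus a large paragraph) can
    still exceed the budget.
    """
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    chunks, current = [], []
    for para in paragraphs:
        candidate = current + [para]
        if current and estimate_tokens("\n\n".join(candidate)) > max_tokens:
            chunks.append("\n\n".join(current))
            # Start the next chunk with the overlap (if any) plus this paragraph.
            current = (current[-overlap_paragraphs:] if overlap_paragraphs else []) + [para]
        else:
            current = candidate
    if current:
        chunks.append("\n\n".join(current))
    return chunks


# Hypothetical 40-section spec; the budget is small so the example splits.
# For a 128K-token window you might use something like max_tokens=100_000.
spec = "\n\n".join(f"Section {i}: " + "requirement text " * 50 for i in range(40))
parts = chunk_paragraphs(spec, max_tokens=2_000)
print(len(parts), "chunks")
```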

The choice comes down to document length and complexity:

- ChatGPT when extended context for multi-document analysis justifies premium pricing

- DeepSeek when most documents fit within about 100 pages and cost savings justify occasional chunking work

Criteria #8: Context Management and Long Conversations

Your agents need to maintain state across extended workflows and multi-turn interactions—the model you choose determines how well they remember what happened earlier.

With ChatGPT, you get up to 400,000 tokens of context and memory across conversations in the ChatGPT app. Through OpenAI's Responses API, you also get server-side conversation state that chains requests with previous_response_id instead of resending the full history. Multi-day projects need less manual bookkeeping, though each request is still bound by the context window.

With DeepSeek, you get 128,000 tokens of context without persistent memory. The API is stateless, so each new session starts fresh and you resend the context yourself.

For extended workflows across multiple sessions, you'll need to implement your own state management. Within a single session, DeepSeek maintains adequate context for workflows under 100 pages.

The practical difference shows up when workflows span multiple sessions.

Both models handle standard agent sessions that start and finish in one go. But multi-day projects work differently.

ChatGPT's app memory and the Responses API's stored state let you pick up where you left off with less rebuilding. DeepSeek keeps nothing between sessions, so you save and reload context yourself every time.

Single-session workflows under 100 pages work fine with DeepSeek. Multi-day workflows needing persistent state are easier with OpenAI's built-in state handling, which reduces manual context management.
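Building state management for a stateless API usually means persisting the message history and replaying a trimmed window of it on the next session. This sketch stores history in a JSON file and keeps system messages plus the most recent turns that fit a token budget.

The following are assumptions for illustration:
- the file-based store
- the 100,000-token default budget
- the four-characters-per-token estimate

Production agents often summarize older turns instead of dropping them.

```python
import json
import tempfile
from pathlib import Path


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    return max(1, len(text) // 4)


class ConversationStore:
    """Persist an agent's message history to a JSON file between sessions."""

    def __init__(self, path: str, max_context_tokens: int = 100_000):
        self.path = Path(path)
        self.max_context_tokens = max_context_tokens

    def load(self) -> list:
        if self.path.exists():
            return json.loads(self.path.read_text(encoding="utf-8"))
        return []

    def save(self, messages: list) -> None:
        self.path.write_text(json.dumps(messages, indent=2), encoding="utf-8")

    def window(self, messages: list) -> list:
        """System messages plus the most recent turns that fit the token budget."""
        system = [m for m in messages if m["role"] == "system"]
        rest = [m for m in messages if m["role"] != "system"]
        budget = self.max_context_tokens - sum(estimate_tokens(m["content"]) for m in system)
        kept = []
        for message in reversed(rest):  # walk from newest to oldest
            cost = estimate_tokens(message["content"])
            if cost > budget:
                break
            kept.append(message)
            budget -= cost
        return system + kept[::-1]  # restore chronological order


# Session 1 records some turns; session 2 reloads and sends only the window.
store = ConversationStore(str(Path(tempfile.mkdtemp()) / "rfp-agent.json"))
history = store.load()  # empty list on the first session
history += [
    {"role": "system", "content": "You review RFPs for compliance gaps."},
    {"role": "user", "content": "Start with section 3, security requirements."},
]
store.save(history)
resumed = store.window(store.load())  # pass this as the messages list next session
print(len(resumed))
```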

The choice comes down to workflow continuity.

- ChatGPT when built-in conversation state and extended context across sessions justify premium pricing

- DeepSeek, when single-session workflows fit within 128K tokens and cost savings justify building state management

Questions to Ask Before You Decide

Before choosing between DeepSeek and ChatGPT for your AI agent systems, ask these questions:

- "Can we accept potential performance gaps for large cost savings?"

DeepSeek costs $0.28 per million input tokens, compared to $1.25 for GPT-5 and $15 for o1. The savings are substantial, but capabilities differ.

- "Do we have infrastructure expertise to self-host models?"

Self-hosting requires GPU management, inference optimization, and monitoring. ChatGPT handles this for you.

- "How critical are multimodal capabilities for our agents?"

OpenAI's models accept text and images, with separate models for audio. DeepSeek's API handles text only.

- "Do data sovereignty and on-premise requirements matter?"

DeepSeek's open weights let you keep data on your infrastructure. ChatGPT processes everything in OpenAI's cloud.

- "What happens when production agents fail?"

ChatGPT provides enterprise SLAs and support. DeepSeek relies on community help or your own team.

- "Can our workflows handle 128K token limits?"

DeepSeek offers 128K tokens (≈100 pages). GPT-5 provides 400K tokens (≈300 pages, including output).

- "Do we need vendor-managed infrastructure or prefer building our own?"

ChatGPT is a turnkey managed service. DeepSeek requires infrastructure work to realize its full benefits.

- "Is eliminating recurring API costs worth infrastructure investment?"

Self-hosted DeepSeek removes per-token fees after infrastructure is built.

- "How important is model customization and fine-tuning?"

DeepSeek's MIT License allows complete modification. OpenAI offers fine-tuning only for selected models through its API and does not release GPT-5 weights.

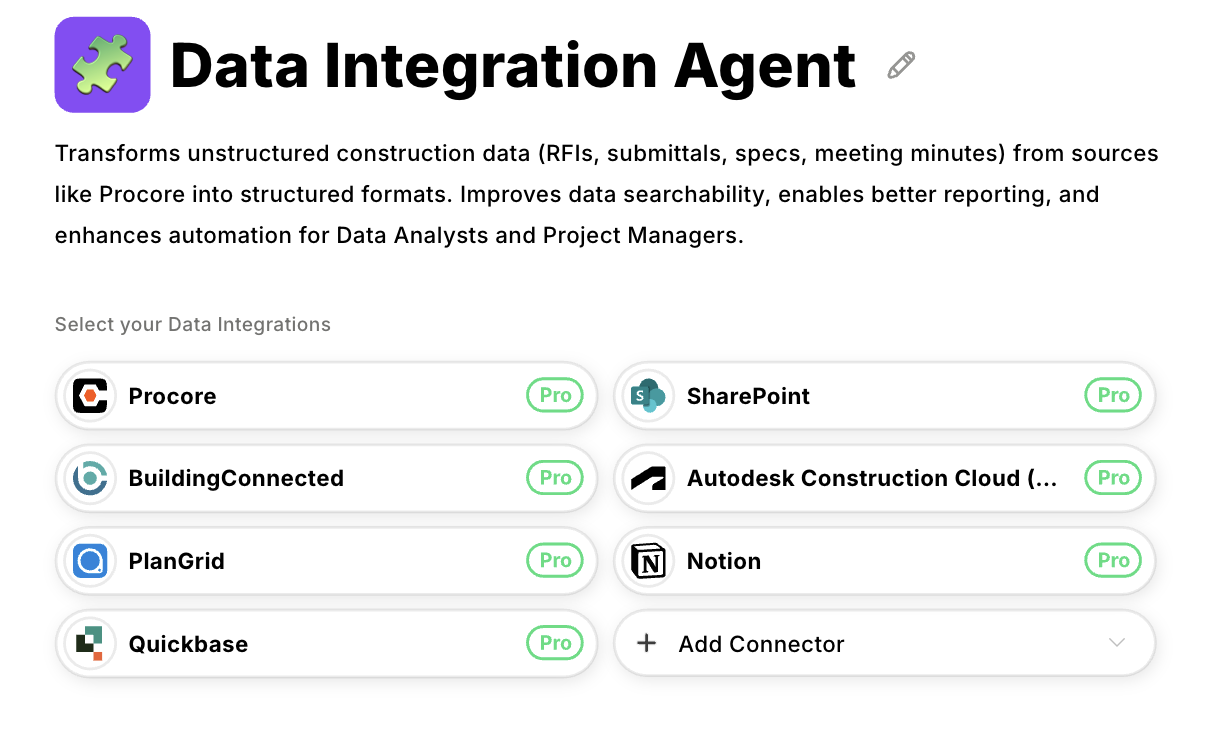

Datagrid Eliminates Infrastructure Work for ChatGPT and DeepSeek Agents

You've chosen your model. Now comes infrastructure work: connecting agents to Salesforce, HubSpot, Google Drive, and dozens of other platforms via custom connectors, and maintaining those integrations as APIs change.

Your tenth agent needs fifteen integrations. Maintenance becomes resource-intensive whether you're paying $1.25 or $0.28 per million input tokens.

Datagrid provides pre-built AI agents with unified data access across 100+ enterprise platforms. Instead of building custom connectors, you configure connections through a single interface.

When APIs change, Datagrid maintains the integrations while your team focuses on agent logic—whether you're running DeepSeek on your infrastructure or using ChatGPT's managed service.

- Unified data access: Connect agents to Salesforce, HubSpot, Google Drive, Slack, and 100+ platforms without building custom integrations. Authentication, rate limiting, and data transformation happen automatically in the background.

- Pre-built agent capabilities: Deploy specialized agents for document processing, RFP analysis, and workflow automation immediately. Datagrid's agents handle infrastructure while you customize intelligence for your specific use cases.

- Managed orchestration: Build complex multi-step workflows without managing state, error handling, and retry logic yourself. The platform handles orchestration while you define business logic.

- Scalable architecture: Add new agents and data sources without exponentially increasing maintenance burden. Datagrid manages integration health across your entire agent ecosystem.

Your model choice—ChatGPT or DeepSeek—determines agent capability and cost structure. Datagrid determines whether you're building infrastructure or building intelligence.