Compare ChatGPT vs Claude for AI agent architects: document processing, code generation, tool selection, reasoning, and costs to make the best choice.

You're building an AI agent system to process hundreds of RFPs monthly, generate integration code for multiple data sources, and orchestrate complex multi-step workflows. The model you choose determines your system's accuracy, speed, and cost efficiency.

ChatGPT (built on OpenAI's GPT models) and Claude are the two dominant model families AI architects use for building production agent systems.

This comparison examines both across the criteria that matter most: task completion rates, tool selection precision, document processing capabilities, code generation quality, API reliability, cost structure, and integration requirements.

This guide provides the technical details and practical insights you need to make an informed decision.

ChatGPT vs Claude: Feature Comparison

Beyond API performance, ChatGPT and Claude differ in their app feature sets. ChatGPT includes deep research, persistent memory, image generation, and voice interaction. Claude lacks native image generation and has added research, memory, and voice features more recently.

Overview

ChatGPT, produced by OpenAI, has become the go-to large language model for AI architects building agent systems. Understanding its evolution and current capabilities helps you determine if it fits your production requirements.

Claude, produced by Anthropic, has rapidly become a top choice for AI architects who need strong reasoning capabilities and extended context handling. Understanding how the model evolved helps you evaluate if it matches your production needs.

Model Evolution

Each ChatGPT release addressed specific limitations of previous versions while expanding the model's capabilities.

- GPT-3.5 (2022) launched with strong conversational abilities at a low price point. It worked well for simple tasks but fell short on complex reasoning. If you tried building production agents with it, you likely ran into these limitations quickly.

- GPT-4 (2023-2025) fixed many of GPT-3.5's problems. It launched in March 2023 and is widely reported to have on the order of a trillion parameters, although OpenAI has not disclosed the figure. The model handled complex reasoning more reliably, followed instructions more accurately, and hallucinated less. OpenAI released several variants: GPT-4 Turbo (November 2023) expanded the context window to 128,000 tokens, GPT-4o (May 2024) improved response times, and GPT-4.1 (April 2025) demonstrated strong real-world performance, completing 62% of agent tasks successfully in testing.

- GPT-4.5 (Orion, February 2025) came next with broader knowledge and better context handling. OpenAI described it as its last non-chain-of-thought model, which made it a bridge to the reasoning-centric design of GPT-5.

- GPT-5 (August 2025) launched with a unified architecture. In ChatGPT, a router sends each query to one of two modes. GPT-5-main handles straightforward tasks quickly, and GPT-5-thinking takes over for complex problems that need step-by-step logic. You don't choose between them because the system decides based on your query. In the API, you pick the model directly and can tune its reasoning effort. The model comes in three variants: regular delivers maximum capability, mini balances performance with cost, and nano handles high volumes of simple tasks at the lowest price.

Each Claude release built on what came before while adding capabilities that made the model more practical for real-world applications.

- Claude 1 (March 2023) launched with solid conversational abilities but lacked the depth of reasoning needed for complex agent workflows. If you tried building production systems with it, you probably found its limitations quickly.

- Claude 2 (2023) arrived with major improvements in document analysis and reasoning. Its larger context window let you process more information per request, making Claude 2 more viable for production use. The window was 100,000 tokens at launch and grew to 200,000 with Claude 2.1 in November 2023.

- Claude 3 (March 2024) marked a substantial leap forward. Anthropic released three variants: Haiku handled fast, affordable tasks; Sonnet balanced performance and cost; and Opus delivered maximum capability. The release added support for text and images in a single request, and all three models shipped with a 200,000-token context window. Claude 3 Opus demonstrated reasoning skills that put it among the best models available.

- Claude 4 (May 2025) arrived with Opus 4 and Sonnet 4. Both models maintained the 200,000-token context window while improving their handling of complex workflows and sustained reasoning tasks. Claude Sonnet 4 excelled at selecting appropriate APIs and functions during task execution, achieving 92% accuracy, the best performance among the tested models.

- Claude 4.5 (September-October 2025) launched with notable upgrades. Sonnet 4.5 introduced major improvements in coding and workflow automation, enabling it to maintain focus on a single task for over 30 hours. Haiku 4.5 delivered near-flagship performance at twice the speed and one-third the cost of Sonnet 4.

Key Features

GPT-5 and Claude 4.5 took different approaches to solving core agent system challenges. OpenAI prioritized automatic optimization and multimodal capabilities, while Anthropic focused on extended context and sustained reasoning.

Context and Reasoning Capabilities

GPT-5:

- Context window exceeds 256,000 tokens (roughly 150-200 pages per request)

- Built-in chain-of-thought breaks complex problems into logical steps

- Automatic routing between fast and reasoning modes based on query complexity

- Enhanced memory maintains context across conversations

Claude 4.5:

- Context window reaches 200,000 tokens across Opus, Sonnet, and Haiku

- Sonnet 4 and Sonnet 4.5 support up to 1 million tokens in beta through the API

- Extended thinking mode with controllable reasoning depth for Sonnet 4.5 and Haiku 4.5

- Sonnet 4.5 maintains focus for 30+ hour workflows without losing context
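Extended thinking is configured per request. The following is a minimal sketch of how an agent could request a shallow pass for triage and a deeper pass for review by varying `budget_tokens`. It assumes the Messages API request shape and the `claude-sonnet-4-5` model alias, so check both against Anthropic's current documentation:

```python
def build_thinking_request(prompt: str,
                           model: str = "claude-sonnet-4-5",  # assumed alias; verify in Anthropic's model list
                           max_tokens: int = 16_000,
                           budget_tokens: int = 8_000) -> dict:
    """Return a Messages API request body with extended thinking enabled."""
    # Anthropic requires a thinking budget of at least 1,024 tokens...
    if budget_tokens < 1_024:
        raise ValueError("budget_tokens must be at least 1,024")
    # ...and, for standard thinking, a budget below max_tokens,
    # because thinking tokens count toward the output limit.
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


# A shallow pass for triage and a deeper pass for cross-document review.
triage = build_thinking_request("Classify this RFP section.", budget_tokens=2_048)
review = build_thinking_request("List conflicting requirements across these specs.",
                                max_tokens=32_000, budget_tokens=24_000)
```

With the official `anthropic` Python SDK, you can pass the same fields as keyword arguments, for example `client.messages.create(**review)`. The budgets above are illustrative, not recommendations.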

Model Variants and Flexibility

GPT-5:

- Three variants: regular (maximum capability), mini (balanced), nano (high-volume simple tasks)

- Native multimodal input handles text and images in one API call, and the ChatGPT app adds voice interaction and image generation

- Strong performance across coding, analysis, writing, and decision-making tasks

Claude 4.5:

- Three variants: Opus (maximum capability), Sonnet (balanced), Haiku (fast and affordable)

- Haiku 4.5 delivers Sonnet 4-level coding at 2x speed and 1/3 cost

- Native multimodal support handles text and images

- Strong performance on document analysis, coding, and complex reasoning

- Knowledge cutoff around February 2025

Specialized Capabilities

GPT-5:

- In ChatGPT, automatic routing removes the choice between a fast model with weak reasoning and a reasoning model with slow responses

- The system switches seamlessly based on each query's specific needs

Claude 4.5:

- A "computer use" tool (in beta) lets the model operate desktop applications: it requests screenshots, mouse actions, and keystrokes through API calls, and your code carries them out

- 200,000 token context window handles lengthy RFPs or maintains state across long workflows without complex chunking strategies

- All variants handle deeply contextual tasks requiring sustained attention

The automatic routing in GPT-5 and extended context handling in Claude 4.5 represent distinct architectural advantages—GPT-5 optimizes for query-specific performance, while Claude 4.5 excels at maintaining coherence across extended workflows.
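The automatic router is part of the ChatGPT app. In the API, you choose `gpt-5`, `gpt-5-mini`, or `gpt-5-nano` yourself and can set a reasoning effort, so agent builders who want similar behavior have to route on the client side. Below is a minimal sketch. The `choose_route` heuristic and its thresholds are hypothetical and would need tuning on your own traffic:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str
    effort: str  # reasoning effort: "minimal", "low", "medium", or "high"


def choose_route(prompt: str, multi_step: bool = False) -> Route:
    """Hypothetical client-side heuristic; thresholds are illustrative, not tuned."""
    if multi_step:
        return Route("gpt-5", "high")          # planning and multi-step logic
    if len(prompt) > 4_000:
        return Route("gpt-5-mini", "medium")   # long but routine inputs
    return Route("gpt-5-nano", "minimal")      # short, high-volume tasks


def build_responses_body(prompt: str, multi_step: bool = False) -> dict:
    """Request body in the shape of an OpenAI Responses API call."""
    route = choose_route(prompt, multi_step)
    return {
        "model": route.model,
        "reasoning": {"effort": route.effort},
        "input": prompt,
    }


body = build_responses_body("Draft a one-line summary of this RFP section.")
```

With the official `openai` SDK, you could send the resulting dictionary as `client.responses.create(**body)`.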

API Access & Pricing

OpenAI and Anthropic both offer token-based pricing through managed API infrastructure, with different rate structures and optimization features.

Setup and Access

Setting up GPT-5 is straightforward: create an OpenAI account, add billing information, and start making API calls. The platform includes detailed documentation, SDKs for major programming languages, and tools for tracking usage and costs.

You can also access GPT-5 through standard ChatGPT subscription plans if you prefer the web interface over direct API integration.

Anthropic provides Claude access through its API, Amazon Bedrock, Google Vertex AI, and third-party gateways. Getting started requires an account with billing configured. Once set up, you can make API calls using your credentials. The platform includes documentation, SDKs, and usage tracking tools.

Pricing Structure

As of August 2025, OpenAI's API pricing breaks down as follows. GPT-5 costs $1.25 per million input tokens and $10 per million output tokens. GPT-5 mini runs $0.25 per million input tokens and $2 per million output tokens. GPT-5 nano runs $0.05 per million input tokens and $0.40 per million output tokens. The older o1 reasoning model costs $15 per million input tokens and $60 per million output tokens.

OpenAI offers enterprise pricing with volume discounts and dedicated support for high-volume operations.

As of October 2025, Claude pricing is: Opus 4 costs $15 per million input tokens and $75 per million output tokens. Sonnet 4/4.5 runs $3 per million input tokens and $15 per million output tokens.

Haiku 4.5 offers the most economical option in the 4.5 lineup at $1 per million input tokens and $5 per million output tokens. Opus delivers the highest capability, Sonnet balances performance with affordability for most production use, and Haiku handles high-throughput applications economically.
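Per-token list prices only become a budget once you multiply them by a session profile. This small sketch uses the Sonnet 4/4.5 and Opus 4 rates quoted above. The session profile of 50,000 input and 5,000 output tokens is a hypothetical example. The flat rates also ignore the higher pricing Anthropic applies to prompts above 200,000 tokens on the 1-million-token beta:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_m: float   # USD per million input tokens
    output_per_m: float  # USD per million output tokens


# List prices quoted above; re-check the providers' pricing pages before budgeting.
SONNET = Price(input_per_m=3.00, output_per_m=15.00)
OPUS = Price(input_per_m=15.00, output_per_m=75.00)


def session_cost(price: Price, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request or agent session."""
    return (input_tokens * price.input_per_m
            + output_tokens * price.output_per_m) / 1_000_000


def monthly_cost(price: Price, input_tokens: int, output_tokens: int,
                 sessions_per_day: int, days: int = 30) -> float:
    """Project a monthly bill from a typical session profile."""
    return session_cost(price, input_tokens, output_tokens) * sessions_per_day * days
```

For that profile, one session costs about $0.225 on Sonnet and $1.125 on Opus. At 1,000 Sonnet sessions a day, that comes to roughly $6,750 over 30 days. Swap in your own token counts and any other model's rates.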

Cost Optimization and Additional Options

GPT-5's developer platform provides detailed visibility into spending. You can track token consumption across different models and use cases, set up cost alerts, and optimize implementations based on actual usage patterns.

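Claude includes cost-saving features that reduce spending at scale. Prompt caching bills cache reads at 10% of the base input price, cutting input costs by up to 90% on repeated prompt prefixes. Batch processing through the Message Batches API saves 50% on non-urgent tasks that can run asynchronously.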
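The "up to 90%" figure applies to cached input tokens only, and writing to the cache costs extra. Here is a minimal sketch of the arithmetic. It assumes Anthropic's published multipliers for the default five-minute cache, where writes cost 1.25x the base input price and reads cost 0.1x. It also assumes every reuse lands within the cache lifetime:

```python
def uncached_input_cost(base_per_m: float, prefix_tokens: int, calls: int) -> float:
    """Input cost when the same prefix is re-sent at full price on every call."""
    return base_per_m * prefix_tokens * calls / 1_000_000


def cached_input_cost(base_per_m: float, prefix_tokens: int, calls: int,
                      write_multiplier: float = 1.25,
                      read_multiplier: float = 0.10) -> float:
    """Input cost when the prefix is written to the cache once, then read back.

    Assumes every call after the first lands within the cache lifetime.
    """
    if calls < 1:
        raise ValueError("calls must be at least 1")
    per_token = base_per_m / 1_000_000
    first_call = prefix_tokens * per_token * write_multiplier                # cache write
    later_calls = prefix_tokens * per_token * read_multiplier * (calls - 1)  # cache hits
    return first_call + later_calls


def batch_cost(standard_cost: float, discount: float = 0.50) -> float:
    """Apply the batch discount to a cost computed at standard rates."""
    return standard_cost * (1 - discount)


# A 100,000-token RFP reused across 10 questions at Sonnet's $3/M input rate.
full = uncached_input_cost(3.00, 100_000, 10)
cached = cached_input_cost(3.00, 100_000, 10)
```

In this model, reusing a 100,000-token RFP across 10 questions drops input cost from $3.00 to about $0.65, roughly 78% lower. The savings approach 90% as the number of reuses grows. Output tokens are billed normally either way.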

Beyond API access, Claude offers subscription plans: Free, Pro ($20/month), Team ($30/user/month), and Enterprise (custom pricing). Higher tiers include higher usage limits, priority access, and collaboration tools. These plans cover the Claude apps, while API usage is billed separately. The platform provides detailed dashboards for tracking usage, analyzing spending patterns, and setting up alerts.

ChatGPT vs Claude: Performance Comparison for AI Agent Development

How these models actually perform in production determines whether your agents meet accuracy requirements, stay within budget, and handle the workflows you're building them for. This comparison examines both models across the criteria that matter for AI agent architects.

Criteria #1: Document Processing and Multi-Document Analysis

Your agents need to process documents—RFPs, technical specifications, compliance documentation—and the model you choose determines how much they can handle at once.

With ChatGPT's GPT-4o and GPT-4 Turbo models, you get 128,000 tokens of context, roughly 100 pages per session. GPT-5 raises the API limit above 256,000 tokens. Your agents parse knowledge bases, extract insights from PDFs, and generate summaries.

Processing happens quickly, and you're moving through high volumes at a lower cost per session. The model completes individual document tasks efficiently. If your workflows depend on fast turnaround and you're processing dozens of documents daily, ChatGPT's speed and cost efficiency matter.

With Claude, you get 200,000 tokens of context on current Opus, Sonnet, and Haiku models. That's roughly 150-200 pages per request.

Your agents handle lengthy legal briefs, cross-reference multiple enterprise dossiers, and maintain full context without losing track halfway through. No chunking strategies. No complicated workarounds. The document fits, and Claude processes it.

The practical difference shows up when documents get long. One 80-page RFP? Both models handle it fine. Three 50-page technical specifications that reference each other?

Claude processes them together. With a 128,000-token window, ChatGPT needs you to build chunking logic, manage document segments, and hope context doesn't drop critical details during reassembly.
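Whether a document "fits" depends on its token count plus room for the model's reply. This rough sketch shows the fit check and the overlapping-window chunking that a smaller window forces. The four-characters-per-token ratio is a common heuristic for English text, not a tokenizer. When budgets are tight, use `tiktoken` for OpenAI models or Anthropic's token-counting endpoint:

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; about 4 characters per token is a common English heuristic."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, context_window: int, reserve_output: int = 8_000) -> bool:
    """True if the document plus room for the reply fits in one request."""
    return estimate_tokens(text) + reserve_output <= context_window


def chunk_text(text: str, max_tokens: int, overlap_tokens: int = 200,
               chars_per_token: float = 4.0) -> list[str]:
    """Split text into overlapping character windows sized to a token budget."""
    size = int(max_tokens * chars_per_token)
    step = size - int(overlap_tokens * chars_per_token)
    if step <= 0:
        raise ValueError("overlap must be smaller than the chunk size")
    chunks, start = [], 0
    while True:
        chunks.append(text[start:start + size])
        if start + size >= len(text):   # this window reached the end of the text
            return chunks
        start += step
```

Fixed character windows can split sentences and tables partway through. Chunkers for RFPs usually cut at headings or paragraphs instead, which is part of the infrastructure cost this section describes.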

If your agents regularly process documents exceeding 100 pages or need to cross-reference across multiple lengthy files, Claude's extended context eliminates the need for complex infrastructure.

Claude costs roughly 2.3 times as much per session. You're paying for processing capacity that ChatGPT's 128,000-token models can't match.

The choice comes down to document volume: ChatGPT for high-volume processing under 100 pages, where speed and cost matter, and Claude when extended context and multi-document analysis justify the premium.

Criteria #2: Code Generation and Technical Implementation

Your agents need to generate integration code, build API wrappers, and automate technical workflows. The model you choose determines how much time you spend debugging versus shipping features.

With ChatGPT, you get broad language coverage and fast iteration with GPT-5 and GPT-4o. The model generates code quickly and integrates smoothly with established development tools like GitHub Copilot and Replit.

You're building API wrappers or prototyping integrations? ChatGPT moves fast. You go from prompt to working code efficiently, keeping your development cycle moving.

With Claude, you get stronger stepwise reasoning with Claude Sonnet 4.5. The model generates fewer errors during long, multi-file projects.

It maintains architectural understanding across dependencies: tracking relationships between files, understanding how components interact, and producing more consistent code across large codebases.

The trade-off? Slower iteration, but fewer structural errors that require refactoring later.

The practical difference shows up in project complexity. A single API wrapper? Both models handle it fine. A multi-file integration with dependencies across dozens of modules? Claude maintains architectural consistency.
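One way to make "fewer structural errors" measurable for either model is to gate generated files before accepting them. Below is a minimal sketch. It assumes, hypothetically, that the model returns Python source as a filename-to-code mapping. It catches only syntax errors, so cross-file consistency still needs your tests or a type checker:

```python
def syntax_errors(files: dict[str, str]) -> dict[str, str]:
    """Map each generated Python file that fails to compile to its first error.

    compile() parses and byte-compiles the source without executing it.
    """
    errors = {}
    for name, source in files.items():
        try:
            compile(source, name, "exec")
        except SyntaxError as exc:
            errors[name] = f"line {exc.lineno}: {exc.msg}"
    return errors


# Hypothetical multi-file output from one generation round.
generated = {
    "client.py": "import json\n\ndef parse(payload):\n    return json.loads(payload)\n",
    "mapper.py": "def map_record(r:\n    return r\n",   # unclosed parenthesis
}
problems = syntax_errors(generated)
```

Tracking how often each model's output fails this gate, and how many repair rounds it takes to pass, gives you a project-specific measure of the debugging-versus-shipping trade-off.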

The choice comes down to project complexity: ChatGPT for fast iteration on straightforward integrations where speed matters, and Claude for slower iteration when architectural consistency across complex systems justifies it.

Criteria #3: Tool Selection and API Orchestration

Your agents need to call the right APIs and functions to complete tasks—the model you choose determines how often they get it wrong.

With ChatGPT, you get broad ecosystem access with GPT-5. The model integrates with a wide range of third-party services. It also works with OpenAI's built-in tools: web search, file search, and a code interpreter for Python and data visualization. It handles modular orchestration well when using multiple APIs simultaneously.

The advantage shows up in the breadth of integration. Do you need agents that can connect to diverse platforms? ChatGPT's integration ecosystem gives you more options out of the box. The model works smoothly with established developer tools and third-party services.

With Claude, you get precision over breadth with Claude 4.5. When your agent needs to look up customer data, Claude more reliably calls the correct CRM API rather than attempting database queries. When calculations are required, it consistently uses calculator tools rather than computing in text.

The model reasons more carefully about which tool matches each task, resulting in fewer incorrect API calls. This precision matters in compliance-sensitive environments where tool-selection errors can cause problems.
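Headline figures such as the 92% tool-selection accuracy cited earlier come from specific test sets, so it pays to measure on your own tasks. This minimal sketch scores a replay log of labeled prompts. The tool names and log entries are hypothetical:

```python
from collections import Counter
from typing import Iterable, Optional

ToolCall = tuple[str, Optional[str]]  # (expected tool, tool actually called or None)


def tool_selection_accuracy(log: Iterable[ToolCall]) -> float:
    """Share of test cases where the model called the expected tool."""
    pairs = list(log)
    if not pairs:
        return 0.0
    correct = sum(1 for expected, called in pairs if expected == called)
    return correct / len(pairs)


def confusions(log: Iterable[ToolCall]) -> Counter:
    """Count (expected, called) pairs for every wrong or missing call."""
    return Counter((e, c) for e, c in log if e != c)


# Hypothetical log from replaying four labeled prompts against one model.
log = [
    ("crm_lookup", "crm_lookup"),
    ("calculator", "calculator"),
    ("crm_lookup", "sql_query"),   # queried the database instead of the CRM API
    ("calculator", None),          # answered in text without calling the tool
]
```

The confusion counts show how a model fails, such as calling the database instead of the CRM or skipping the calculator. In compliance-sensitive workflows, that matters more than the single accuracy number.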

The challenge? Claude has fewer third-party integrations available. You're working with standard enterprise platforms? Covered. Highly specialized or niche APIs? You might need custom development work.

ChatGPT's broader ecosystem means faster integration across diverse platforms. Claude's deliberate approach to tool selection means fewer errors, especially valuable when compliance matters more than ecosystem breadth.
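Both APIs describe tools with JSON Schema, which makes it practical to define a tool once and test the same set against both models. Here is a minimal sketch. The `lookup_customer` and `calculate` tools are hypothetical, and their descriptions steer the model toward the CRM API and away from computing in text:

```python
# Hypothetical tool definitions in Anthropic's Messages API format.
LOOKUP_CUSTOMER = {
    "name": "lookup_customer",
    "description": ("Fetch a customer record from the CRM by customer ID. "
                    "Use this instead of querying the database directly."),
    "input_schema": {
        "type": "object",
        "properties": {"customer_id": {"type": "string", "description": "CRM customer ID"}},
        "required": ["customer_id"],
    },
}

CALCULATE = {
    "name": "calculate",
    "description": "Evaluate an arithmetic expression. Use for any numeric calculation.",
    "input_schema": {
        "type": "object",
        "properties": {"expression": {"type": "string"}},
        "required": ["expression"],
    },
}


def to_openai_tool(tool: dict) -> dict:
    """Convert an Anthropic-style tool to an OpenAI Chat Completions function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["input_schema"],  # the same JSON Schema object
        },
    }


anthropic_tools = [LOOKUP_CUSTOMER, CALCULATE]
openai_tools = [to_openai_tool(t) for t in anthropic_tools]
```

OpenAI's Responses API uses a flatter shape, with `type`, `name`, `description`, and `parameters` at the top level. Adjust the converter if you target that endpoint.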

The choice comes down to integration priorities: ChatGPT for diverse platform connections and broader ecosystem access, and Claude for compliance-sensitive workflows that require precise tool selection.

Criteria #4: Reasoning and Complex Workflow Planning

Your agents need to handle multi-step logic, decision trees, and workflow orchestration—the model you choose determines how well they navigate complexity without getting lost.

With ChatGPT, you get automatic routing between fast execution and deep reasoning with GPT-5. The model switches between GPT-5-main for straightforward tasks and GPT-5-thinking for complex problems requiring step-by-step logic.

This happens automatically based on what your query needs. The automatic routing balances speed with structured logic, handling diverse workflows without manual configuration. The model excels at completing tasks quickly across varying levels of complexity.

With Claude, you get sustained, deliberate reasoning with Claude Sonnet 4.5 and Opus. The model emphasizes deep, reflective analysis with an extended thinking mode that you can control based on how thoroughly you need problems examined.

Claude Sonnet 4.5 maintains focus on single tasks for over 30 hours without losing context—valuable for complex workflows requiring sustained attention. The model prioritizes precision during execution, reasoning carefully through each step before moving forward.

The choice comes down to workflow complexity: ChatGPT for fast task completion across varied workflows with automatic optimization, and Claude when complex decision trees require sustained reasoning and precision.

Criteria #5: Context Management and Long Conversations

Your agents need to maintain state across extended workflows and multi-turn interactions—the model you choose determines how well they remember what happened earlier without losing critical details.

With ChatGPT, you get 128,000 tokens of context, along with enhanced memory features that retain information across conversations. The model handles extended interactions well for most use cases.

Standard agent sessions with moderate turn counts work without issues. For very long sessions, you'll need more active prompt management to maintain context effectively.
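Active prompt management often starts with a sliding window over the conversation. This minimal sketch assumes OpenAI-style message dicts with `role` and string `content` fields, plus a token counter supplied by the caller. Dropped turns are simply discarded here, whereas many agents summarize them instead:

```python
from typing import Callable

Message = dict  # {"role": ..., "content": ...}


def trim_history(messages: list[Message], budget_tokens: int,
                 count_tokens: Callable[[str], int]) -> list[Message]:
    """Keep system messages plus the most recent turns that fit in budget_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(count_tokens(m["content"]) for m in system)
    kept: list[Message] = []
    for message in reversed(turns):          # walk from newest to oldest
        cost = count_tokens(message["content"])
        if used + cost > budget_tokens:
            break                            # stop at the first turn that doesn't fit
        kept.append(message)
        used += cost
    return system + kept[::-1]               # restore chronological order
```

Some APIs may expect the conversation to begin with a user turn, so you might need to drop a leading assistant message after trimming.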

With Claude, you get a 200,000 token window that provides better continuity across entire projects or multi-day dialogues. The model maintains memory integrity and consistent behavior across massive context windows, reducing the need to re-provide background information.

Claude Sonnet 4.5 maintains focus on single tasks for over 30 hours without losing context, which is valuable when workflows require sustained reasoning across extended periods.

The choice comes down to session duration: ChatGPT for short-term interactions with high turn counts where speed matters, and Claude when extended workflows require uninterrupted contextual recall across long sessions.

Criteria #6: Cost Structure and Scaling Economics

Your agents' operational costs determine whether your system stays viable as usage grows; the model you choose affects both initial expenses and long-term budget sustainability.

With ChatGPT, you get lower per-token costs. GPT-5 costs $1.25 per million input tokens and $10 per million output tokens. GPT-5 mini runs $0.25 per million input tokens and $2 per million output tokens. The older o1 reasoning model costs $15 per million input tokens and $60 per million output tokens.

The model also completes sessions faster, meaning lower API costs and better throughput when processing high volumes. For operations running thousands of agent sessions daily, these cost differences compound significantly.

With Claude, you get higher per-session costs but optimization features that reduce expenses at scale. Claude Opus 4 costs $15 per million input tokens and $75 per million output tokens. Claude Sonnet 4/4.5 runs $3 per million input tokens and $15 per million output tokens.

Claude Haiku 4.5 offers the most economical option in the 4.5 lineup at $1 per million input tokens and $5 per million output tokens.

However, Claude offers prompt caching (up to 90% savings on cached input tokens) and batch processing (50% savings for non-urgent tasks). These features become valuable at production scale.
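Caching only pays off when the expensive part of the prompt is byte-identical across calls and sits before the cache breakpoint. This minimal sketch builds a Messages API body that caches a long RFP and varies only the question. It assumes the `claude-sonnet-4-5` alias. Note that prefixes shorter than a model-specific minimum are not cached:

```python
def build_cached_request(reference_doc: str, question: str,
                         model: str = "claude-sonnet-4-5",  # assumed alias
                         max_tokens: int = 1_024) -> dict:
    """Messages API body that marks a long, reused document as a cacheable prefix."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            {"type": "text", "text": "Answer questions using only the attached RFP."},
            {
                "type": "text",
                "text": reference_doc,
                # Cache breakpoint: the prompt up to and including this block is cached.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes between calls, so it sits after the breakpoint.
        "messages": [{"role": "user", "content": question}],
    }


rfp_text = "..."  # placeholder for the full RFP text
first = build_cached_request(rfp_text, "What is the submission deadline?")
second = build_cached_request(rfp_text, "Which security certifications are required?")
```

The system blocks are identical in both requests. As a result, a second call made within the cache lifetime can read the RFP from cache instead of paying full input price for it.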

Subscription plans cost the same for web access: ChatGPT Plus and Claude Pro both cost $20/month, and both vendors offer custom enterprise pricing.

The choice comes down to operational scale: ChatGPT for high-volume operations with tight budgets, where lower base costs matter, and Claude for data-intensive workloads that justify higher costs through optimization features like prompt caching.

Questions to Ask Before You Decide

Before choosing between ChatGPT and Claude for your AI agent systems, ask these questions:

- "How many pages do our agents typically process per session?" Under 100 pages favors ChatGPT's speed and cost. Regularly exceeding 100 pages favors Claude's extended context without chunking.

- "Are we building single-file integrations or complex multi-file systems?" Quick API wrappers and prototypes favor ChatGPT's iteration speed. Multi-file systems with dozens of dependencies favor Claude's architectural consistency.

- "How often do incorrect API calls create compliance problems?" Standard integrations with room for error favor ChatGPT's broader ecosystem. Compliance-sensitive workflows favor Claude's deliberate tool selection.

- "Do tasks typically finish in minutes or run for hours?" Fast completion across varied tasks favors ChatGPT's automatic routing. Complex workflows that require sustained focus favor Claude's 30+ hours of context maintenance.

- "Are we running short agent sessions or extended dialogues?" Quick interactions with moderate turns favor ChatGPT's efficiency. Multi-day projects requiring continuity favor Claude's extended context window.

- "What's our daily session volume?" Thousands of sessions daily with tight budgets favor ChatGPT's lower base costs. Hundreds of complex sessions where accuracy justifies premium pricing favor Claude.

- "Do we process the same queries repeatedly?" Mostly unique requests favor ChatGPT's straightforward pricing. Repeated workflows at scale favor Claude's prompt caching (up to 90% savings on cached input).
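You can turn the questions above into a quick tally for design reviews. This minimal sketch gives each rule of thumb one equal vote. The equal weighting and the 1,000-sessions-per-day cutoff are assumptions. A real decision would weight the questions by what your workload actually stresses:

```python
from collections import Counter


def recommend(pages_per_session: int, multi_file_projects: bool,
              compliance_sensitive: bool, multi_hour_tasks: bool,
              multi_day_dialogues: bool, daily_sessions: int,
              repeated_prompts: bool) -> tuple[str, dict]:
    """Tally the seven rules of thumb above, one equally weighted vote each."""
    favors_claude = [
        pages_per_session > 100,      # long documents without chunking
        multi_file_projects,          # architectural consistency
        compliance_sensitive,         # deliberate tool selection
        multi_hour_tasks,             # sustained focus
        multi_day_dialogues,          # long-session continuity
        daily_sessions < 1_000,       # assumed cutoff: hundreds vs thousands per day
        repeated_prompts,             # prompt caching
    ]
    tally = Counter("Claude" if vote else "ChatGPT" for vote in favors_claude)
    winner = "Claude" if tally["Claude"] > tally["ChatGPT"] else "ChatGPT"
    return winner, dict(tally)
```

Seven votes means there are no ties. A 4-3 split is a signal to prototype on both models before committing.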

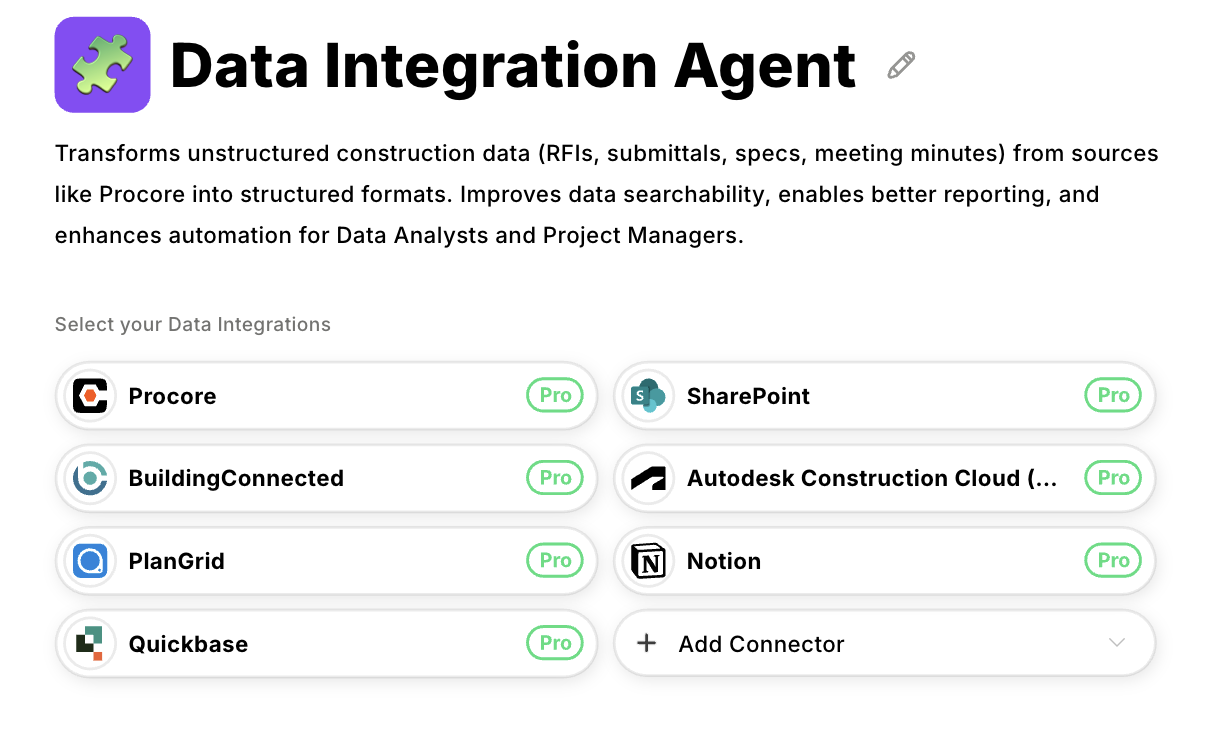

Datagrid’s AI Agents Automate Data Integration and Workflow Orchestration

You've chosen your model. Now comes the infrastructure work: connecting agents to Salesforce, HubSpot, Google Drive, and dozens of other platforms via custom connectors, and maintaining those integrations as APIs change. Your tenth agent needs fifteen integrations, and maintenance becomes a full-time job.

Datagrid provides pre-built AI agents with unified data access across 100+ enterprise platforms. Instead of building custom connectors, you configure connections through a single interface. When APIs change, Datagrid maintains the integrations while your team focuses on agent logic.

- Unified data access: Connect agents to Salesforce, HubSpot, Google Drive, Slack, and 100+ platforms without building custom integrations. Authentication, rate limiting, and data transformation happen automatically in the background.

- Pre-built agent capabilities: Deploy specialized agents for document processing, RFP analysis, and workflow automation immediately. Datagrid's agents handle infrastructure while you customize intelligence for your specific use cases.

- Managed orchestration: Build complex multi-step workflows without managing state, error handling, and retry logic yourself. The platform handles orchestration while you define business logic.

- Scalable architecture: Add new agents and data sources without exponentially increasing maintenance burden. Datagrid manages integration health across your entire agent ecosystem.

Your model choice—ChatGPT or Claude—determines agent capability. Datagrid determines whether you're building infrastructure or building intelligence.
