The complete guide for AI product managers shipping agent systems. Deploy your first agent in days, scale without integration hell, measure business impact.

Your calendar says "AI strategy," but your hours disappear inside brittle integrations and one-off data fixes. This guide shows you how to ship production-ready agents despite data silos, build orchestration frameworks that scale without runaway complexity, and translate technical metrics into stakeholder language that unlocks budget.

What you'll learn: This guide covers six essential areas for AI product managers: deploying your first agent without custom connectors, understanding production architecture requirements, aligning stakeholders and building business cases, measuring both technical and business impact, scaling systems without integration complexity, and implementing responsible deployment practices.

Prerequisites: This guide assumes familiarity with basic machine learning concepts (models, training, accuracy) and product management fundamentals (roadmaps, stakeholder management, metrics). No coding expertise required.

Deploy Your First AI Agent Without Building Custom Connectors

The five-phase deployment sprint sidesteps custom-connector traps while delivering measurable value:

Scope a high-impact workflow targeting repetitive, data-rich tasks where automation creates disproportionate ROI—invoice classification, support-ticket triage, prospect enrichment. Map current pain points and desired outcomes.

Audit data access and quality by verifying source systems expose required fields. Sample data for completeness, bias, and freshness. Understanding data pipeline requirements prevents production surprises.

Choose unified integration architecture over point-to-point connectors. A unified data layer (also called a data integration layer) is a single access point that exposes domain objects through governed APIs, eliminating the need for custom connectors between each agent and data source. This thin layer, owned by a small platform group, outlives any single automation and avoids maintenance thrash. Centralized platform thinking concentrates expertise and shared infrastructure.

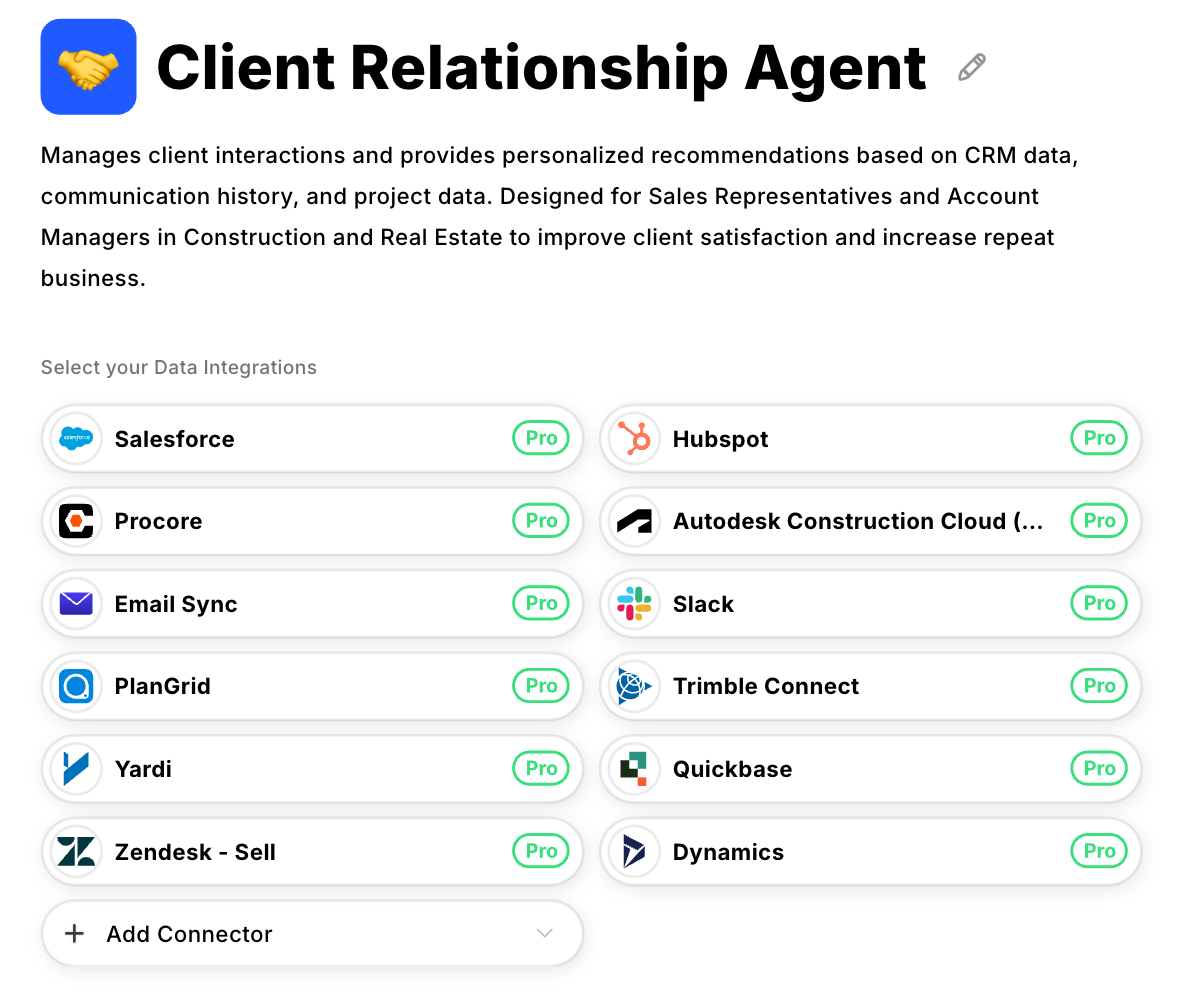

Platforms like Datagrid provide pre-built unified data layers with connections to 100+ business systems, allowing teams to deploy agents without building integration infrastructure from scratch.

Ship MVP using workflow automation tools or language-model toolkits. Keep scope minimal: one trigger, one decision, one action. Clear, context-rich prompts steer models toward usable outputs.

Instrument before production with dual metrics tying technical KPIs (accuracy, latency, robustness) to business outcomes like minutes saved or error rates cut. Real-time dashboards plus automated drift alerts enable continuous evaluation.

Skip common bottlenecks

Pull data via existing exports or reporting APIs first; upgrade to event streams after proving value. Package read-only data-access plans and governance checklists up front to demonstrate privacy guardrails early. Expose decision logs and confidence scores in plain English to counter "black box" anxiety.

A first system that automatically classifies emails, enriches CRM records, or validates purchase orders demonstrates value in days while the unified data layer creates headroom for future automation.

Key Takeaway: Deploy your first AI agent by focusing on one high-impact workflow, securing data access through unified integration architecture, and instrumenting dual metrics (technical and business) before production launch.

Build Production-Ready Agent Systems

Once you've deployed your first agent, the next challenge is building architecture that scales beyond proof-of-concept. Production agent systems require different technical decisions than traditional machine learning deployments.

Build systems that coordinate multiple agents

Single-model deployments route inputs to one endpoint and return predictions. Agent systems coordinate multiple specialized models, data sources, and business logic across workflows that span minutes or days. Your architecture must handle task queues, retry logic, state persistence, and context sharing between components that may not finish simultaneously.

This coordination (also called orchestration) demands purpose-built infrastructure. Lightweight runtimes manage dependencies so document-extraction agents can hand structured data to enrichment agents, which then trigger workflow-routing decisions. When one component fails or returns low-confidence results (outputs below a predefined threshold like 0.65), the system routes tasks to human review queues instead of forcing automation.

Coordinate multiple models efficiently

Production systems rarely rely on a single foundation model. Different models excel at different tasks: vision models extract text from PDFs, lightweight classifiers handle sentiment, and large language models synthesize summaries. Your architecture must route each request to the most efficient model that meets quality requirements, optimizing for speed and cost without sacrificing accuracy.
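The routing rule above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the model names, quality scores, and per-call costs are hypothetical placeholders, and in practice the quality numbers would come from your own evaluation set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str             # hypothetical model identifier
    quality: float        # accuracy measured on your own eval set, 0..1
    cost_per_call: float  # illustrative cost in dollars


def pick_model(options: list[ModelOption], min_quality: float) -> ModelOption:
    """Return the cheapest option whose measured quality meets the bar."""
    eligible = [m for m in options if m.quality >= min_quality]
    if not eligible:
        raise ValueError("no model meets the quality requirement")
    return min(eligible, key=lambda m: m.cost_per_call)


# Illustrative catalog; every value here is an assumption.
CATALOG = [
    ModelOption("small-classifier", quality=0.86, cost_per_call=0.0002),
    ModelOption("mid-llm", quality=0.93, cost_per_call=0.004),
    ModelOption("large-llm", quality=0.97, cost_per_call=0.03),
]

print(pick_model(CATALOG, min_quality=0.90).name)  # mid-llm
```

Raising the quality bar to 0.95 would select the larger model, which makes the cost of each extra point of accuracy visible in a budget conversation.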

Keep models single-purpose and composable. When compliance requirements change, you swap the clause-extraction model without rewriting workflow logic. This modularity protects production systems from constant refactoring as capabilities evolve.

Implement unified data layers to prevent scaling failures

Point-to-point connectors trap you in integration maintenance. A unified data layer exposes clean domain objects—Account, Ticket, Requirement—through governed APIs. The schema stays stable, so new systems read and write data without integration sprints. When finance introduces NetSuite next quarter, teams map it once and every existing workflow instantly understands the new data source.

Unified Data Layer vs. Point-to-Point Connectors:

- Unified approach: One integration point, all agents benefit, scales linearly with new data sources

- Point-to-point approach: Each agent needs its own connection to each data source, creating hundreds of custom integrations that require constant maintenance and break whenever source systems change
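To make the comparison concrete, the sketch below counts integrations under each approach. It assumes the simplest possible model: point-to-point needs one connector per agent and data source pair, while the unified layer needs one mapping per source plus one connection per agent. Real counts will vary, since not every agent needs every source.

```python
def point_to_point(agents: int, sources: int) -> int:
    """One custom connector for every agent/source pair."""
    return agents * sources


def unified_layer(agents: int, sources: int) -> int:
    """Each source is mapped once; each agent connects to the layer once."""
    return agents + sources


for agents, sources in [(3, 10), (10, 20), (25, 40)]:
    print(
        f"{agents} agents, {sources} sources: "
        f"{point_to_point(agents, sources)} connectors vs "
        f"{unified_layer(agents, sources)} integrations"
    )
# 3 agents, 10 sources: 30 connectors vs 13 integrations
# 10 agents, 20 sources: 200 connectors vs 30 integrations
# 25 agents, 40 sources: 1000 connectors vs 65 integrations
```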

Implementation requires change-data-capture pipelines (systems that stream data updates in near real-time) plus schema-on-read (flexible parsing that handles source changes without downtime). Each record carries metadata (source, timestamp, sensitivity label) enabling automatic access control and audit logging. This architecture creates a single source of truth that automation can trust.

Design human-in-the-loop oversight proportional to risk

Automated workflows demand oversight boundaries proportional to risk. Precision-critical tasks like regulatory filings require human pre-approval. Standard processes like invoice approvals run automatically with audit trails and sample reviews. High-volume operations like lead enrichment operate continuously with metric monitoring. Experimental features remain internal until proven reliable.

This risk-proportional structure gives stakeholders clear rationale for human involvement while maintaining processing efficiency. Your architecture must support configurable review thresholds—route outputs below confidence score 0.65 to human queues, log all decisions for audit, and capture reviewer feedback as training data.
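A configurable review threshold might look like the following sketch. The tier names, the `route` function, and the in-memory audit log are hypothetical; only the 0.65 threshold and the three routing outcomes come from the text above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PRECISION_CRITICAL = "precision_critical"  # e.g. regulatory filings
    STANDARD = "standard"                      # e.g. invoice approvals
    HIGH_VOLUME = "high_volume"                # e.g. lead enrichment


@dataclass
class AgentOutput:
    task_id: str
    tier: RiskTier
    confidence: float  # model confidence score, 0..1


REVIEW_THRESHOLD = 0.65  # threshold used in this guide; tune per workflow
audit_log: list[dict] = []  # stand-in for a durable audit store


def route(output: AgentOutput, threshold: float = REVIEW_THRESHOLD) -> str:
    """Decide who acts on an output and record the decision for audit."""
    if output.tier is RiskTier.PRECISION_CRITICAL:
        decision = "human_pre_approval"  # always reviewed before execution
    elif output.confidence < threshold:
        decision = "human_review_queue"  # low confidence goes to a person
    else:
        decision = "auto_execute"
    audit_log.append(
        {
            "task_id": output.task_id,
            "tier": output.tier.value,
            "confidence": output.confidence,
            "decision": decision,
        }
    )
    return decision


print(route(AgentOutput("inv-001", RiskTier.STANDARD, 0.91)))  # auto_execute
```

Reviewer accept/reject actions could be appended to the same log entries and exported as labeled training data.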

Key Takeaway: Production agent systems require coordination infrastructure for multi-model workflows, unified data layers to prevent integration sprawl, and risk-proportional human oversight with configurable review thresholds.

How to Align Stakeholders and Build Business Cases

Understanding production architecture is only half the challenge. The other half is securing stakeholder buy-in and building financial justification that unlocks budget and resources.

How do AI product managers prioritize agent workflows?

Assess candidate workflows on two operational attributes: data accessibility (how easily the required information can be accessed) and process complexity (the number of branches, approval gates, and exception paths). Workflows with high accessibility and low complexity can usually be automated fastest, delivering quick wins.

How do AI product managers calculate ROI for agent projects?

AI product managers present returns across three dimensions: time saved (hours teams spend on manual steps that agents will handle), error reduction (rework costs from manual entry mistakes that automation prevents), and scaling capacity (additional transaction volume the business can handle without hiring).

Agent ROI Calculation Framework:

- Benefits: Time saved (hours × wage rate) + Error reduction (rework costs avoided) + Capacity gains (revenue from additional volume)

- Costs: API fees + Compute expenses + Integration effort + Ongoing maintenance

- Presentation: Payback period in months + Net annual benefit for executive comparison

Net these gains against the costs (API fees, compute, integration effort, ongoing maintenance). Present the result as a payback period in months and a net annual benefit so executives can compare it with other investments.
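The framework above reduces to a short calculation. The sketch below is one way to arrange it; the field names and all dollar figures are illustrative assumptions, and "net annual benefit" is taken here to mean first-year net benefit after the upfront cost.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    hours_saved_per_month: float
    hourly_wage: float
    rework_avoided_per_month: float    # error reduction, in dollars
    capacity_revenue_per_month: float  # revenue from additional volume
    running_cost_per_month: float      # API fees + compute + maintenance
    upfront_cost: float                # integration effort


def monthly_net_benefit(r: RoiInputs) -> float:
    benefits = (
        r.hours_saved_per_month * r.hourly_wage
        + r.rework_avoided_per_month
        + r.capacity_revenue_per_month
    )
    return benefits - r.running_cost_per_month


def payback_months(r: RoiInputs) -> float:
    """Months until cumulative net benefit covers the upfront cost."""
    net = monthly_net_benefit(r)
    return float("inf") if net <= 0 else r.upfront_cost / net


def net_annual_benefit(r: RoiInputs) -> float:
    """First-year net benefit after subtracting the upfront cost."""
    return 12 * monthly_net_benefit(r) - r.upfront_cost


# Illustrative numbers only.
example = RoiInputs(100, 60, 1_500, 2_500, 2_000, 40_000)
print(payback_months(example))      # 5.0
print(net_annual_benefit(example))  # 56000.0
```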

Map investment against an Explore → Pilot → Scale → Optimize cadence. Exploration validates data availability and drafts lightweight prompts. Piloting ships one system on a narrow workflow slice (perhaps enriching new leads only, not the entire CRM). Scaling expands coverage, introduces fallback logic, and integrates monitoring. Optimization tunes prompts, adds multi-model routing, and hardens security reviews.

Real-world ROI examples:

Sales operations: An enrichment system auto-populating firmographics freed three sales reps from manual research work. Each rep gained 8 hours weekly for direct selling activities. The system drove $240,000 in additional pipeline within the first quarter.

Project management: Automation converting client emails into structured tickets reduced ticket-creation time by 70%. Project managers who previously spent 15 hours weekly on data entry now spend 4.5 hours, freeing 10.5 hours for strategic client work.

Map stakeholders and translate metrics effectively

Map every voice that can accelerate or stall work using an Impact-vs-Influence grid:

- High impact / high influence (executive sponsor, security lead)

- High impact / low influence (front-line users)

- Low impact / high influence (enterprise architects)

- Low impact / low influence (adjacent teams needing awareness)

Interview each group about workflow pain, feared risks, and desired wins. Translate metrics into outcomes each stakeholder tracks. For security leads, restate "token-level PII redaction" as "JSON logs that let audits take seconds instead of days." Connect accuracy metrics to existing KPIs like average handle time or revenue per rep.

Structure meeting cadence for continuous alignment

Three sessions maintain alignment, one kickoff and two recurring:

Discovery workshop (one-time, 2 hours) aligns on problem statement, data access boundaries, and success metrics.

Integration checkpoints (weekly, 30 minutes) let engineers demo improvements while data owners validate accuracy through trace logs.

Deployment reviews (monthly, 60 minutes) present metric trends in business language, surface risks, and confirm go/no-go decisions for rollout.

Negotiate data access with layered security controls

Offer graduated access options (read-only S3 buckets, time-boxed shared datasets, tokenized tables) paired with governance mechanisms: encryption at rest, access logs, row-level masking. Position yourself as a partner in risk reduction. Small technical concessions, explained in business terms, often dismantle outsized resistance.

Key Takeaway: Successful agent initiatives require workflow prioritization using data accessibility and process complexity, ROI calculations that translate technical metrics into financial outcomes (time saved, error reduction, capacity gains), and stakeholder mapping that addresses each group's specific concerns.

How to Measure AI Agent Performance and Business Impact

With stakeholders aligned and agents deployed, the next challenge is proving ongoing value through measurement systems that speak both engineering and finance languages.

AI Agent Measurement Framework:

- Technical metrics (Layer 1): Accuracy, precision, latency per record, throughput, data quality (null rates, schema drift), robustness under stress

- Business metrics (Layer 2): Time saved (hours eliminated), cost reduction (fewer API calls, reduced rework), capacity increases (higher throughput without headcount)

- Translation requirement: Map each technical metric to a financial outcome stakeholders already track

Track agent performance metrics

Track accuracy and precision (are extracted fields correct?) and benchmark them daily with quantitative evaluation metrics. Monitor processing speed (latency per record, throughput), data quality (null rates, schema drift, duplication), and robustness under stress through scenario-based tests.

Translate metrics into business outcomes

AI product managers must map technical measures to financial levers that executives recognize.

Time saved calculates labor hours eliminated when accuracy improvements skip manual review steps. If a more accurate agent processes 2,000 documents weekly with minimal human verification, multiply the minutes saved per document by employee wage rates to show dollar impact.

Cost reduction appears in two forms. Fewer retries and corrections mean fewer API calls and lower compute expenses. Lower error rates cut rework costs because teams spend less time fixing mistakes that automation prevents.

Capacity increases measure how higher throughput allows the business to handle more transaction volume without hiring additional staff. An agent that processes invoices twice as fast effectively doubles your accounts payable capacity without expanding headcount.

Translation example: A document-classification system improved from 88% to 94% accuracy. Suppose this 6-percentage-point gain reduced false positives enough to save ninety seconds of manual triage per record. The math: 2,000 documents weekly × 1.5 minutes saved × 4 weeks = 12,000 minutes, or 200 labor hours monthly. At $60 per hour, the accuracy improvement is worth $12,000 in monthly savings. That is a 15-month payback period if the initial agent investment was $180,000 (15 × $12,000).
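The arithmetic in the translation example can be checked in a few lines. The variable names are illustrative; the investment figure is not stated in the example, so the sketch derives the amount that a 15-month payback would imply.

```python
docs_per_week = 2_000
minutes_saved_per_doc = 1.5  # ninety seconds of manual triage
weeks_per_month = 4
hourly_rate = 60             # dollars per labor hour

hours_saved = docs_per_week * minutes_saved_per_doc * weeks_per_month / 60
monthly_savings = hours_saved * hourly_rate

# Assumption: payback period = initial investment / monthly savings,
# so a 15-month payback implies this investment.
payback_months = 15
implied_investment = payback_months * monthly_savings

print(hours_saved)         # 200.0
print(monthly_savings)     # 12000.0
print(implied_investment)  # 180000.0
```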

Monitor continuously and trigger retraining

Wire technical metrics into real-time dashboards. A typical production setup streams live metrics and error logs to shared views, triggers automated alerts when accuracy, latency, or drift crosses thresholds, and tags anomalies with context (input sample, model version, upstream system) so engineers can act immediately.

No matter how good the automation, regulated or high-risk actions need human oversight. Typical escalation logic includes mandatory human approval for low-confidence outputs (confidence score < 0.65) and regular audits that compare human and system decisions to refine evaluation rubrics. Some organizations also run periodic spot checks on transactions.

Watch three retraining signals: data drift (changes in data distribution indicating stale training data), performance decay (sustained KPI drops showing the model needs updating), and business requirement changes (new compliance rules or pricing updates that invalidate existing logic).
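The first two signals can be automated with simple checks. The sketch below uses the population stability index (PSI) over category shares as one possible drift measure and a consecutive-window rule for performance decay. The function names, the 0.2 PSI alert level (a common rule of thumb), and the tolerance values are assumptions to tune for your own data.

```python
import math
from collections import Counter


def psi(expected: list[str], actual: list[str], eps: float = 1e-6) -> float:
    """Population stability index between two categorical samples."""
    e_counts, a_counts = Counter(expected), Counter(actual)
    total = 0.0
    for category in set(expected) | set(actual):
        # Floor shares at eps so unseen categories do not divide by zero.
        e = max(e_counts[category] / len(expected), eps)
        a = max(a_counts[category] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


def sustained_drop(
    accuracies: list[float],
    baseline: float,
    tolerance: float = 0.02,
    windows: int = 3,
) -> bool:
    """True when the last `windows` readings all sit below baseline - tolerance."""
    recent = accuracies[-windows:]
    return len(recent) == windows and all(a < baseline - tolerance for a in recent)


# Illustrative data: document types at training time vs. this week.
training_mix = ["invoice"] * 80 + ["receipt"] * 20
current_mix = ["invoice"] * 40 + ["receipt"] * 60

if psi(training_mix, current_mix) > 0.2:
    print("data drift: review training data")
if sustained_drop([0.94, 0.91, 0.90, 0.89], baseline=0.94):
    print("performance decay: schedule retraining")
```

The third signal, a business requirement change, has no statistical test; it usually arrives through the stakeholder sessions rather than a dashboard.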

Key Takeaway: Effective measurement requires dual-layer frameworks tracking both technical health (accuracy, latency, data quality) and business impact (time saved, cost reduced, capacity gained), with continuous monitoring that triggers alerts when performance degrades or data distribution shifts.

How to Scale AI Agent Systems Without Custom Integration Complexity

Once you've proven value with early agents and established measurement practices, the challenge shifts to scaling from one system to dozens without drowning in custom integration work.

When to use unified data layers: Teams should implement unified data layers when managing 3+ agents, when data sources change frequently, when security reviews bottleneck every deployment, or when integration maintenance backlog exceeds development capacity for new features.

Implement unified data layers

Instead of wiring each workflow to individual platforms, expose clean domain objects through governed APIs, as described in the production architecture section. Because the schema stays stable, a new source such as NetSuite is mapped once and every existing workflow can read it without an integration sprint.

The same building blocks apply at scale: change-data-capture pipelines handle continuous synchronization, schema-on-read absorbs source changes, and per-record metadata (source, timestamp, sensitivity label) drives automatic access control and audit logging.

Agent platforms like Datagrid handle this integration complexity by providing specialized agents that already connect to major CRM, project management, and document storage systems, reducing implementation time from months to hours.

Set up agent coordination with developer-friendly tools

Lightweight runtimes handle task queues, retries, and context sharing between specialized systems. Keep payloads typed and idempotent, and expose versioned endpoints so QA can pin to v1 while experiments run against v2. Local simulators speed prompt tuning and error analysis.
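A typed, idempotent payload can be illustrated as follows. This is a minimal in-memory sketch: `EnrichmentTask`, `TaskProcessor`, and the idempotency-key scheme are hypothetical names, and a real runtime would persist results in a durable store rather than a dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnrichmentTask:
    idempotency_key: str         # same key means same logical request
    record_id: str
    schema_version: str = "v1"   # lets QA pin to a known contract


class TaskProcessor:
    def __init__(self) -> None:
        self._results: dict[str, dict] = {}
        self.executions = 0  # counts real work, not retries

    def handle(self, task: EnrichmentTask) -> dict:
        # A retried task returns the stored result instead of re-running.
        if task.idempotency_key in self._results:
            return self._results[task.idempotency_key]
        self.executions += 1
        result = {
            "record_id": task.record_id,
            "status": "enriched",
            "schema_version": task.schema_version,
        }
        self._results[task.idempotency_key] = result
        return result


processor = TaskProcessor()
task = EnrichmentTask(idempotency_key="lead-42:enrich", record_id="lead-42")
first = processor.handle(task)
retry = processor.handle(task)  # e.g. the queue redelivered the message
print(processor.executions)     # 1
```

Because retries are safe, the queue can redeliver aggressively after a failure without creating duplicate writes downstream.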

The security foundation is built into every layer. Every data layer call passes through a policy engine that enforces role-based access, field-level encryption, and audit logging. Systems see only the minimum data slice they need for their specific function.
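The "minimum data slice" idea can be sketched as a field filter keyed by role. The role names, field sets, and `read_record` function are hypothetical, and field-level encryption is left out for brevity; the sketch covers only role-based access and audit logging.

```python
from datetime import datetime, timezone

# Hypothetical policy: which fields each agent role may see.
ROLE_FIELDS: dict[str, set[str]] = {
    "invoice_validator": {"invoice_id", "amount", "vendor"},
    "lead_enricher": {"company", "domain"},
}


def read_record(role: str, record: dict, audit_log: list[dict]) -> dict:
    """Return only the fields the role is allowed to see, and log the access."""
    allowed = ROLE_FIELDS.get(role)
    timestamp = datetime.now(timezone.utc).isoformat()
    if allowed is None:
        audit_log.append({"role": role, "granted": False, "at": timestamp})
        raise PermissionError(f"role {role!r} has no data-access policy")
    visible = {k: v for k, v in record.items() if k in allowed}
    audit_log.append(
        {"role": role, "granted": True, "fields": sorted(visible), "at": timestamp}
    )
    return visible


log: list[dict] = []
invoice = {
    "invoice_id": "INV-1",
    "amount": 120.0,
    "vendor": "Acme",
    "bank_account": "redacted-example",
}
print(read_record("invoice_validator", invoice, log))
```

The bank account field never reaches the validator agent, and the audit log records which fields were released and when.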

Follow the four-stage scaling roadmap

Single Agent replaces one manual workflow. Teams should aim for at least 90% accuracy and a payback period under six months. Advance to the next stage when the agent maintains consistent performance over two release cycles without degradation.

Agent Expansion deploys 2-3 specialized systems that share a unified data layer. Teams should target 500+ hours saved quarterly across all systems combined. Advance when throughput remains stable across systems without performance degradation.

Coordinated Multi-Agent Systems connect multiple agents with dependency logic, where one agent's output becomes another's input. Teams can target a high rate of cross-system handoffs completed without human intervention. Advance when business metrics improve significantly (for example, by around 30%) without additional headcount. These targets are organization-specific, not formal industry standards.

Enterprise Platform offers self-serve templates under a common governance framework. Teams should enable new automation to launch in under two weeks from concept to production. Advance when more than 50% of new requests can be fulfilled by reusing existing patterns rather than requiring custom development.

Structure teams for scalable growth

Start with a lean squad (one PM, one engineer, one data scientist) for proof of concept. Scale through a hybrid model: a centralized platform group owns the data layer and orchestration runtime while embedded squads build domain automation. For customer-facing flows, embed specialists inside cross-functional squads.

Avoid common scaling failures

Most organizations encounter predictable failure patterns as they scale. Custom connectors multiply faster than teams can maintain them. No single owner governs data contracts, leading to schema drift across systems. Performance degrades silently without comprehensive monitoring. Security reviews create bottlenecks that block every deployment. Teams duplicate effort by tuning similar prompts in isolation rather than sharing learnings.

Platform thinking prevents these failures. Standardized ingestion pipelines and reusable transformation libraries mean new systems inherit proven patterns. A shared evaluation harness enables consistent benchmarking. Governance becomes automated: policy as code, backward-compatible schema migrations, and alerts that page the right owner.

Key Takeaway: Scaling agent systems requires unified data layers that eliminate custom connector sprawl, developer-friendly coordination tools, clear advancement criteria across four maturity stages (Single Agent → Agent Expansion → Coordinated Systems → Enterprise Platform), and platform thinking that treats integration as core infrastructure rather than one-off projects.

Deploy AI Agents Responsibly and Fix Common Failures

With production systems scaled across your organization, the final challenge is maintaining trust through responsible deployment practices and effective troubleshooting when issues arise.

Implement core oversight requirements

Define success in dual terms, technical accuracy and user impact, before coding. Run bias audits on training datasets with evaluation tools that flag skew and leakage early. Document decision logic in plain language for non-technical stakeholders.

Set up live monitoring for latency, errors, and drift. Embed human-in-the-loop circuit breakers for high-stakes actions—route tasks below confidence thresholds to operators.

Make agent decisions transparent with human oversight

Leading AI ethics and deployment guidelines call for system-level transparency and explainability, but they do not mandate that every agent output include an explanation of the top features, documents, or prompts that influenced it. Lightweight provenance tags on each output go further than the guidelines require and serve both debugging and audit needs. During early pilots, share these explanations in Slack or email digests so business owners see exactly why the system enriched a record or flagged a contract clause.

When your system drafts legal language, routes high-value invoices, or modifies customer records, route outputs through a reviewer queue. The reviewer's accept/reject actions become labeled data for the next training cycle, closing the feedback loop. Start with 100% review, then taper to spot checks as accuracy climbs.

Troubleshooting guide

Manage uncertainty proactively

Treat uncertainty as a continuous variable rather than a binary risk. Combine passive telemetry (user accept rates, correction patterns, error logs) with proactive probes—scenario tests, adversarial inputs, red-team drills—to reveal blind spots before they impact users. When monitoring dashboards flag confidence decay or accuracy drops, schedule a retraining sprint immediately instead of waiting for user complaints.

Key Takeaway: Responsible agent deployment requires defining success in both technical and business terms, running bias audits early, attaching lightweight provenance to agent decisions, routing high-stakes actions through human review, and treating uncertainty as a continuous variable that demands proactive monitoring rather than reactive fixes.

Ship AI Agents Without Integration Complexity

Building the agent systems described in this guide requires solving the data integration challenge that consumes most development time. Datagrid eliminates this bottleneck:

• Pre-built unified data layer: Connect agents to 100+ business systems through a single integration point instead of building custom connectors for each workflow. Deploy your first agent in days rather than months while avoiding the compounding maintenance burden of point-to-point integrations.

• Specialized agents for common workflows: Access purpose-built agents for document processing, prospect enrichment, and workflow automation that already understand your CRM, project management tools, and document storage systems. Skip the custom development phase and move directly to business value delivery.

• Enterprise-ready orchestration: Coordinate multiple agents with built-in task queues, retry logic, human-in-the-loop review thresholds, and continuous monitoring dashboards. Scale from single agent to enterprise platform without rebuilding coordination infrastructure.

Get started with Datagrid to deploy production agents without spending 80% of your time on data integration.

Quick-Reference: AI Agent System Terminology

Agent: A software worker that decides and acts within workflows by triggering actions, reasoning about data, and executing multi-step processes autonomously.

Model: The decision engine that processes data, such as a classification model that categorizes documents or a large language model that generates text.

Agent coordination (orchestration): The infrastructure that manages task handoffs, retry logic, and context sharing between specialized agents.

Unified data layer (data integration layer): A single access point that exposes domain objects through governed APIs, replacing brittle custom connectors and enabling all agents to access data through one integration point.

Point-to-point integration: Direct wiring between two systems that's quick to build initially but becomes costly to maintain because the number of connections multiplies with every agent and data source added.

Data enrichment: The process of automatically adding external or derived attributes (such as firmographics or sentiment scores) to raw records so agents can make more informed decisions.

Multi-model processing: Using different specialized models—for example, OCR for extracting text from images and LLMs for analyzing that text—within a single workflow.

Human-in-the-loop (HITL): A design pattern where critical actions pause for human review before execution, balancing automation speed with oversight and control.

Agent specialization: Narrowly focused agents (such as "invoice validator" or "contract analyzer") that excel at one specific task rather than generalist systems that attempt many different functions.

Robustness: An agent system's ability to continue functioning correctly when data schemas shift, edge cases appear, or upstream systems change unexpectedly.

Drift monitoring: Continuous checks for changes in data distribution or model performance that might erode accuracy over time, triggering alerts when degradation exceeds thresholds.

Explainability: Surfacing the reasoning or evidence behind an agent's actions to earn stakeholder trust and satisfy compliance requirements through transparency.