Stop wasting time on custom integrations and data prep. Discover 6 infrastructure mistakes that keep AI projects stuck and how unified platforms fix them.

Building AI agent systems for enterprises means deploying intelligent automation that delivers measurable business value. The daily reality looks different.

You spend months building custom API integrations, manually preparing datasets, debugging workflow code, and maintaining point-to-point connections. Each task is technical, but none of them involves building the intelligent agents you were hired to create.

This isn't an isolated problem. MIT research found 95% of generative AI pilots fail to reach production, with infrastructure complexity cited as a primary barrier.

The gap between prototype and production isn't about model capabilities. It's about the invisible infrastructure work that consumes quarters before agents can deploy.

This article breaks down six implementation mistakes that keep AI projects stuck in pilot mode and shows how unified platforms eliminate the infrastructure bottlenecks behind them.

Mistake #1: Building Point-to-Point Integrations

Every new AI use case triggers the same cycle: another custom API connection. Salesforce this week, SharePoint the next, Google Drive after that. Each integration feels manageable until you calculate the real cost. You need 40 to 80 hours per connection for authentication, field normalization, and retry logic.

Multiply that across a dozen enterprise systems, and you've spent months on data plumbing before AI agents process a single record. Six months later, the real costs emerge.
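To make that multiplication concrete, here is a rough back-of-the-envelope sketch. The 40 to 80 hours per connection and the dozen systems come from the figures above; the function name and the 40-hour engineer-week are assumptions added for illustration.

```python
def integration_hours(systems: int, low: int = 40, high: int = 80) -> tuple[int, int]:
    """Return the (low, high) engineering hours to build one custom connector per system."""
    return systems * low, systems * high


HOURS_PER_WEEK = 40  # assumption: one engineer working a standard week

low_hours, high_hours = integration_hours(12)  # "a dozen enterprise systems"
print(f"{low_hours}-{high_hours} engineer-hours")  # 480-960 engineer-hours
print(f"{low_hours / HOURS_PER_WEEK:.0f}-{high_hours / HOURS_PER_WEEK:.0f} engineer-weeks")  # 12-24 engineer-weeks
```

Even at the low end, that is roughly three months of one engineer's time before any agent work begins, and it excludes ongoing maintenance.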

API changes break connections during critical business periods. When developers leave, undocumented endpoints fail silently.

The three-person AI team that should be training agents for document analysis spends its time debugging OAuth flows instead.

Agent projects stall. Business stakeholders expected working prototypes in weeks, but the team is still building data access infrastructure after months.

Each new workflow requiring additional data sources means another custom connector project. The team maintains dozens of integration scripts with different authentication patterns, error handling, and retry logic.
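As an illustration of the kind of plumbing that ends up duplicated across those scripts, the following is a minimal sketch of retry logic with exponential backoff. The helper name `with_retry`, the choice of `ConnectionError`, and the default delays are assumptions for this example, not any particular vendor's implementation.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retry(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying on ConnectionError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return call()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error instead of failing silently
            sleep(base_delay * 2 ** attempt)  # wait 0.5s, 1s, 2s, ...
    raise ValueError("attempts must be at least 1")  # only reached when attempts < 1


# Usage with a hypothetical flaky endpoint that fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary outage")
    return "record"


delays: list[float] = []
result = with_retry(flaky_fetch, sleep=delays.append)  # record delays instead of sleeping
print(result, delays)
```

Every custom connector needs some version of this, which is why a dozen integrations written by different people tend to end up with a dozen slightly different variants.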

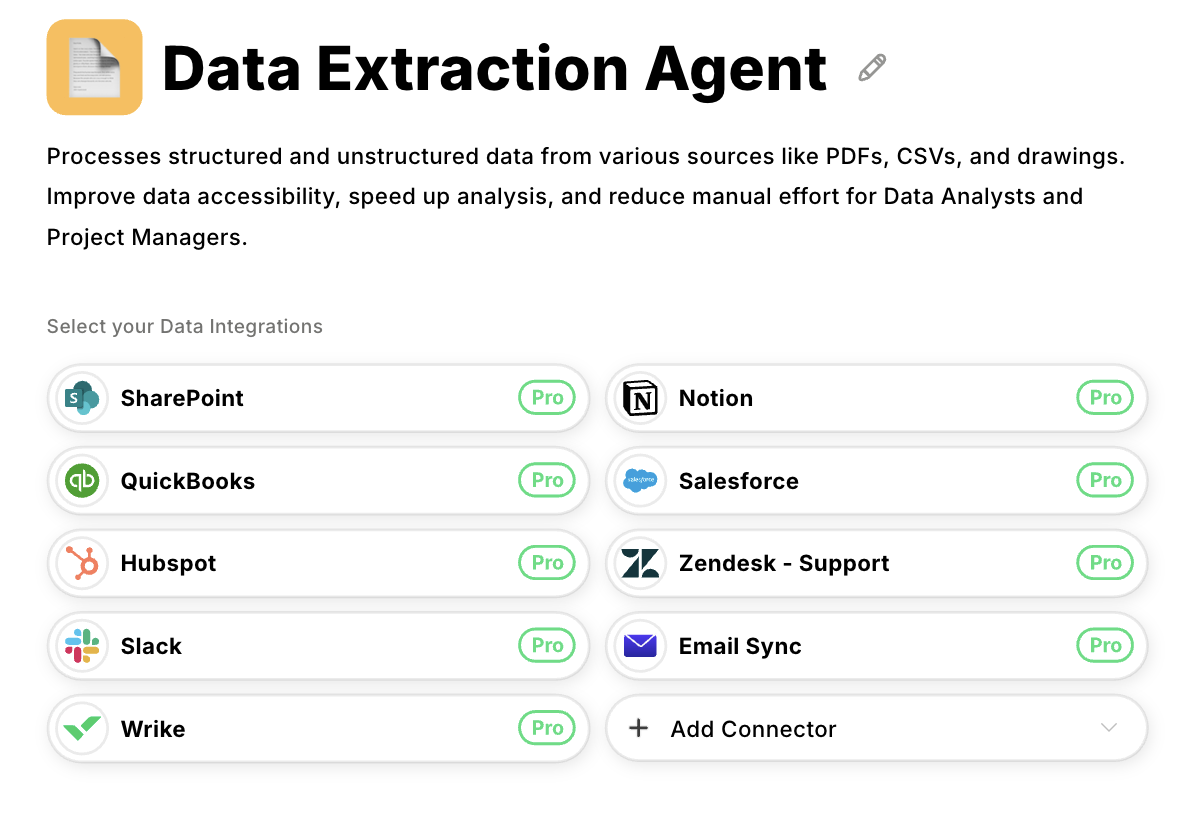

But there is a solution. Instead of building point-to-point connections, you can use unified platforms with pre-built connectors to enterprise systems.

These platforms handle the authentication, error recovery, and ongoing maintenance automatically. Integration time drops from weeks to hours because the infrastructure already exists. When you need to connect a new data source, you configure it rather than code it.

Datagrid provides this infrastructure with native connections to Salesforce, SharePoint, AWS S3, and 100+ other enterprise systems.

You can deploy agents that access organizational data in days rather than quarters. Adding new data sources takes hours instead of development sprints.

Mistake #2: Attempting Manual Data Enrichment at Enterprise Scale

Organizations prepare data for agents manually: reviewing records, researching missing information, standardizing formats, and labeling datasets.

Teams assign people to enrich contact records from LinkedIn, verify company information from websites, extract metadata from documents, and categorize unstructured text.

Here's where the problem starts. A single record takes 12 minutes to enrich across multiple sources. For 1,000 records, that's 200 hours, five solid weeks before agents can touch the data.
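The arithmetic behind that estimate can be sketched in a few lines. The 12 minutes per record and the 1,000 records are the figures quoted above; the 40-hour work week used to convert hours into weeks is an assumption.

```python
def manual_enrichment_hours(records: int, minutes_per_record: float = 12) -> float:
    """Hours of manual effort to enrich a batch of records one at a time."""
    return records * minutes_per_record / 60


HOURS_PER_WEEK = 40  # assumption: one person working full time

hours = manual_enrichment_hours(1_000)
print(hours)                   # 200.0
print(hours / HOURS_PER_WEEK)  # 5.0
```

The effort grows linearly with volume, so ten times the records means ten times the weeks unless more people are added.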

Documents are worse. Processing 10,000 PDFs manually creates backlogs measured in months, not weeks. Agent training projects sit waiting for clean datasets while new data keeps arriving. The backlog grows faster than teams can clear it.

The situation compounds quickly. AI teams can't build workflows because all their capacity goes to data preparation. Three months later, teams are still cleaning data. Meanwhile, competitors with automated systems are already deploying agents and learning what works.

The downstream effects become obvious. Models trained on incomplete data produce unreliable results. Business teams test the agents, see poor accuracy, and lose confidence.

But there's a different approach. Automated enrichment processes thousands of records overnight, pulling from multiple sources, checking accuracy, and keeping data current. Work that took 200 hours completes while teams sleep.

Datagrid agents handle the preparation automatically, cleaning files, removing duplicates, adding firmographic data, and standardizing formats. What took months to do manually now takes days.

The speed difference matters. Automated enrichment lets teams deploy five to ten times faster than manual approaches. Every week spent manually preparing data is a week competitors spend improving deployed capabilities.

Mistake #3: Deploying Sequential Processing for High-Volume Document Workflows

Organizations deploy agents that process documents one at a time: analyze a document, extract information, generate output, and then move to the next one. Sequential processing works fine during a proof of concept with a dozen documents, so teams assume it scales to enterprise volumes.

Here's where things break down. A single RFP takes 15-20 minutes to analyze, so processing 1,000 documents sequentially requires 250 to 333 hours, or nearly two weeks of continuous operation. The real problem isn't just speed. It's unpredictability.

Teams can't promise turnaround times because they don't know where documents sit in the queue. A proposal manager submits an urgent RFP and gets the same response as routine work: "It's in the queue."

The operational breakdown happens fast. Urgent requests sit behind routine documents. Business teams watch competitors respond to opportunities faster. They start bypassing the AI system entirely, going back to manual processing because at least they control the timeline. The system that should accelerate operations becomes the bottleneck nobody trusts.

Parallel processing eliminates the throughput bottleneck. Multiple specialized agents handle thousands of documents simultaneously. Processing time becomes predictable. A backlog that takes weeks to complete sequentially can take hours with parallel execution.
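A minimal sketch of the difference, using Python's standard `concurrent.futures` module. The `analyze_document` function is a hypothetical stand-in for an agent call, the worker counts are assumptions, and the time estimate assumes ideal linear scaling, which real systems only approximate.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def analyze_document(doc_id: int) -> dict:
    """Hypothetical stand-in for one agent analyzing one document."""
    time.sleep(0.01)  # simulate I/O-bound work such as a model API call
    return {"doc_id": doc_id, "status": "done"}


def process_sequential(doc_ids) -> list[dict]:
    """Handle documents one at a time, in order."""
    return [analyze_document(d) for d in doc_ids]


def process_parallel(doc_ids, workers: int = 8) -> list[dict]:
    """Handle documents concurrently; pool.map keeps results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(analyze_document, doc_ids))


def estimated_hours(documents: int, minutes_per_doc: float, workers: int = 1) -> float:
    """Idealized wall-clock hours, assuming work divides evenly across workers."""
    return documents * minutes_per_doc / 60 / workers


results = process_parallel(range(20))
print(len(results))

print(f"{estimated_hours(1_000, 20):.1f} hours sequentially")
print(f"{estimated_hours(1_000, 20, workers=50):.1f} hours with 50 workers")
```

Threads suit this case because the work is mostly waiting on external services. Under the stated assumptions, the same 1,000-document backlog drops from about 333 hours to under 7.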

Datagrid orchestrates this parallel work across specialized agents. One extracts tables, another analyzes requirements, and another cross-references historical data, all running simultaneously. Processing that takes days sequentially completes in hours.

Teams respond to competitive bids the same day and meet compliance deadlines that sequential systems miss.

Mistake #4: Training General-Purpose Agents without Domain Specialization

AI teams build general-purpose agents trained on broad datasets, aiming to create flexible systems that handle diverse tasks across industries.

The logic seems sound: a single agent capable of processing any document type, analyzing any data format, and handling any business workflow eliminates the need to maintain multiple specialized systems.

Here's where performance breaks down. General agents processing insurance claims achieve 70-75% accuracy because they lack domain-specific understanding of policy types, coverage terminology, and regulatory requirements.

When analyzing construction RFPs, they miss critical specifications buried in technical requirements because they don't recognize industry-standard terminology. When processing financial documents, they fail to extract data in the required compliance formats.

Business teams refuse to deploy agents that require manual review of every output, because the automation then provides no real benefit. AI teams enter endless training cycles: collect more data, retrain models, test again, discover new accuracy problems, repeat.

Each cycle takes weeks. The model grows more complex and harder to maintain but still underperforms in specialized domains.

Teams that successfully deploy agents take a different approach. They use purpose-built agents trained specifically for RFP analysis, PDF data extraction, or insurance claim processing. These specialized agents achieve 95%+ accuracy because they understand domain-specific requirements, terminology, and regulations.

They reach production faster by focusing on narrow, well-defined capabilities instead of attempting broad general intelligence.

Datagrid provides pre-trained specialists for RFP analysis, PDF data extraction, and document cross-referencing, each with domain-specific knowledge of construction formats, insurance terminology, and regulatory frameworks. Teams deploy these agents in days rather than spending months training general models.

Mistake #5: Managing Agent Workflows Through Custom Code

Organizations write custom code for each agent workflow: Python scripts, Node.js applications, or integration platforms that require programming for every automation sequence.

Teams code each step: extract data from documents, enrich information, validate against rules, trigger notifications, and update systems. A moderately complex workflow takes 40-60 hours to develop with error handling, logging, retry logic, and testing.

The maintenance burden grows over time. Each requirement change means updating code, testing, and redeployment. Twenty workflows across different business processes create hundreds of code files with complex dependencies.

A developer who built a critical workflow leaves, and knowledge about the custom retry logic disappears with them. API responses change, and workflows break in production.

Here's a common scenario. A customer churn prediction workflow pulls engagement metrics from the CRM, analyzes support ticket sentiment, scores account health, and triggers alerts for at-risk customers.

Six months later, the business wants to add product usage data from a new analytics platform.

This simple addition requires updating the data collection script, modifying the scoring algorithm, adjusting the database schema, testing the changes, and redeploying. A quick enhancement becomes a three-week development project.

Unified platforms eliminate this custom code burden. Instead of writing scripts for each workflow, teams use visual workflow designers and pre-built action templates. Changes happen through configuration rather than code deployment. Adding new data sources takes hours instead of weeks.
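To illustrate what "configuration rather than code" can look like, here is a simplified sketch of the churn workflow described earlier, expressed as an ordered list of named steps. Everything in it is hypothetical: the step names, the scoring formulas, and the `run_workflow` helper are assumptions for illustration and do not represent Datagrid's actual interface.

```python
from typing import Callable

Account = dict
STEPS: dict[str, Callable[[Account], Account]] = {}  # hypothetical registry of reusable steps


def step(name: str):
    """Register a function as a named, reusable workflow step."""
    def register(fn: Callable[[Account], Account]):
        STEPS[name] = fn
        return fn
    return register


@step("crm_engagement")
def crm_engagement(account: Account) -> Account:
    return {**account, "engagement": min(account["logins_30d"] / 30, 1.0)}


@step("ticket_sentiment")
def ticket_sentiment(account: Account) -> Account:
    negative_share = account["negative_tickets"] / max(account["total_tickets"], 1)
    return {**account, "sentiment": 1 - negative_share}


@step("product_usage")
def product_usage(account: Account) -> Account:
    return {**account, "usage": min(account["active_features"] / 10, 1.0)}


@step("score_health")
def score_health(account: Account) -> Account:
    # Average whichever signals earlier steps produced, so new signals need no scoring change.
    signals = [account[k] for k in ("engagement", "sentiment", "usage") if k in account]
    return {**account, "health": sum(signals) / len(signals)}


def run_workflow(config: list[str], account: Account) -> Account:
    """Apply the configured steps in order."""
    for name in config:
        account = STEPS[name](account)
    return account


# The workflow itself is just data. Adding product usage is a one-line config change.
churn_v1 = ["crm_engagement", "ticket_sentiment", "score_health"]
churn_v2 = ["crm_engagement", "ticket_sentiment", "product_usage", "score_health"]

account = {"logins_30d": 15, "negative_tickets": 1, "total_tickets": 4, "active_features": 2}
print(run_workflow(churn_v1, account)["health"])
print(round(run_workflow(churn_v2, account)["health"], 3))
```

The design point is that the steps are written once and reused, while each workflow is a list that can be edited without touching or redeploying the step code.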

With Datagrid, workflows in which agents track customer churn, generate responses, trigger notifications, and update CRM records are set up through configuration.

Workflow development drops from 40-60 hours to 2-4 hours. Modifications that required week-long development cycles now take hours.

Mistake #6: Committing to a Single AI Model Across All Use Cases

Enterprises often standardize on a single AI model for every agent use case to reduce complexity, consolidate vendor relationships, and align team skills, then build all agent capabilities on that foundation.

The approach creates trade-offs. Document analysis requires strong reasoning capabilities. Creative content generation needs natural language fluency. High-volume data extraction prioritizes speed and cost efficiency. Each use case has optimal model characteristics, but single-model architectures force one choice for everything.

Consider the cost implications. Premium models excel at complex reasoning but cost 10-15 times as much per token as smaller models. For example, processing 10,000 documents monthly with a premium model can cost $5,000.

An efficient model handles the same volume for about $500 when the task is simple extraction. Using premium models for every workflow creates unsustainable cloud costs. Using only efficient models sacrifices accuracy in complex analysis.

With a single model, teams end up trading acceptable performance against budget constraints and cannot get both.

Platforms supporting multiple models solve this constraint. Teams match models to tasks: premium models for complex analysis, efficient models for high-volume extraction, specialized models for multimodal processing.
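A rough sketch of task-based routing and its cost effect. The per-document prices are derived from the article's example figures ($5,000 and $500 per 10,000 documents); the task names, the routing table, and the 50/50 workload split are assumptions chosen purely for illustration.

```python
# Cost per document in cents, derived from $5,000 and $500 per 10,000 documents.
COST_CENTS_PER_DOC = {"premium": 50, "efficient": 5}

# Hypothetical routing table: which model tier handles which task type.
ROUTES = {"complex_analysis": "premium", "simple_extraction": "efficient"}


def monthly_cost(volumes: dict[str, int], routes: dict[str, str]) -> float:
    """Monthly cost in dollars for the given document volumes and routing table."""
    cents = sum(count * COST_CENTS_PER_DOC[routes[task]] for task, count in volumes.items())
    return cents / 100


# Assumed workload: 10,000 documents a month, half of them needing complex analysis.
volumes = {"complex_analysis": 5_000, "simple_extraction": 5_000}

all_premium = monthly_cost(volumes, {task: "premium" for task in volumes})
routed = monthly_cost(volumes, ROUTES)
savings = 1 - routed / all_premium

print(all_premium)        # 5000.0
print(routed)             # 2750.0
print(f"{savings:.0%}")   # 45%
```

The actual savings depend entirely on the workload mix: the larger the share of simple extraction, the bigger the reduction. Changing a route here is a one-line edit to the table, not a change to the code that calls the models.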

Datagrid enables teams to choose from different LLMs based on task requirements. Model selection happens through configuration rather than architectural redesign.

Take Your AI Projects From Pilot to Production in Weeks

Custom infrastructure limits everything else. Your team can't deploy agents because building integrations takes months. Manual data preparation takes weeks more.

Workflow coding consumes additional capacity. You're stuck between thorough engineering and rapid deployment, but enterprise AI requires both.

Datagrid eliminates these infrastructure bottlenecks.

- Connect to 100+ enterprise systems without custom code: Access Salesforce, SharePoint, AWS S3, and other platforms through pre-built integrations. Work that normally takes your team weeks of development happens through configuration.

- Automate data preparation at scale: Process thousands of records overnight with AI-powered enrichment. What takes weeks of manual research completes while your team sleeps.

- Deploy specialized agents immediately: Use pre-trained agents for RFP analysis, document extraction, and compliance checking. Skip months of training general models and ship domain experts in days.

- Configure workflows instead of coding them: Build multi-step agent coordination through visual designers. Changes that required week-long development cycles now take hours.

- Select optimal models per task: Choose from multiple AI models based on task requirements. Reduce costs 40-60% while maintaining accuracy where it matters.

- Scale agent capacity without infrastructure overhead: Teams managing pilot projects can handle production deployments because infrastructure complexity no longer constrains velocity.

Ready to eliminate infrastructure work from your AI projects?