Learn how to build and track customer health scores without manual data gathering. See how AI agents automate the work that takes CSMs hours daily.

Customer Success Managers spend more time gathering health data than managing relationships: logging into Zendesk for support tickets, switching to analytics for usage data, checking Stripe for billing signals, and scanning HubSpot for engagement.

This scattered data creates a timing problem. Manual gathering makes customer success reactive: by the time you compile health data across your portfolio, accounts have already changed.

Churn signals appear in support tickets while engagement drops in analytics, but no one connects the dots because reviews take too long. Health scores enable proactive customer success, but only with continuous visibility, not quarterly reviews that are outdated as soon as they're finished.

This article covers how to build and track customer health scores without manual data gathering that bottlenecks the process.

What is a Customer Health Score?

A customer health score measures account well-being by combining multiple data points into a single metric.

You're looking at product usage frequency, support ticket patterns, billing status, feature adoption rates, and engagement levels. These signals get aggregated to predict whether an account will renew, expand, or churn.

Think of it as a diagnostic tool. A doctor doesn't just check your temperature; they look at multiple vital signs to understand your overall health. Same principle here.

High scores indicate satisfied, engaged accounts likely to stick around or grow. Low scores signal trouble brewing. Mid-range scores? Those accounts need attention, but aren't in crisis mode yet.

Here's where it gets tricky. Health scores only work when you can actually pull all that information together. Usage data lives in your analytics platform. Support tickets sit in Zendesk. Billing information exists in Stripe.

Engagement metrics are tracked in HubSpot. Communication history spans email threads and meeting notes scattered across systems. Compiling this manually? That's where the real work begins, and where most teams get stuck.

Why is Customer Health Score Important?

Health scores change how customer success works. Instead of reacting to problems, you catch them early. Here's what that looks like:

- Early churn identification saves revenue. Without health scores, you discover risk during renewal conversations—too late for real intervention. With scores, declining accounts surface months early when you can actually do something about it. Retention improves because you catch problems before customers start evaluating alternatives.

- Expansion opportunities stop hiding. High scores plus usage approaching limits? Expansion candidates. They're satisfied and constrained—ideal upsell timing. Manual review rarely catches this because satisfaction data and usage data live in different systems.

- Prioritization becomes objective. Without scores, you're responding to whoever makes the most noise. With scores, you're working from data. The account that dropped 15 points gets more attention than the stable, high-performing account with a louder executive sponsor.

- Portfolio capacity increases. Most CSMs hit a wall around 50-75 accounts manually. Health scores change that. Handle 150+ accounts by reviewing scores and investigating exceptions instead of compiling data for every account repeatedly.
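To make the expansion and prioritization points concrete, here is a minimal sketch. The `Account` fields, the 80-point score cutoff, and the 90% seat-utilization cutoff are all illustrative assumptions, not values from any particular platform; "usage approaching limits" is modeled here as seats used versus seats purchased.

```python
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    score: float           # current health score, assumed 0-100
    previous_score: float  # score at the last review
    seats_used: int
    seat_limit: int


def expansion_candidates(accounts, min_score=80, min_utilization=0.9):
    """High score plus usage near the plan limit (thresholds are assumptions)."""
    return [
        a.name
        for a in accounts
        if a.score >= min_score and a.seats_used / a.seat_limit >= min_utilization
    ]


def by_largest_drop(accounts):
    """Order accounts so the biggest score decline comes first."""
    return sorted(accounts, key=lambda a: a.score - a.previous_score)


portfolio = [
    Account("Acme", score=88, previous_score=86, seats_used=47, seat_limit=50),
    Account("Globex", score=62, previous_score=77, seats_used=20, seat_limit=50),
    Account("Initech", score=90, previous_score=91, seats_used=10, seat_limit=50),
]

print(expansion_candidates(portfolio))               # ['Acme']
print([a.name for a in by_largest_drop(portfolio)])  # ['Globex', 'Initech', 'Acme']
```

Globex, the account that dropped 15 points, lands at the top of the review queue regardless of which sponsor is loudest.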

The catch is timing. Quarterly reviews miss what matters most: the gradual declines. An account losing ground slowly over three months looks stable in any single quarterly check. You only see the problem when it's too late.

How is Customer Health Score Calculated?

Health scores combine weighted metrics across multiple categories. Companies adjust the weights based on what predicts churn in their specific business, but a typical formula looks something like this:

Customer Health Score = (Usage × 0.30) + (Feature Adoption × 0.20) + (Support Health × 0.20) + (Payment Status × 0.15) + (Engagement × 0.15)

Where:

- Usage = Product usage frequency and depth

- Feature Adoption = Breadth of features being used

- Support Health = Ticket volume and sentiment trends

- Payment Status = Billing timeliness and payment history

- Engagement = Response rates and meeting attendance
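As a rough illustration, the formula above can be written as a small function. This sketch assumes each component has already been normalized to a 0-100 scale; the dictionary keys and the sample account are made up for the example.

```python
# Weights taken from the formula above; the key names are illustrative.
WEIGHTS = {
    "usage": 0.30,
    "feature_adoption": 0.20,
    "support_health": 0.20,
    "payment_status": 0.15,
    "engagement": 0.15,
}


def health_score(components: dict[str, float]) -> float:
    """Weighted sum of component scores, each assumed to be on a 0-100 scale."""
    missing = WEIGHTS.keys() - components.keys()
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    return sum(WEIGHTS[name] * components[name] for name in WEIGHTS)


# Hypothetical account with already-normalized component scores.
account = {
    "usage": 80,
    "feature_adoption": 60,
    "support_health": 70,
    "payment_status": 100,
    "engagement": 50,
}

print(round(health_score(account), 2))  # 72.5
```

Because the weights sum to 1.0, the result stays on the same 0-100 scale as the inputs, which makes it easy to compare scores across accounts.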

Usage and feature adoption data come from product analytics platforms. You're exporting user logins, session duration, and feature usage, then normalizing metrics so they're comparable.

Feature adoption requires calculating what percentage of available features customers actually use. The data exists but needs to be extracted and calculated.

Support health metrics live in your support platform. You're tracking tickets per month, resolution times, and escalation frequency. Manual gathering means exporting ticket data per account and calculating metrics.

The challenge is volume; hundreds of accounts generating thousands of monthly tickets means significant extraction time.

Payment status data comes from billing systems. You're tracking payment timeliness, failed attempts, and trends in contract value. Export from billing, calculate metrics, and integrate into scoring. Payment data is cleaner than other components, but rarely flows automatically into health calculations.

Engagement data is messiest. It comes from multiple sources, including email tools, calendar systems, and customer success platforms. You're checking multiple systems per account, calculating response rates, and reviewing attendance patterns. The subjective nature makes this valuable but challenging.

Each metric needs normalization. Apply your formula, calculate the score, and repeat for every account. For a few hundred accounts, you're looking at days of work.
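One common way to normalize is min-max scaling across the portfolio, sketched below. This is an assumed approach, not the only option: it rescales each raw metric to 0-100 and flips metrics where lower is better, such as ticket counts. The feature adoption helper follows the percentage calculation described earlier.

```python
def min_max_normalize(values: list[float], higher_is_better: bool = True) -> list[float]:
    """Rescale raw metric values to 0-100 across the portfolio."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Every account is identical on this metric; assign a neutral score.
        return [50.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) * 100 for v in values]
    return scaled if higher_is_better else [100 - s for s in scaled]


def feature_adoption_pct(features_used: int, features_available: int) -> float:
    """Percentage of available features an account actually uses."""
    if features_available == 0:
        return 0.0
    return features_used / features_available * 100


monthly_logins = [4, 12, 20]   # one value per account
monthly_tickets = [2, 10, 6]   # fewer tickets is treated as healthier

print(min_max_normalize(monthly_logins))                           # [0.0, 50.0, 100.0]
print(min_max_normalize(monthly_tickets, higher_is_better=False))  # [100.0, 0.0, 50.0]
print(feature_adoption_pct(6, 24))                                 # 25.0
```

Min-max scaling is sensitive to outliers, so a single very heavy user can compress everyone else's score. Percentile ranks or capped ranges are reasonable alternatives if that becomes a problem.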

5 Best Practices for Building Customer Health Scores

Building effective customer health scores requires solving practical implementation challenges. Here are five best practices that separate continuous, actionable health scoring from quarterly spreadsheet exercises.

Best Practice #1: Automate Multi-Source Data Collection

Compiling customer health data means opening multiple platforms one by one. Check your analytics platform for usage patterns. Switch to Zendesk for support ticket volume. Jump to Stripe for billing status. Open HubSpot for engagement metrics. Review email threads for recent communication context.

Each platform holds one piece. No single system shows you the complete picture.

A comprehensive account review takes 4-6 hours. That covers not just opening platforms, but exporting data, copying information into spreadsheets, and looking for patterns across disconnected systems.

Does declining usage correlate with an increase in support tickets? Manual cross-check required. Low engagement connected to billing issues? Another manual cross-check.

Here's the math problem. Customer success teams managing 100-500 accounts can't sustain this pace. Four hours per comprehensive review times 200 accounts? That's 800 hours of data compilation before you've had a single strategic customer conversation.

The impact shows up in what you miss. Support tickets show frustration while usage metrics decline. But no one connects these dots because the data lives in separate systems.

By the time you finish compiling information from all platforms, account health has already changed. Expansion opportunities slip through; high satisfaction in one system never gets correlated with high usage in another.

Customer success becomes reactive. You discover problems when customers complain or cancel, not proactively through health data patterns.

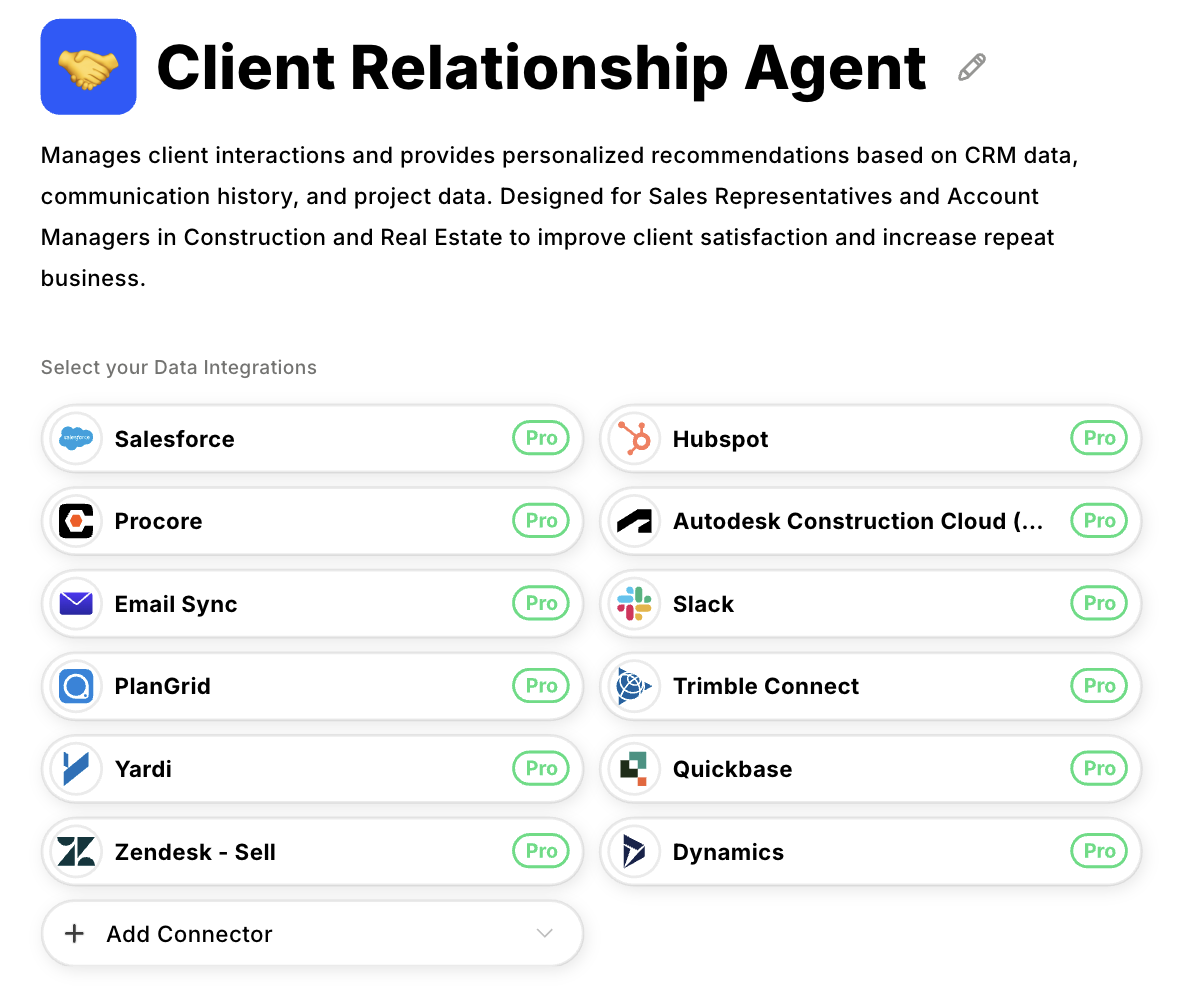

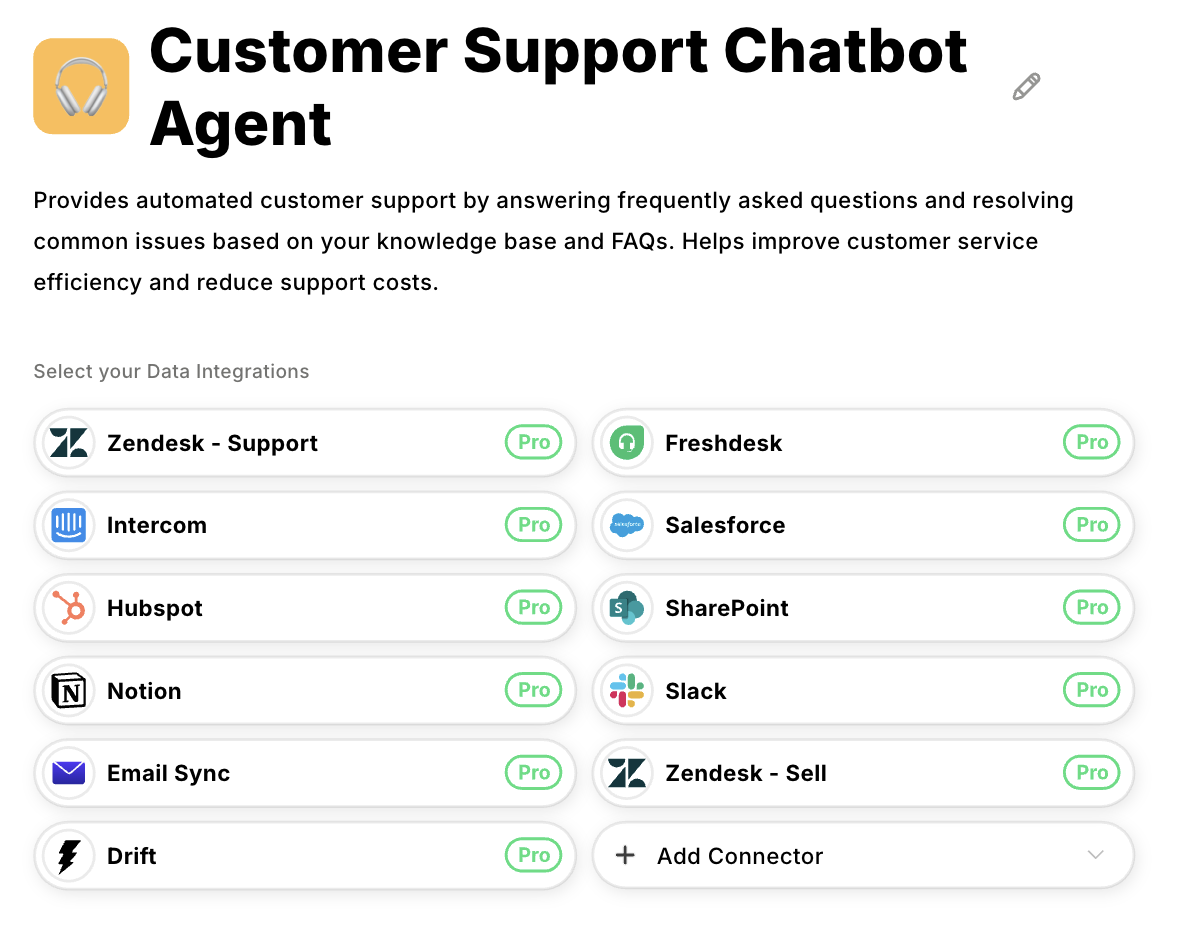

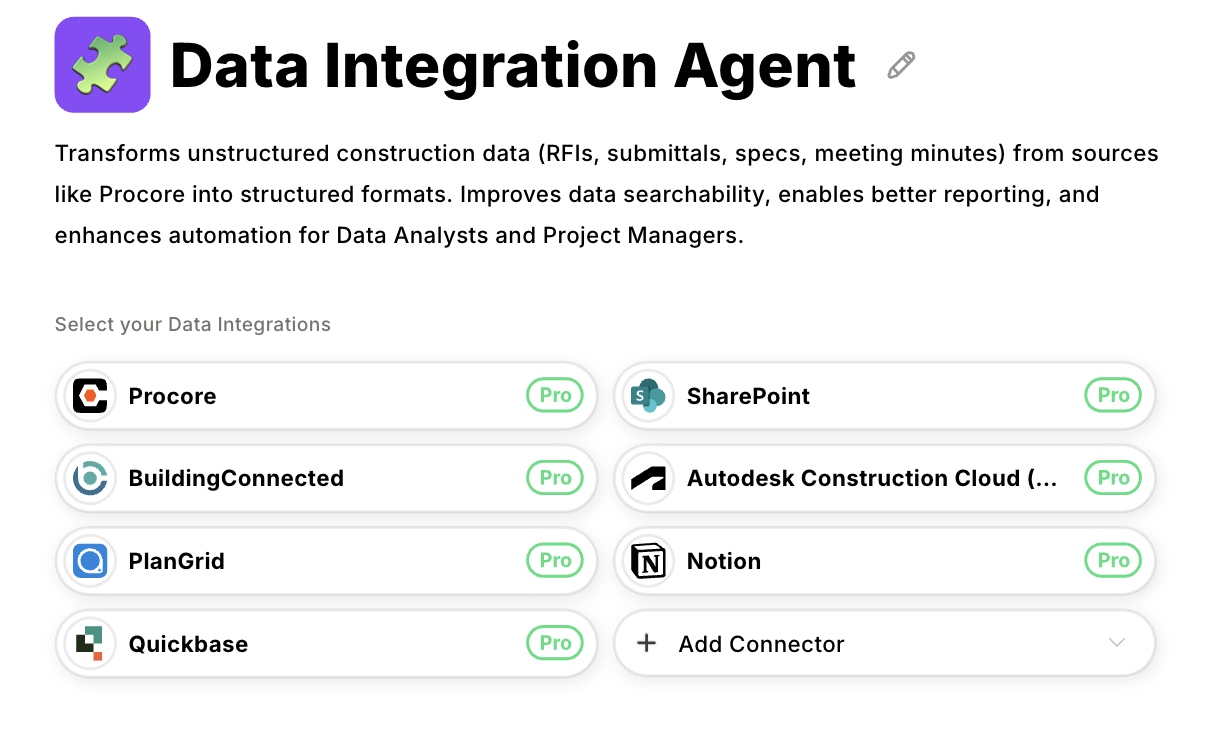

This data aggregation problem requires automation. Datagrid's AI agents integrate with 100+ data sources, including CRMs, document repositories, and project management tools. They pull data from multiple systems, replacing work that normally takes hours of manual platform hopping.

Best Practice #2: Enable Continuous Score Updates

Manual calculation creates a frustrating cycle. Initial calculation takes days of work. Those scores become outdated while you're still finishing the last accounts. So teams settle for quarterly reviews instead of continuous monitoring.

By the time you recalculate three months later, the accounts that changed most are the ones you've already missed.

Automation breaks this pattern entirely. Automated systems pull metrics from all platforms: usage data, support tickets, billing status, and engagement metrics.

No more manual exports. No more spreadsheet updates. Health scores recalculate automatically as data changes. Account health shifts are visible immediately instead of sitting invisible for three months.

The capacity impact is real. CSMs managing 50 accounts manually can scale to 150+ accounts with automated scoring. Time that previously went to data gathering now goes to actual customer conversations. Portfolio growth no longer requires proportional headcount increases.

Here's what makes continuous scoring valuable. Consider an account in slow decline over three months. Quarterly snapshots miss this pattern completely; each check looks stable. Continuous scoring catches the downward trend early, while you still have time to intervene.
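A minimal way to catch that kind of gradual decline is to fit a trend line to recent scores rather than comparing two snapshots. The sketch below assumes weekly scores are stored per account; the sample data and the threshold of half a point per week are illustrative and would need tuning for a real portfolio.

```python
def weekly_slope(scores: list[float]) -> float:
    """Least-squares slope of score against week index, in points per week."""
    n = len(scores)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(scores))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def is_declining(scores: list[float], threshold: float = -0.5) -> bool:
    """Flag a sustained downward trend (threshold is an assumed value)."""
    return weekly_slope(scores) <= threshold


# Thirteen weekly scores: no single week-over-week change exceeds one point.
weekly_scores = [82, 81, 81, 80, 79, 79, 78, 77, 77, 76, 75, 74, 73]

print(round(weekly_slope(weekly_scores), 2))
print(is_declining(weekly_scores))
```

No individual week looks alarming here, yet the fitted slope is steep enough to trip the flag well before the next quarterly review would.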

Continuous recalculation requires automation. Datagrid's agents pull metrics continuously from connected data sources. As data updates (new support tickets, usage changes, engagement shifts), health scores reflect the changes automatically.

Best Practice #3: Integrate Historical Context

Health scores show the current account status. Historical context explains why those scores look the way they do. Customer history (past commitments, interaction patterns, support trends) provides the context that turns scores into actionable intelligence.

The problem is that historical context lives scattered across systems. Contract terms sit in DocuSign. Custom commitments exist in email threads and meeting notes. Support ticket history spans months or years.

Communication records are spread across shared drives, Google Docs, and project management tools. Accessing this context requires hours of manual document hunting.

Finding contract context takes time. You search DocuSign for signed agreements. Check shared drives for SOWs. Scan through old meeting notes for verbal commitments.

Multi-year customers make it worse. Initial agreements get amended, expansions add terms, and custom arrangements get documented in scattered emails. Contract history spans dozens of files across different systems.

Communication context requires similar effort. Customer relationships generate documentation at every stage: kickoff calls, quarterly reviews, support escalations, expansion discussions, and executive check-ins.

Each creates records like meeting notes, follow-up emails, shared documents, and action items. Comprehensive review takes 1-2 hours per account.

Without easy access to context, health scores show symptoms without explanation. Low engagement score: is this normal for this customer type, or a recent change? Support ticket volume increasing: compared to what baseline? Payment delays rising: first-time or recurring pattern?

Context turns health scores into a diagnosis. You're not just seeing "score dropped 15 points." You understand why based on contract commitments, past interaction patterns, and support history. Decisions improve when scores are accompanied by context.

Datagrid's AI agents process documentation across systems, including contracts, SOWs, meeting notes, and shared files. They compile context automatically, replacing hours of document hunting per account.

Best Practice #4: Handle Support Ticket Volume at Scale

Support tickets carry early warning signs of account health: frustration patterns developing, issues recurring across contacts, customer tone shifting. Manual ticket review catches these patterns, but portfolio volume makes comprehensive analysis impossible.

Manual analysis takes hours. You're reading through each ticket, noting recurring issues, tracking sentiment changes. Found three tickets about the same problem in two weeks? That pattern matters.

Are responses getting shorter and more frustrated? Warning sign worth noting. Catching these signals means reviewing every ticket for every account individually.

Here's the volume challenge. Managing 200 accounts with 10-15 monthly tickets each creates 2,000-3,000 tickets to review.

Support teams resolve urgent individual tickets. Aggregate pattern analysis? Doesn't happen at that scale. Three medium-priority tickets in two weeks signal building frustration, but tickets get handled one at a time without account-level context.
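The "three tickets about the same problem in two weeks" pattern is simple to check once tickets carry an account and a topic label. The sketch below assumes tickets have already been tagged with a topic (by hand or by a classifier); the tuple layout and sample data are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta


def recurring_issues(tickets, min_count=3, window=timedelta(days=14)):
    """Return (account, topic) pairs with min_count tickets inside the window.

    tickets: iterable of (account, topic, opened_on) tuples.
    """
    grouped = defaultdict(list)
    for account, topic, opened_on in tickets:
        grouped[(account, topic)].append(opened_on)

    flagged = []
    for key, dates in grouped.items():
        dates.sort()
        # Check each run of min_count consecutive tickets against the window.
        for i in range(len(dates) - min_count + 1):
            if dates[i + min_count - 1] - dates[i] <= window:
                flagged.append(key)
                break
    return flagged


tickets = [
    ("Acme", "sso-login", date(2024, 3, 1)),
    ("Acme", "sso-login", date(2024, 3, 6)),
    ("Acme", "sso-login", date(2024, 3, 12)),
    ("Acme", "billing", date(2024, 3, 3)),
    ("Globex", "export", date(2024, 3, 1)),
    ("Globex", "export", date(2024, 4, 20)),
]

print(recurring_issues(tickets))  # [('Acme', 'sso-login')]
```

Each ticket might be medium priority on its own. Grouping by account and topic is what surfaces the building frustration.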

Issues surface late without pattern analysis. Customer frustration shows up in renewal discussions rather than weeks earlier through ticket trends. Full support context isn't available because reviewing months of history manually before every customer conversation isn't scalable.

This volume problem requires automated pattern recognition. Datagrid's AI agents automatically process support documentation across your portfolio. The ticket-by-ticket analysis work happens without manual review.

Best Practice #5: Cross-Reference Data for Accuracy and Validation

Health scores are only as good as the data feeding them. When customer data lives across multiple systems, conflicts emerge. Support tickets suggest one thing. Usage data shows another. Contract records don't match CRM notes.

These discrepancies create false positives (healthy accounts flagged as at-risk) and false negatives (real problems missed).

Manual cross-referencing takes time you don't have. Notice a low engagement score? You need to verify. Check CRM logs, review calendar history, scan email patterns, and compare contract terms. One account takes 30-45 minutes. Across hundreds of accounts? Impossible.

Data conflicts go unnoticed until they create problems. The customer flagged as low engagement actually participates through untracked channels. A contract requires quarterly reviews, but engagement scoring doesn't reflect that.

Support tickets mention feature requests that are missing from roadmap tracking. These mismatches distort scores and waste resources on investigating false alarms while real issues get overlooked.

Cross-referencing validates data before it impacts decisions. Catch conflicts between contract commitments and actual engagement. Spot discrepancies between support patterns and usage trends. Accurate data leads to accurate scoring.
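At its simplest, cross-referencing means comparing the same fields across two systems and listing where they disagree. The sketch below assumes contract and CRM records have already been exported into dictionaries keyed by account ID; the field names `renewal_date` and `review_cadence` are hypothetical.

```python
def cross_reference(contracts, crm, fields=("renewal_date", "review_cadence")):
    """Compare shared accounts field by field.

    Returns (conflicts, unmatched), where conflicts holds
    (account_id, field, contract_value, crm_value) tuples and unmatched
    lists account IDs present in only one system.
    """
    conflicts = []
    for account_id in sorted(contracts.keys() & crm.keys()):
        for field in fields:
            contract_value = contracts[account_id].get(field)
            crm_value = crm[account_id].get(field)
            if contract_value != crm_value:
                conflicts.append((account_id, field, contract_value, crm_value))
    unmatched = sorted(contracts.keys() ^ crm.keys())
    return conflicts, unmatched


contracts = {
    "acme": {"renewal_date": "2025-01-31", "review_cadence": "quarterly"},
    "globex": {"renewal_date": "2025-06-30", "review_cadence": "monthly"},
}
crm = {
    "acme": {"renewal_date": "2025-01-31", "review_cadence": "monthly"},
    "initech": {"renewal_date": "2025-09-30", "review_cadence": "quarterly"},
}

conflicts, unmatched = cross_reference(contracts, crm)
print(conflicts)  # [('acme', 'review_cadence', 'quarterly', 'monthly')]
print(unmatched)  # ['globex', 'initech']
```

In this example, the Acme contract calls for quarterly reviews while the CRM expects monthly ones, which is exactly the kind of mismatch that would make a healthy account look disengaged.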

This validation problem requires automated cross-referencing. Datagrid's AI agents cross-reference information across document types and data sources.

They identify discrepancies between systems without manual work. Contract commitments versus actual engagement. Support patterns versus usage trends. Hours of validation happen automatically.

Turn Hours of Data Gathering Into Minutes with AI Agents

Manual data gathering limits everything else. Your team can't track health scores continuously because compiling data takes hours per account. Support ticket review takes time.

Contract context requires document scanning. Communication history needs manual searching. You're choosing between thorough analysis and portfolio scale; customer success requires both.

Datagrid's AI agents handle these data-gathering bottlenecks.

- Integrate with 100+ data sources: Connect to CRMs, document repositories, and project management tools. Manual health data compilation that takes days happens automatically across your portfolio.

- Extract information from support tickets: Process support ticket documentation across accounts without manual ticket-by-ticket review. Work that takes 30-45 minutes per account is done automatically.

- Access contract documentation instantly: Search through contracts, SOWs, and amendment history. Hours of document scanning per renewal are eliminated. Enter conversations with complete context.

- Compile meeting documentation: Process meeting notes and shared files across your systems. Relationship context is compiled automatically instead of through manual searching.

- Handle larger portfolios: Teams managing 50-75 accounts manually scale to 150+ with automated data processing. Capacity is no longer limited by manual compilation work.

Ready to automate customer health score tracking?