Learn what RFPs are, how evaluation works, and how AI agents automate requirements extraction for project managers responding to construction RFPs.

When you're managing RFP responses for construction projects and professional services contracts, manual requirements extraction creates delays that compound into errors and lost opportunities.

While you're spending days building a compliance matrix for one solicitation, three more arrive. Miss a buried compliance requirement during rushed extraction, and your proposal gets disqualified regardless of your technical capability.

The challenge isn't any single RFP; it's managing several simultaneously under deadline pressure. Manual document processing doesn't just slow you down; it forces you to decline winnable work because you lack the time to extract the requirements.

What is an RFP (Request for Proposal)?

An RFP is a formal procurement document that organizations use to solicit competitive bids from vendors for specific projects or services. It's the buyer's way of saying, "Here's exactly what we need—show us why you're the best choice to deliver it."

The RFP serves as both an invitation and a rulebook. Organizations publish their requirements, evaluation criteria, and submission guidelines so every vendor competes on an equal footing with access to identical information.

Once a contract is awarded, the executed contract—often informed by the RFP—becomes the legally binding baseline for measuring project success and vendor performance.

Think of it as structured procurement: instead of informal conversations or relationship-based decisions, the RFP forces buyers to document every expectation—project scope, technical specifications, delivery timelines, budget parameters, compliance requirements—in writing.

This documentation protects both parties. Vendors know exactly what they're committing to deliver, and buyers have clear grounds for holding vendors accountable.

The transparency built into RFPs serves three critical purposes. First, it encourages genuine competition. When requirements and scoring criteria are public, the best solution wins, not the best relationship.

Second, it helps buyers compare wildly different approaches side by side using standardized criteria. Third, it creates accountability once contracts are signed, giving both parties a reference point for resolving disputes or measuring performance.

What is the Difference Between an RFP and an RFQ?

RFQs are shopping lists. You know what you need: specific materials, quantities, specs. A construction firm ordering rebar sends an RFQ: grade 60 steel, 50 tons, delivery by March 15th. Suppliers compete on price for identical requirements. The lowest compliant quote usually wins.

RFPs are different. The buyer describes the problem, not the solution. A city needs bridge rehabilitation, but doesn't dictate the engineering approach. Contractors propose their own methods: epoxy injection, carbon fiber wrapping, or section replacement. The technical approach matters as much as the cost. Maybe more.

The distinction changes how you respond. RFQs need pricing for defined deliverables. RFPs need a strategy: how you'll solve the problem, why your approach works, and proof that you can execute. One's transactional. The other is consultative.

What Components are in an RFP Document?

RFPs follow a standard structure across six sections. Understanding what's in each section helps you extract requirements faster.

- Introduction: Reveals client pain points; quote it when articulating your value

- Project scope and requirements: Defines must-haves you need to map one-to-one with your capabilities

- Deliverables and timelines: Lets you flag risks early and propose realistic schedules

- Evaluation criteria: Shows how points get awarded, directing where to emphasize your strengths

- Submission guidelines: Dictates compliance checks; a wrong font, wrong file name, or late upload kills even the best proposal before evaluation starts

- Terms and conditions: Outlines the binding terms that will govern the awarded contract

This sequence mirrors procurement decision-making. Organizations establish context first, define their needs, set expectations, explain how they'll evaluate, dictate submission mechanics, and outline binding terms.

Government and private sector RFPs may organize these sections differently, but they all cover the same ground.

Where are RFPs Used?

RFPs are used across several industries:

- Public infrastructure projects: Government RFPs for roads, bridges, utilities, and facilities with strict FAR compliance, bonding requirements, and lengthy technical specifications

- Commercial construction contracts: Private sector RFPs for office buildings, retail centers, and industrial facilities emphasizing experience, references, and methodology

- Design-build proposals: Combined design and construction RFPs requiring coordination across architecture, engineering, and execution phases with value engineering and lifecycle analysis

- Professional services consulting: RFPs for engineering, architecture, and technical advisory services emphasizing team qualifications and past performance over technical specs

Beyond these categories, RFPs show up anywhere in construction, engineering, and professional services where organizations need competitive bids for complex projects.

How Do Organizations Score and Select Winning Proposals?

Once you submit your proposal, evaluators follow a structured process to score submissions, compare vendors, and select a winner.

Most scoring templates group points into five categories, though the weight of each shifts by industry and project type:

- Technical capability: Requirements compliance, integration fit, security posture

- Cost: Total cost of ownership, not just sticker price

- Vendor qualifications: Similar-project experience, references, and financial health

- Implementation approach: Project plan, risk mitigation tactics, staffing model

- Ongoing support: SLAs, training, escalation paths

First, evaluators screen for mechanical compliance: correct file format, on-time submission, required signatures, and page limits. Proposals with mechanical errors get rejected before anyone reads the content. It's the fastest way to fail.

Second comes mandatory requirements verification. Words like "shall," "must," and "required" signal pass/fail checkpoints. Miss even one item and your proposal will be automatically rejected.

This compliance check happens before scoring of the five categories above, regardless of how strong your technical approach is.
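
To make the keyword signal concrete, here is a minimal Python sketch that flags sentences containing "shall," "must," or "required." The function name, the naive sentence splitting, and the sample text are illustrative assumptions; real solicitations need sturdier parsing, and a keyword match only marks a candidate for human review.

```python
import re

# Keywords the article names as pass/fail signals.
MANDATORY_PATTERN = re.compile(r"\b(shall|must|required)\b", re.IGNORECASE)


def find_mandatory_sentences(text: str) -> list[str]:
    """Return sentences that contain at least one mandatory keyword."""
    # Naive split: break after ., !, or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if MANDATORY_PATTERN.search(s)]


# Hypothetical sample text, not taken from a real solicitation.
sample = (
    "The contractor shall provide a performance bond. "
    "Site visits are encouraged. "
    "Proof of insurance is required with the submission. "
    "Bidders must hold a current state license."
)

mandatory = find_mandatory_sentences(sample)
for sentence in mandatory:
    print(sentence)
```

Each flagged sentence would become a row in the compliance matrix, with a person confirming whether it is truly a pass/fail item.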

Third, proposals that pass both checkpoints get scored competitively. Evaluators assess each of the five categories and multiply the raw points by their respective weight percentages. High-weight criteria control final rankings.

If technical capability carries 40% weight and cost carries 20%, your technical section matters twice as much as your pricing.
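
The weighting arithmetic can be sketched in a few lines of Python. Only the 40% technical and 20% cost weights come from the example above; the other three weights, the 0 to 100 raw scale, and both vendors' scores are assumptions made up for illustration.

```python
def weighted_score(raw: dict[str, float], weights: dict[str, float]) -> float:
    """Multiply each category's raw points by its weight and sum the results."""
    if set(raw) != set(weights):
        raise ValueError("raw scores and weights must cover the same categories")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(raw[category] * weights[category] for category in weights)


# 0.40 and 0.20 follow the article's example; the rest are hypothetical.
weights = {
    "technical": 0.40,
    "cost": 0.20,
    "qualifications": 0.15,
    "implementation": 0.15,
    "support": 0.10,
}

# Hypothetical raw scores on a 0-100 scale.
vendor_a = {"technical": 90, "cost": 60, "qualifications": 80,
            "implementation": 70, "support": 75}
vendor_b = {"technical": 70, "cost": 95, "qualifications": 80,
            "implementation": 70, "support": 75}

score_a = weighted_score(vendor_a, weights)
score_b = weighted_score(vendor_b, weights)
print(f"Vendor A: {score_a:.1f}, Vendor B: {score_b:.1f}")
```

In this made-up comparison, Vendor A finishes at roughly 78 against Vendor B's 77 even though B scores 35 points higher on cost, because the 20-point technical gap carries double the weight.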

6 Best Practices for Responding to RFPs

Winning RFP responses require speed without sacrificing accuracy. Miss one buried requirement and weeks of work get rejected before evaluation. These six practices help you move faster without the errors that cause disqualification.

Best Practice #1: Automate Requirements Extraction

Requirements extraction consumes days per RFP and blocks all downstream work. Two problems create this bottleneck: requirements get missed because they're buried across documents, and sequential processing limits proposal capacity.

RFP requirements scatter everywhere. Insurance certificates in Appendix C. Bonding thresholds in Section K. Technical certifications in annexes that the table of contents never mentions.

You're reading the document multiple times, cross-referencing between sections, hoping you caught everything. Miss one buried requirement, and your proposal gets rejected before evaluation.

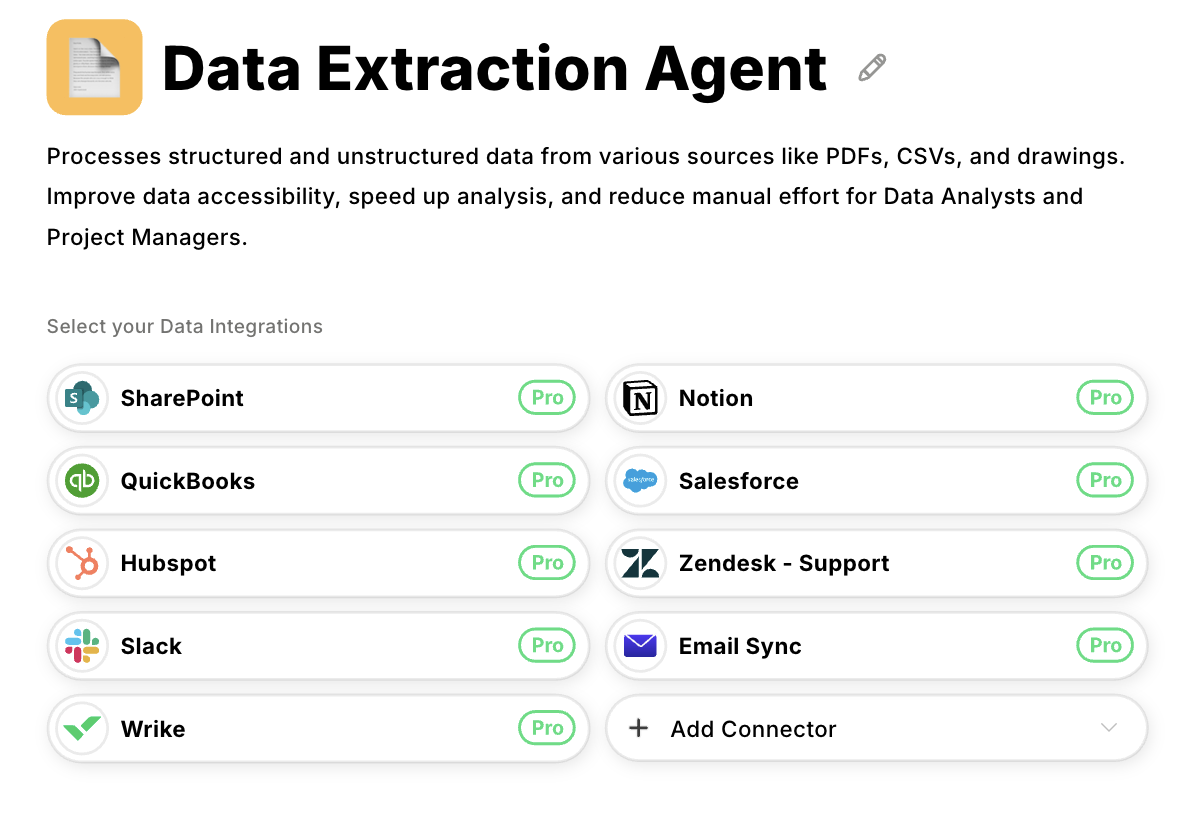

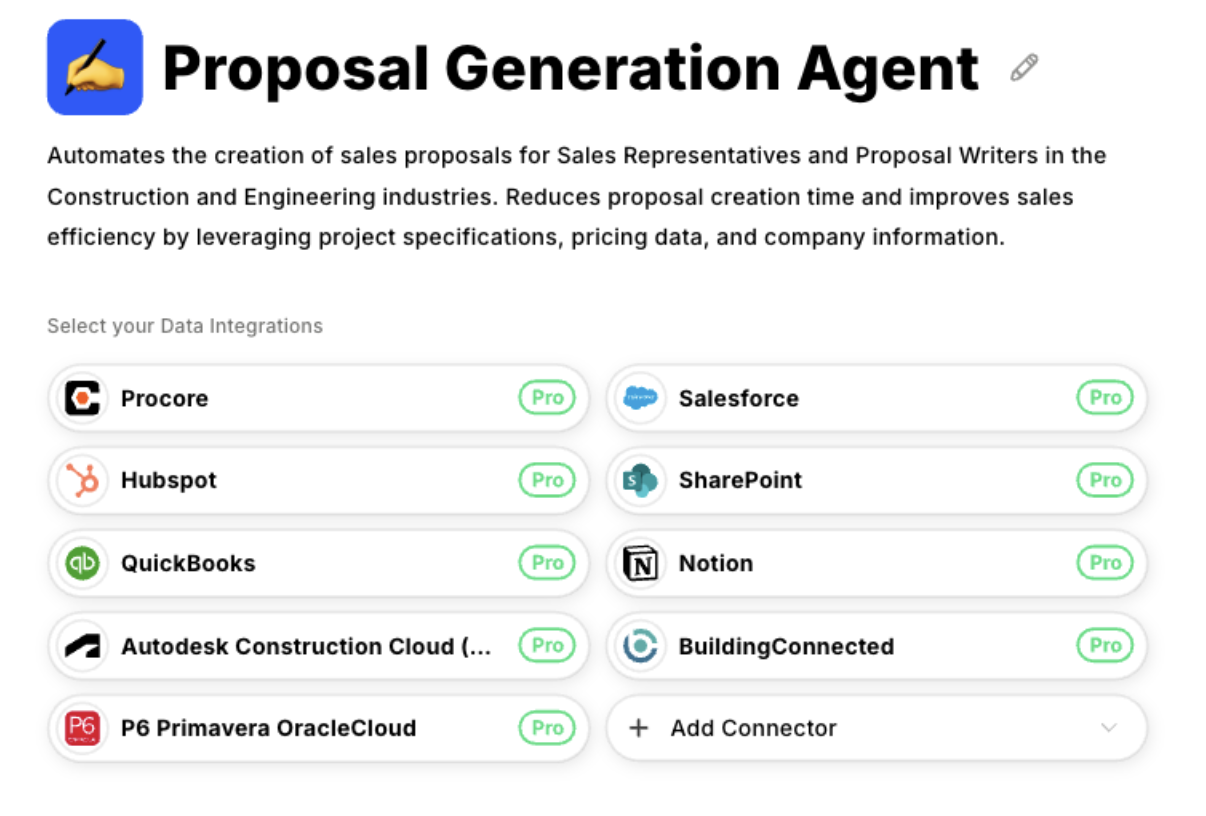

This is where AI agents help. Datagrid's agents scan entire documents and identify every mandatory requirement regardless of location. You get structured lists immediately—pass/fail items, evaluation criteria, compliance checkpoints. Nothing gets missed.

The second constraint is capacity. Each RFP needs extraction before drafting starts. You can't work on Proposal B while reading Proposal A. Five proposals means processing them sequentially.

That's weeks of extraction while deadlines pass. You decline opportunities or rush extraction and risk missing requirements.

Agents change this. They process multiple documents simultaneously. Your team drafts one RFP while agents extract three others in parallel. A week of manual work becomes hours.

Teams that handled three RFPs per month can pursue ten because extraction no longer limits capacity.
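
The difference between a sequential queue and parallel extraction can be illustrated with Python's standard `concurrent.futures` module. This is a generic sketch, not Datagrid's API: `extract_requirements` is a hypothetical stand-in that simply keeps lines containing "shall," and the document texts are invented.

```python
from concurrent.futures import ThreadPoolExecutor


def extract_requirements(document_text: str) -> list[str]:
    """Hypothetical stand-in for an extraction step: keep lines containing 'shall'."""
    return [
        line.strip()
        for line in document_text.splitlines()
        if "shall" in line.lower()
    ]


# Invented sample documents keyed by a made-up RFP name.
documents = {
    "rfp_a": "Bidder shall provide bonding.\nSite visit optional.",
    "rfp_b": "Contractor shall carry insurance.\nContractor shall submit a schedule.",
    "rfp_c": "Questions are due Friday.",
}

# Submit every document at once instead of waiting for each to finish in turn.
# pool.map returns results in the same order as its input.
with ThreadPoolExecutor(max_workers=3) as pool:
    extracted = pool.map(extract_requirements, documents.values())
    results = dict(zip(documents, extracted))

for name, requirements in results.items():
    print(name, len(requirements))
```

Threads suit this pattern when each extraction mostly waits on I/O, such as a call to an external service; CPU-heavy parsing in Python would typically use processes instead.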

Automation handles the mechanical bottleneck. The practices below address what humans still need to manage.

Best Practice #2: Maintain Your Requirements Matrix

Once agents extract requirements into structured lists, maintain this tracking system throughout the response cycle. Assign owners to each requirement. Track completion status. Your matrix becomes the coordination hub for the entire proposal team.

When amendments arrive, agents update the matrix automatically, but you still need to notify section owners about changes that affect their work. A revised bonding threshold or new technical certification requirement means someone's section needs updating; your job is ensuring they know about it.

Cross-reference requirements that appear in multiple sections. Evaluators check these most carefully because they reveal whether you understand how different requirements connect.

If pricing references technical specifications, and the technical sections reference compliance items, your matrix should clearly show these relationships. Keep status current so everyone knows what's addressed and what's still pending. Writers need to see which requirements their sections must cover.

Reviewers need to verify that every requirement has been addressed somewhere. The matrix is your single source of truth from extraction through final submission.
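
One way to picture the matrix is as a list of records with an owner, a status, and cross-references. The sketch below is a minimal illustration in Python; the field names, status values, and sample requirements are assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    source: str              # where the requirement appears in the RFP
    owner: str               # section owner responsible for the response
    status: str = "pending"  # "pending" or "addressed"
    related: list[str] = field(default_factory=list)  # ids this item references


def pending(matrix: list[Requirement]) -> list[str]:
    """Ids of requirements that nobody has marked as addressed yet."""
    return [r.req_id for r in matrix if r.status != "addressed"]


def owners_to_notify(matrix: list[Requirement], changed_id: str) -> list[str]:
    """Owners of the changed requirement and of any requirement that references it."""
    return sorted(
        {r.owner for r in matrix if r.req_id == changed_id or changed_id in r.related}
    )


# Hypothetical entries for illustration.
matrix = [
    Requirement("R1", "Bonding threshold", "Section K", "legal"),
    Requirement("R2", "Pricing schedule", "Section B", "pricing", related=["R1"]),
    Requirement("R3", "Technical certification", "Appendix C", "engineering",
                status="addressed"),
]

print(pending(matrix))
print(owners_to_notify(matrix, "R1"))
```

If an amendment revises the bonding threshold (R1 here), the lookup returns both the legal owner and the pricing owner whose section references it, which is the notification step described above.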

Best Practice #3: Participate Actively in the Q&A Period

Most teams skip Q&A periods because they're racing to draft responses. This costs them later when unclear specifications become fatal assumptions. Draft questions as soon as you spot ambiguity—waiting until late in the cycle means answers may arrive too late to adjust your approach.

Monitor all public Q&A responses, not just answers to your questions. Other vendors often surface interpretation issues you overlooked. Treat Q&A responses as requirement updates that modify your technical approach.

Teams that incorporate clarifications early build aligned proposals. Teams that treat Q&A as optional context often submit responses that technically answer the original question but miss what the issuer actually needs.

Best Practice #4: Mirror Their Evaluation Structure Explicitly

Evaluators work from scoring rubrics with specific criteria in specific sequences. When your proposal mirrors their structure—matching section headers, answering in their order, using their evaluation language—you make scoring straightforward.

When your structure diverges, evaluators hunt for information, miss your strongest points, and score you lower.

For each criterion, provide explicit evidence linked directly to that requirement: performance metrics from comparable projects, relevant case studies, and current certifications.

Generic capability statements buried in narrative paragraphs don't map to scoring rubrics. Specific, well-signposted evidence does.

Best Practice #5: Avoid Last-Minute Scrambles That Compromise Quality

RFP responses need input from technical teams, pricing, legal, compliance, and operations. The typical failure pattern: engaging these teams too late, then rushing their contributions during the final week.

This produces proposals with misaligned technical and pricing sections, inconsistent terminology, and gaps that surface during final review when there's no time to fix them.

Assign clear section ownership when you decide to bid. Set internal deadlines that account for realistic review cycles. Use shared tracking that shows progress and blockers to everyone at the same time.

Early coordination with realistic timelines prevents the weekend fire drills that burn out teams and compromise proposal quality.

Best Practice #6: Conduct a Final Compliance Audit

This is your last defense against disqualification. Have someone who didn't draft the proposal trace every matrix requirement to its location in your final document.

Fresh eyes catch what authors miss: requirements addressed in the wrong section, claims made without supporting evidence, and mandatory attachments that didn't get attached.

Check all mechanical requirements that trigger automatic disqualification: file formats, page limits, naming conventions, and required signatures.

Use the issuer's evaluation checklist if they provide one; it shows exactly what they'll verify first. This systematic verification eliminates the "lost on a technicality" post-mortems that waste otherwise strong proposals.
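
The mechanical checks lend themselves to a simple scripted pre-flight. The Python sketch below assumes hypothetical rules (PDF only, a 50-page limit, a specific file-naming pattern); the actual values always come from the solicitation's submission guidelines.

```python
import re
from dataclasses import dataclass


@dataclass
class SubmissionRules:
    allowed_extension: str  # e.g. ".pdf"
    max_pages: int
    filename_pattern: str   # regular expression the full file name must match


def mechanical_failures(filename: str, page_count: int, signed: bool,
                        rules: SubmissionRules) -> list[str]:
    """Return a list of mechanical problems; an empty list means none were found."""
    failures = []
    if not filename.lower().endswith(rules.allowed_extension):
        failures.append("wrong file format")
    if page_count > rules.max_pages:
        failures.append("over page limit")
    if not re.fullmatch(rules.filename_pattern, filename):
        failures.append("file name does not follow naming convention")
    if not signed:
        failures.append("missing required signature")
    return failures


# Hypothetical rules, invented for this example.
rules = SubmissionRules(
    allowed_extension=".pdf",
    max_pages=50,
    filename_pattern=r"[A-Za-z0-9]+_Technical_Proposal\.pdf",
)

print(mechanical_failures("Acme_Technical_Proposal.pdf", 48, True, rules))
print(mechanical_failures("proposal final.docx", 62, False, rules))
```

A script like this supplements the independent reviewer rather than replacing them: it catches the countable items, while the reviewer still traces each matrix requirement to its place in the document.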

Automate Your RFP Response Process With AI Agents

Requirements extraction creates the bottleneck that determines how many proposals you can pursue. When extraction takes days per solicitation, you're forced to choose between declining winnable opportunities or rushing the analysis and missing mandatory requirements.

Datagrid creates AI agents that handle this bottleneck.

- Process multiple RFPs simultaneously: Extract requirements from several documents in parallel while your team drafts responses, eliminating the sequential queue that forces you to decline opportunities.

- Complete extraction in hours instead of days: What takes a week of manual reading finishes automatically in hours. Your team starts drafting with immediate, complete visibility into every requirement.

- Identify every buried requirement: Agents scan entire documents and surface all mandatory items, evaluation criteria, and compliance checkpoints regardless of location. No more missed requirements that trigger disqualification.

- Expand proposal capacity without adding staff: Teams that handled 3 RFPs per month can handle 10+ because extraction no longer constrains capacity.

Ready to deploy AI agents that transform your RFP response process?